Blog

Concept Note: Network Neutrality in South Asia

Network Neutrality South Asia Concept Note _ORF CIS.pdf

—

PDF document,

238 kB (244150 bytes)

The Case of Whatsapp Group Admins

Censorship laws in India have now roped in group administrators of chat groups on instant messaging platforms such as Whatsapp (group admin(s)) for allegedly objectionable content that was posted by other users of these chat groups. Several incidents[1] were reported this year where group admins were arrested in different parts of the country for allowing content that was allegedly objectionable under law. A few reports mentioned that these arrests were made under Section 153A[2] read with Section 34[3] of the Indian Penal Code (IPC) and Section 67[4] of the Information Technology Act (IT Act).

Targeting a group admin for content posted by other members of a chat group has raised concerns about how this liability is imputed. Should a group admin be considered an intermediary under Section 2 (w) of the IT Act? If so, would a group admin be protected from such liability?

Group admin as an intermediary

Whatsapp is an instant messaging platform which can be used for mass communication by creating a chat group. A chat group is a feature on Whatsapp that allows joint participation of Whatsapp users. A single chat group can have up to 100 users. Every chat group has one or more group admins who control participation in the group by adding or removing people.[5] It is imperative to understand whether, by choosing to create a chat group on Whatsapp, a group admin can become liable for content posted by other members of the chat group.

Section 34 of the IPC provides that when a number of persons engage in a criminal act with a common intention, each person is made liable as if he alone did the act. Common intention implies a pre-arranged plan and acting in concert pursuant to the plan. It is interesting to note that group admins have been arrested under Section 153A on the ground that a group admin and a member posting content on a chat group that is actionable under this provision have common intention to post such content on the group. But would this hold true when for instance, a group admin creates a chat group for posting lawful content (say, for matchmaking purposes) and a member of the chat group posts content which is actionable under law (say, posting a video abusing Dalit women)? Common intention can be established by direct evidence or inferred from conduct or surrounding circumstances or from any incriminating facts.[6]

We need to understand whether common intention can be established in case of a user merely acting as a group admin. For this purpose it is necessary to see how a group admin contributes to a chat group and whether he acts as an intermediary.

We know that parameters for determining an intermediary differ across jurisdictions, and most global organisations have categorised intermediaries based on their role or technical functions.[7] Section 2 (w) of the Information Technology Act, 2000 (IT Act) defines an intermediary as any person who, on behalf of another person, receives, stores or transmits messages or provides any service with respect to that message, and includes telecom service providers, network providers, internet service providers, web-hosting service providers, search engines, online payment sites, online-auction sites, online marketplaces and cyber cafés. Does a group admin receive, store or transmit messages on behalf of group participants, provide any service with respect to messages of group participants, or fall within any category mentioned in the definition? Whatsapp does not allow a group admin to receive or store messages on behalf of another participant in a chat group. Every group member independently controls his posts on the group. However, a group admin helps in transmitting messages of another participant to the group by allowing the participant to be a part of the group, thus effectively providing a service in respect of those messages. A group admin should therefore be considered an intermediary. His contribution to the chat group is, however, limited to allowing participation; this is discussed in further detail in the section below.

According to a 2010 report[8] by the Organisation for Economic Co-operation and Development (OECD), an internet intermediary brings together or facilitates transactions between third parties on the Internet. It gives access to, hosts, transmits and indexes content, products and services originated by third parties on the Internet, or provides Internet-based services to third parties. A Whatsapp chat group allows people who are not on your contact list to interact with you if they are on the group admin's contact list. In facilitating this interaction, a group admin may be considered an intermediary under the OECD definition.

Liability as an intermediary

Section 79 (1) of the IT Act protects an intermediary from any liability under any law in force (for instance, liability under Section 153A pursuant to the rule laid down in Section 34 of IPC) if the intermediary fulfils certain conditions laid down therein. An intermediary is required to carry out certain due diligence obligations laid down in Rule 3 of the Information Technology (Intermediaries Guidelines) Rules, 2011 (Rules). These obligations include monitoring content that infringes intellectual property, threatens national security or public order, is obscene or defamatory, or violates any law in force (Rule 3(2)).[9] An intermediary is liable for publishing or hosting such user generated content; however, as mentioned earlier, this liability is conditional. Section 79 of the IT Act states that an intermediary would be liable only if it initiates the transmission, selects the receiver of the transmission, and selects or modifies the information contained in the transmission that falls under any category mentioned in Rule 3 (2) of the Rules. While a group admin has the ability to facilitate sharing of information and select receivers of such information, he has no direct editorial control over the information shared. Group admins can only remove members; they cannot remove or modify the content posted by members of the chat group. An intermediary is liable in the event it fails to comply with the due diligence obligations laid down under Rule 3 (2) and 3 (3) of the Rules. However, since a group admin lacks the authority to initiate transmission himself and to control content, he cannot comply with these obligations. Therefore, a group admin would be protected from any liability arising out of third party/user generated content on his group pursuant to Section 79 of the IT Act.

It is, however, relevant to consider whether the ability of a group admin to remove participants amounts to an indirect form of editorial control.

Other pertinent observations

In several reports[10] there have been discussions about how holding a group admin liable makes the process convenient, as it is difficult to locate all the users of a particular group. This reasoning may not be correct, as the Whatsapp policy[11] makes it mandatory for a prospective user to provide his mobile number in order to use the platform, and no additional information is collected from group admins that would justify targeting them. Investigation agencies can access mobile numbers of Whatsapp users and gain more information from telecom companies.

It is also interesting to note that the group admins were arrested after a user or someone familiar to a user filed a complaint with the police about content being objectionable or hurtful. Earlier this year, the apex court had ruled in the case of Shreya Singhal v. Union of India[12] that an intermediary needed a court order or a government notification for taking down information. With actions taken against group admins on mere complaints filed by anyone, it is clear that the law enforcement officials have been overriding the mandate of the court.

Conclusion

According to a study conducted by a global research consultancy, TNS Global, around 38% of internet users in India use instant messaging applications such as Snapchat and Whatsapp on a daily basis, Whatsapp being the most widely used application. These figures indicate the scale of impact that arrests of group admins may have on our daily communication.

It is noteworthy that categorising a group admin as an intermediary would effectively make the Rules applicable to every Whatsapp user who intends to create a group. This would make the Rules difficult to enforce and would perhaps blur the distinction between users and intermediaries.

The critical question, however, is whether a chat group should be considered part of the bundle of services that Whatsapp offers to its users, rather than an independent platform that makes a group admin a separate entity. Also, would it be correct to compare a Whatsapp group chat with a conference call on Skype, or with sharing a Google document with edit rights, to understand the domain into which censorship laws are penetrating today?

Valuable contribution by Pranesh Prakash and Geetha Hariharan

[1] http://www.nagpurtoday.in/whatsapp-admin-held-for-hurting-religious-sentiment/06250951 ; http://www.catchnews.com/raipur-news/whatsapp-group-admin-arrested-for-spreading-obscene-video-of-mahatma-gandhi-1440835156.html ; http://www.financialexpress.com/article/india-news/whatsapp-group-admin-along-with-3-members-arrested-for-objectionable-content/147887/

[2] Section 153A. “Promoting enmity between different groups on grounds of religion, race, place of birth, residence, language, etc., and doing acts prejudicial to maintenance of harmony.— (1) Whoever— (a) by words, either spoken or written, or by signs or by visible representations or otherwise, promotes or attempts to promote, on grounds of religion, race, place of birth, residence, language, caste or community or any other ground whatsoever, disharmony or feelings of enmity, hatred or ill-will between different religious, racial, language or regional groups or castes or communities…” or 2) Whoever commits an offence specified in sub-section (1) in any place of worship or in any assembly engaged in the performance of religious worship or religious ceremonies, shall be punished with imprisonment which may extend to five years and shall also be liable to fine.

[3] Section 34. Acts done by several persons in furtherance of common intention – When a criminal act is done by several persons in furtherance of common intention of all, each of such persons is liable for that act in the same manner as if it were done by him alone.

[4] Section 67. Publishing of information which is obscene in electronic form. – Whoever publishes or transmits or causes to be published in the electronic form, any material which is lascivious or appeals to the prurient interest or if its effect is such as to tend to deprave and corrupt persons who are likely, having regard to all relevant circumstances, to read, see or hear the matter contained or embodied in it, shall be punished on first conviction with imprisonment of either description for a term which may extend to five years and with fine which may extend to one lakh rupees and in the event of a second or subsequent conviction with imprisonment of either description for a term which may extend to ten years and also with fine which may extend to two lakh rupees.

[5] https://www.whatsapp.com/faq/en/general/21073373

[6] Pandurang v. State of Hyderabad AIR 1955 SC 216

[7] https://www.eff.org/files/2015/07/08/manila_principles_background_paper.pdf ; http://unesdoc.unesco.org/images/0023/002311/231162e.pdf

[8] http://www.oecd.org/internet/ieconomy/44949023.pdf

[9] Rule 3(2) (b) of the Rules

[10] http://www.thehindu.com/news/national/other-states/if-you-are-a-whatsapp-group-admin-better-be-careful/article7531350.ece ; http://www.newindianexpress.com/states/tamil_nadu/Social-Media-Administrator-You-Could-Land-in-Trouble/2015/10/10/article3071815.ece ; http://www.medianama.com/2015/10/223-whatsapp-group-admin-arrest/ ; http://www.thenewsminute.com/article/whatsapp-group-admin-you-are-intermediary-and-here%E2%80%99s-what-you-need-know-35031

[11] https://www.whatsapp.com/legal/

[12] http://supremecourtofindia.nic.in/FileServer/2015-03-24_1427183283.pdf

DNA Research

The Centre for Internet and Society, India has been researching privacy in India since the year 2010, with special focus on the following issues related to the DNA Bill:

- Validity and legality of collection, usage and storage of DNA samples and information derived from the same.

- Monitoring projects and policies around Human DNA Profiling.

- Raising public awareness around issues concerning biometrics.

In 2006, the Department of Biotechnology drafted the Human DNA Profiling Bill. In 2012 a revised Bill was released and a group of Experts was constituted to finalize the Bill. In 2014, another version was released, the approval of which is pending before the Parliament.

The Bill seeks to establish DNA Databases at the state and regional level and a national level database. The databases would store DNA profiles of suspects, offenders, missing persons, and deceased persons. The database could be used by courts, law enforcement (national and international) agencies, and other authorized persons for criminal and civil purposes. The Bill will also regulate DNA laboratories collecting DNA samples. Lack of adequate consent, the broad powers of the board, and the deletion of innocent persons' profiles are just a few of the concerns voiced about the Bill.

Download the infographic. Credit: Scott Mason and CIS team.

1. DNA Bill

The Human DNA Profiling Bill is a piece of legislation that will allow the Government of India to create a National DNA Data Bank and a DNA Profiling Board for the purposes of forensic research and analysis. Human rights groups, individuals and NGOs have raised many concerns about the infringement of privacy and the power that the government will have with such information. The bill proposes to profile people through their fingerprints and retinal scans, which allow the government to create unique profiles for individuals. Some of the concerns raised include the loss of privacy through such profiling and the manner in which it is conducted. Unless strictly controlled, monitored and protected, such a database of citizens' fingerprints and retinal scans could lead to serious blowback in the form of security risks and privacy invasions. The following articles elaborate upon these matters.

- Biometrics - An 'Angootha Chaap' Nation

- Re: The Human DNA Profiling Bill, 2012

- Human DNA Profiling Bill 2012 Analysis

- Overview and Concerns Regarding the Indian Draft DNA Act

- India's Biometric Identification Programs and Privacy Concerns

- A Dissent note to the Expert Committee for DNA Profiling

- CIS Comments and Recommendations to the Human DNA Profiling Bill, June 2015

- Concerns regarding DNA Law

- Human DNA Profiling Bill 2012 v/s 2015 Bill

- The scariest Bill in the Parliament is getting no attention - Here's what you need to know about it

- Why the DNA Bill is open to misuse

- Regulation, misuse concerns still dog DNA Profiling Bill

- Genetic profiling - Is it all in the DNA?

- Comparison of the Human DNA Profiling Bill 2012 with - CIS Recommendations, Sub-Committee Recommendations, Expert Committee Recommendations, and the Human DNA Profiling Bill 2015

- Very Big Brother

2. Comparative Analysis with other Legislatures

Human DNA profiling is not proposed only in India. This system of identification has been proposed and implemented in many nations. Each of these systems differs from the others depending on the needs of the nation and its society. The risks and criticisms that DNA profiling has faced may be the same, but the solutions to such issues vary. The following articles look into the different systems in place in different countries and compare them with the proposed system in India to give us a better understanding of the risks and implications of such a system being implemented.

- Comparative Analysis of DNA Profiling Legislations from Across the World

- Comparison of Section 35(1) of the Draft Human DNA Profiling Bill and Section 4 of the Identification Act Revised Statute of Canada

- A Comparison of the Draft DNA Profiling Bill 2007 and the Draft Human DNA Profiling Bill 2012

Privacy Policy Research

- Raising public awareness and dialogue around privacy,

- Undertaking in depth research of domestic and international policy pertaining to privacy

- Driving comprehensive privacy legislation in India through research.

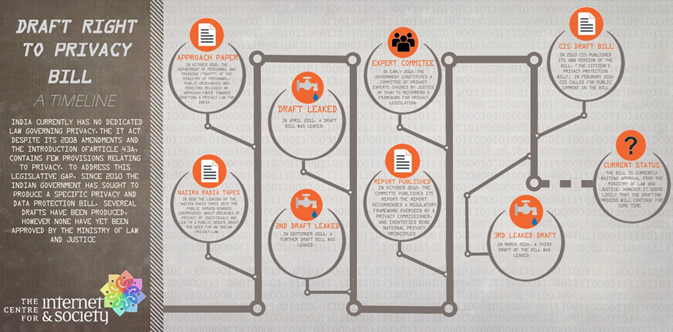

India does not have a comprehensive legislation covering issues of privacy or establishing the right to privacy. In 2010 an "Approach Paper on Privacy" was published; in 2011 the Department of Personnel and Training released a draft Right to Privacy Bill; in 2012 the Planning Commission constituted a group of experts which published The Report of the Group of Experts on Privacy; in 2013 CIS drafted a citizen's Privacy Protection Bill; and in 2014 the Right to Privacy Bill was leaked. Currently the Government is in the process of drafting and finalizing the Bill.

Privacy Research -

1. Approach Paper on Privacy, 2010 -

The following article contains the reply drafted by CIS in response to the Paper on Privacy in 2010. The Paper on Privacy was a document drafted by a group of officers created to develop a framework for a privacy legislation that would balance the need for privacy protection, security, sectoral interests, and respond to the domain legislation on the subject.

- CIS Responds to Privacy Approach Paper http://bit.ly/16dEPB3

2. Report on Privacy, 2012 -

The Report on Privacy, 2012 was drafted and published by a group of experts under the Planning Commission pertaining to the current legislation with respect to privacy. The following articles contain the responses and criticisms to the report and the current legislation.

- The National Cyber Security Policy: Not a Real Policy http://bit.ly/16yLYFq

- Privacy Law Must Fit the Bill http://bit.ly/19DNYjs

3. Privacy Protection Bill, 2013 -

The Privacy Protection Bill, 2013 is a proposed legislation that aims to formulate the rules and laws that govern privacy protection. The following articles refer to this legislation, including a citizen's draft of the legislation.

- The Privacy (Protection) Bill 2013: A Citizen's Draft http://bit.ly/1bXYbL6

- Privacy Protection Bill, 2013 (With Amendments based on Public Feedback) http://bit.ly/1efkgbe

- Privacy (Protection) Bill, 2013: Updated Third Draft http://bit.ly/14WAgI7

- The Privacy Protection Bill, 2013 http://bit.ly/1g3TwIX

- The New Right to Privacy Bill 2011: A Blind Man's View of the Elephant http://bit.ly/17VSgCH

4. Right to Privacy Act, 2014 (Leaked Bill) -

The Right to Privacy Act, 2014 is a bill, still at the proposal stage, that was leaked. The article linked below discusses it.

- Leaked Privacy Bill: 2014 vs. 2011 http://bit.ly/QV0Y0w

Sectoral Privacy Research

- Research on the issue of privacy in different sectors in India.

- Monitoring projects, practices, and policies around those sectors.

- Raising public awareness around the issue of privacy, in light of varied projects, industries, sectors and instances.

The Right to Privacy has evolved in India over many decades, and the question of whether it is a Fundamental Right has been debated many times in courts of law. With the advent of information technology and the digitisation of services, the issue of privacy holds more relevance in sectors like banking, healthcare, telecommunications, ICT, etc. The Right to Privacy is also addressed in the context of sexual minorities, whistle-blowers, government services, etc.

Sectors -

1. Consumer Privacy and other sectors -

Consumer privacy laws and regulations seek to protect any individual from loss of privacy due to failures or limitations of corporate customer privacy measures. The following articles deal with the current consumer privacy laws in place in India and around the world. Privacy concerns are also considered alongside other areas such as copyright law and data protection.

- Consumer Privacy - How to Enforce an Effective Protective Regime? http://bit.ly/1a99P2z

- Privacy and Information Technology Act: Do we have the Safeguards for Electronic Privacy? http://bit.ly/10VJp1P

- Limits to Privacy http://bit.ly/19mPG6I

- Copyright Enforcement and Privacy in India http://bit.ly/18fi9fM

- Privacy in India: Country Report http://bit.ly/14pnNwl

- Transparency and Privacy http://bit.ly/1a9dMnC

- The Report of the Group of Experts on Privacy (Contributed by CIS) http://bit.ly/VqzKtr

- The (In) Visible Subject: Power, Privacy and Social Networking http://bit.ly/15koqol

- Privacy and the Indian Copyright Act, 1857 as Amended in 2010 http://bit.ly/1euwX0r

- Should Ratan Tata be afforded the Right to Privacy? http://bit.ly/LRlXin

- Comments on Information Technology (Guidelines for Cyber Café) Rules, 2011 http://bit.ly/15kojJn

- Broadcasting Standards Authority Censures TV9 over Privacy Violations! http://bit.ly/16L4izl

- Is Data Protection Enough? http://bit.ly/1bvaWx2

- Privacy, speech at stake in cyberspace http://cis-india.org/news/privacy-speech-at-stake-in-cyberspace-1

- Q&A to the Report of the Group of Experts on Privacy http://bit.ly/TPhzQQ

- Privacy worries cloud Facebook's WhatsApp Deal http://cis-india.org/internet-governance/blog/economic-times-march-14-2014-sunil-abraham-privacy-worries-cloud-facebook-whatsapp-deal

- GNI Assessment Finds ICT Companies Protect User Privacy and Freedom of Expression http://bit.ly/1mjbpmL

- A Stolen Perspective http://bit.ly/1bWHyzv

- Is Data Protection enough? http://cis-india.org/internet-governance/blog/privacy/is-data-protection-enough

- I don't want my fingerprints taken http://bit.ly/aYdMia

- Keeping it Private http://bit.ly/15wjTVc

- Personal Data, Public Profile http://bit.ly/15vlFk4

- Why your Facebook Stalker is Not the Real Problem http://bit.ly/1bI2MSc

- The Private Eye http://bit.ly/173ypSI

- How Facebook is Blatantly Abusing our Trust http://bit.ly/OBXGXk

- Open Secrets http://bit.ly/1b5uvK0

- Big Brother is Watching You http://bit.ly/1cGpg0K

2. Banking/Finance -

Privacy in the banking and finance industry is crucial as the records and funds of one person must not be accessible by another without the due authorisation. The following articles deal with the current system in place that governs privacy in the financial and banking industry.

- Privacy and Banking: Do Indian Banking Standards Provide Enough Privacy Protection? http://bit.ly/18fhsTM

- Finance and Privacy http://bit.ly/15aUPh6

- Making the Powerful Accountable http://bit.ly/1nvzSpC

3. Telecommunications -

The telecommunications industry is the backbone of current technology with respect to ICTs. The telecommunications industry has its own rules and regulations. These rules are the focal point of the following articles including criticism and acclaim.

- Privacy and Telecommunications: Do We Have the Safeguards? http://bit.ly/10VJp1P

- Privacy and Media Law http://bit.ly/18fgDfF

- IP Addresses and Expeditious Disclosure of Identity in India http://bit.ly/16dBy4N

- Telecommunications and Internet Privacy http://bit.ly/16dEcaF

- Encryption Standards and Practices http://bit.ly/KT9BTy

- Encryption Standards and Practices http://cis-india.org/internet-governance/blog/privacy/privacy_encryption

- Security: Privacy, Transparency and Technology http://cis-india.org/internet-governance/blog/security-privacy-transparency-and-technology

4. Sexual Minorities -

While the internet is a global forum of self-expression and acceptance for most of us, it does not hold true for sexual minorities. The internet is a place of secrecy for those that do not conform to the typical identities set by society and therefore their privacy is more important to them than most. When they reveal themselves or are revealed by others, they typically face a lot of group hatred from the rest of the people and therefore value their privacy. The following article looks into their situation.

- Privacy and Sexual Minorities http://bit.ly/19mQUyZ

5. Health -

The confidentiality between a doctor and a patient is considered extremely important, and the same should hold in any situation where a person reveals more than they would to others, such as CT scans and other diagnostic procedures. The following articles look into the present state of privacy in places like hospitals and diagnostic centres.

- Health and Privacy http://bit.ly/16L1AJX

- Privacy Concerns in Whole Body Imaging: A Few Questions http://bit.ly/1jmvH1z

6. e-Governance -

The main focus of governments in ICTs is their use for governance. A multiplicity of laws and legislation has been passed by various countries, including India, in an effort to govern the universal space that is the internet. Surveillance is a major part of that governance and control. The articles listed below deal with the issues of ethics and drawbacks in the current legal scenario involving ICTs.

- E-Governance and Privacy http://bit.ly/18fiReX

- Privacy and Governmental Databases http://bit.ly/18fmSy8

- Killing Internet Softly with its Rules http://bit.ly/1b5I7Z2

- Cyber Crime & Privacy http://bit.ly/17VTluv

- Understanding the Right to Information http://bit.ly/1hojKr7

- Privacy Perspectives on the 2012-2013 Goa Beach Shack Policy http://bit.ly/ThAovQ

- Identifying Aspects of Privacy in Islamic Law http://cis-india.org/internet-governance/blog/identifying-aspects-of-privacy-in-islamic-law

- What Does Facebook's Transparency Report Tell Us About the Indian Government's Record on Free Expression & Privacy? http://cis-india.org/internet-governance/blog/what-does-facebook-transparency-report-tell-us-about-indian-government-record-on-free-expression-and-privacy

- Search and Seizure and the Right to Privacy in the Digital Age: A Comparison of US and India http://cis-india.org/internet-governance/blog/search-and-seizure-and-right-to-privacy-in-digital-age

- Internet Privacy in India http://cis-india.org/telecom/knowledge-repository-on-internet-access/internet-privacy-in-india

- Internet-driven Developments - Structural Changes and Tipping Points http://bit.ly/10s8HVH

- Data Retention in India http://bit.ly/XR791u

- 2012: Privacy Highlights in India http://bit.ly/1kWe3n7

- Big Dog is Watching You! The Sci-fi Future of Animal and Insect Drones http://bit.ly/1kWee1W

- Privacy Law in India: A Muddled Field - I http://cis-india.org/internet-governance/blog/the-hoot-bhairav-acharya-april-15-2014-privacy-law-in-india-a-muddled-field-1

- The Four Parts of Privacy in India http://cis-india.org/internet-governance/blog/economic-and-political-weekly-bhairav-acharya-may-30-2015-four-parts-of-privacy-in-india

- Right to Privacy in Peril http://cis-india.org/internet-governance/blog/right-to-privacy-in-peril

- Microsoft Releases its First Report on Data Requests by Law Enforcement Agencies around the World http://bit.ly/1kWjylM

- The Criminal Law Amendment Bill 2013 - Penalising 'Peeping Toms' and Other Privacy Issues http://bit.ly/1dO46o5

- Privacy vs. Transparency: An Attempt at Resolving the Dichotomy http://cis-india.org/openness/blog-old/privacy-v-transparency

- Open Letter to "Not" Recognize India as Data Secure Nation till Enactment of Privacy Legislation http://bit.ly/1sJME9j

- Open Letter to Prevent the Installation of RFID tags in Vehicles http://bit.ly/1hxidzU

- The National Privacy Roundtable Meetings http://bit.ly/158ayNW

- Transparency Reports - A Glance on What Google and Facebook Tell about Government Data Requests http://bit.ly/19NYTal

- CIS and International Coalition Calls upon Governments to Protect Privacy http://bit.ly/18oOTDk

- An Analysis of the Cases Filed under Section 46 of the Information Technology Act, 2000 for Adjudication in the State of Maharashtra http://bit.ly/16dKyoo

- Open Letter to Members of the European Parliament of the Civil Liberties, Justice and Home Affairs Committee http://bit.ly/17eZntz

- CIS Supports the UN Resolution on "The Right to Privacy in the Digital age" http://bit.ly/1c2A89q

- Brochures from Expos on Smart Cards, e-Security, RFID & Biometrics in India http://bit.ly/1f714fN

- Electoral Databases - Privacy and Security Concerns http://bit.ly/Mb4ktM

- Net Neutrality and Privacy http://bit.ly/1khi1GQ

- Intermediary Liability Resources http://bit.ly/1hRT8OD

- Feedback to the NIA Bill http://bit.ly/1ePhUeg

- India's Identity Crisis http://bit.ly/1lTRuuz

- Facebook, Privacy, and India http://bit.ly/a2HzhT

- Private censorship and the Right to Hear http://cis-india.org/internet-governance/blog/the-hoot-july-17-2014-chinmayi-arun-private-censorship-and-the-right-to-hear

- Your Privacy is Public Property (Rules issued by a control-obsessed government have armed officials with widespread powers to pry into your private life.) http://cis-india.org/news/privacy-public-property

- The India Privacy Monitor Map http://bit.ly/19A5mCZ

- Privacy and Security can Co-Exist http://bit.ly/193fPXi

- A Street View of the Private and The Public http://bit.ly/15VKmdf

- Sense and Censorship http://bit.ly/14KFwyo

- Government access to private sector data http://bit.ly/18rjd1X

- India: Privacy in Peril http://bit.ly/1g5QbZj

- Big Democracy, Big Surveillance: India's Surveillance State http://bit.ly/1nkg8Ho

- Who Governs the Internet? Implications for Freedom and National Security http://bit.ly/1hnnJ2a

7. Whistle-blowers -

Whistle-blowers are always in a difficult situation when they must reveal the misdeeds of their corporations and governments, due to the blowback that is possible if their identity is revealed to the public. As in the case of Edward Snowden and many others, a whistle-blower's identity must be kept strictly private so that they can avoid the consequences of having revealed the information. This is the main focus of the article below.

- The Privacy Rights of Whistle-blowers http://bit.ly/18GWmM3

8. Cloud and Open Source -

Cloud computing and open source software have grown rapidly over the past few decades. Cloud computing is when an individual or company uses offsite hardware, provided and owned by someone else, on a pay-per-use basis. The advantages are low costs and easy access, along with decreased initial costs. Open source software, on the other hand, is software that is available to the public at no charge, despite the existence of proprietary elements and innovation. Such software is based on open standards and has the obvious advantages of being free and compatible with many different setups. The following article highlights these computing solutions.

- Privacy, Free/Open Source, and the Cloud http://bit.ly/1cTmGoI

9. e-Commerce -

One of the fastest growing applications of the internet is e-Commerce. This includes many facets of commerce such as online trading, the stock exchange, etc. In these cases, just as in the financial and banking industries, privacy is very important to protect one's investments and capital. The following article focuses on the world of e-Commerce and its current privacy scenario.

- Consumer Privacy in e-Commerce http://bit.ly/1dCtgTs

Security Research

- Research on the issue of privacy in different sectors in India.

- Monitoring projects, practices, and policies around those sectors.

- Raising public awareness around the issue of privacy, in light of varied projects, industries, sectors and instances.

State surveillance in India has been carried out by Government agencies for many years. Recent projects include: NATGRID, CMS, NETRA, etc. which aim to overhaul the overall security and intelligence infrastructure in the country. The purpose of such initiatives has been to maintain national security and ensure interconnectivity and interoperability between departments and agencies. Concerns regarding the structure, regulatory frameworks (or lack thereof), and technologies used in these programmes and projects have attracted criticism.

Surveillance/Security Research -

1. Central Monitoring System -

The Central Monitoring System or CMS is a clandestine mass electronic surveillance and data mining program installed by the Centre for Development of Telematics (C-DOT), a part of the Indian government. It gives law enforcement agencies centralized access to India's telecommunications network and the ability to listen in on and record mobile, landline, satellite, and Voice over Internet Protocol (VoIP) calls, along with private e-mails, SMS, and MMS. It also gives them the ability to geo-locate individuals via cell phones in real time.

- The Central Monitoring System: Some Questions to be Raised in Parliament http://bit.ly/1fln2vu

- India's 'Big Brother': The Central Monitoring System (CMS) http://bit.ly/1kyyzKB

- India's Central Monitoring System (CMS): Something to Worry About? http://bit.ly/1gsM4oQ

- C-DoT's surveillance system making enemies on internet http://cis-india.org/news/dna-march-21-2014-krishna-bahirwani-c-dots-surveillance-system-making-enemies-on-internet

2. Surveillance Industry : Global And Domestic -

The surveillance industry is a multi-billion dollar economic sector that tracks individuals along with their actions, such as e-mails and texts. With terrorism and the government's attempts to fight it cited as the reason for its existence, a network has been created that leaves no one with their privacy. All that an individual does in the digital world is subject to surveillance. This includes surveillance in the form of snooping, where an individual's phone calls, text messages and e-mails are monitored, and a more active kind where cameras, sensors and other devices are used to track the movements and actions of an individual. This information allows governments to bypass the privacy that an individual has in a manner that is considered unethical and incorrect. The information that is collected is also vulnerable to cyber-attacks, which pose serious risks to privacy and to the individuals themselves. The following set of articles looks into the ethics, risks, vulnerabilities and trade-offs of having a mass surveillance industry in place.

- Surveillance Technologies http://bit.ly/14pxg74

- New Standard Operating Procedures for Lawful Interception and Monitoring http://bit.ly/1mRRIo4

- Video Surveillance and Its Impact on the Right to Privacy http://cis-india.org/internet-governance/blog/privacy/video-surveillance-privacy

- More than a Hundred Global Groups Make a Principled Stand against Surveillance http://cis-india.org/internet-governance/blog/more-than-hundred-global-groups-make-principled-stand-against-surveillance

- Models for Surveillance and Interception of Communications Worldwide http://cis-india.org/internet-governance/blog/models-for-surveillance-and-interception-of-communications-worldwide

- Why 'Facebook' is More Dangerous than the Government Spying on You http://cis-india.org/internet-governance/blog/why-facebook-is-more-dangerous-than-the-government-spying-on-you

- The Difficult Balance of Transparent Surveillance http://cis-india.org/internet-governance/blog/the-difficult-balance-of-transparent-surveillance

- UK's Interception of Communications Commissioner - A Model of Accountability http://cis-india.org/internet-governance/blog/uk-interception-of-communications-commissioner-a-model-of-accountability

- Search and Seizure and the Right to Privacy in the Digital Age: A Comparison of US and India http://cis-india.org/internet-governance/blog/search-and-seizure-and-right-to-privacy-in-digital-age

- State Surveillance and Human Rights Camp: Summary http://bit.ly/ZZNm6M

- India Subject to NSA Dragnet Surveillance! No Longer a Hypothesis - It is Now Officially Confirmed http://bit.ly/1eqtD8g

- Spy Files 3: WikiLeaks Sheds More Light on the Global Surveillance Industry http://bit.ly/1d6EmjD

- Surveillance Camp IV: Disproportionate State Surveillance - A Violation of Privacy http://bit.ly/1ilTJts

- Hacking without borders: The future of artificial intelligence and surveillance http://bit.ly/1kWiwGv

- Driving in the Surveillance Society: Cameras, RFID tags and Black Boxes http://bit.ly/1mr3KTH

- Policy Brief: Oversight Mechanisms for Surveillance http://cis-india.org/internet-governance/blog/policy-brief-oversight-mechanisms-for-surveillance

3. Judgements By the Indian Courts -

The surveillance industry in India has been brought before the court in different cases. The following articles look into the cause of action in these cases along with their impact on India and its citizens.

- Anvar v. Basheer and the New (Old) Law of Electronic Evidence http://cis-india.org/internet-governance/blog/anvar-v-basheer-new-old-law-of-electronic-evidence

- The Gujarat High Court Judgement on the Snoopgate Issue http://cis-india.org/internet-governance/blog/gujarat-high-court-judgment-on-snoopgate-issue

4. International Privacy Laws -

Due to the universality of the internet, many questions of accountability arise and jurisdiction becomes a problem. Therefore certain treaties, agreements and other international legal instruments were created to answer these questions. The articles listed below look into the international legal framework which governs the internet.

- Learning to Forget the ECJ's Decision on the Right to be Forgotten and its Implications http://cis-india.org/internet-governance/blog/learning-to-forget-ecj-decision-on-the-right-to-be-forgotten-and-its-implications

- Privacy and Security Can Co-exist http://cis-india.org/internet-governance/blog/privacy-and-security

- European Union Draft Report Admonishes Mass Surveillance, Calls for Stricter Data Protection and Privacy Laws http://cis-india.org/internet-governance/blog/european-union-draft-report-admonishes-mass-surveillance

- Draft International Principles on Communications Surveillance and Human Rights http://bit.ly/XCsk9b

5. Indian Surveillance Framework -

The Indian government's mass surveillance systems are configured a little differently from the networks of many countries such as the USA and the UK. This is because of the vast difference in infrastructure, both in what exists and in what is required. In many ways, the surveillance network in India is considered far worse than that of other countries. This is due to the present form of the legal system. The articles below explore the system and its functioning, including the various methods through which we are spied on. The ethics and vulnerabilities are also explored in these articles.

- Paper-thin Safeguards and Mass Surveillance in India http://cis-india.org/internet-governance/blog/paper-thin-safeguards-and-mass-surveillance-in-india

- The Surveillance Industry in India: At Least 76 Companies Aiding Our Watchers! http://cis-india.org/internet-governance/blog/the-surveillance-industry-in-india-at-least-76-companies-aiding-our-watchers

- The Surveillance Industry in India - An Analysis of Indian Security Expos http://cis-india.org/internet-governance/blog/surveillance-industry-in-india-analysis-of-indian-security-expos

- GSMA Research Outputs: different legal and regulatory aspects of security and surveillance in India http://cis-india.org/internet-governance/blog/gsma-research-outputs

- Way to watch http://cis-india.org/internet-governance/blog/indian-express-june-26-2013-chinmayi-arun-way-to-watch

- Free Speech and Surveillance http://cis-india.org/internet-governance/blog/free-speech-and-surveillance

- Surveillance rises, privacy retreats http://cis-india.org/internet-governance/news/business-standard-namrata-acharya-april-12-2015-surveillance-rises-privacy-retreats

- Freedom from Monitoring: India Inc. should Push For Privacy Laws http://cis-india.org/internet-governance/blog/forbesindia-article-august-21-2013-sunil-abraham-freedom-from-monitoring

- Surat's Massive Surveillance Network Should Cause Concern, Not Celebration http://cis-india.org/internet-governance/blog/surat-massive-surveillance-network-cause-of-concern-not-celebration

- Vodafone Report Explains Government Access to Customer Data http://cis-india.org/internet-governance/blog/vodafone-report-explains-govt-access-to-customer-data

- A Review of the Functioning of the Cyber Appellate Tribunal and Adjudicator officers under the IT Act http://cis-india.org/internet-governance/blog/review-of-functioning-of-cyber-appellate-tribunal-and-adjudicatory-officers-under-it-act

- A Comparison of Indian Legislation to Draft International Principles on Surveillance of Communications http://bit.ly/U6T3xy

- SEBI and Communication Surveillance: New Rules, New Responsibilities? http://bit.ly/1eqtD8g

- Snooping Can Lead to Data Abuse http://cis-india.org/internet-governance/blog/snooping-to-data-abuse

- Big Brother is Watching You http://bit.ly/1arbxwm

- Moving Towards a Surveillance State http://cis-india.org/internet-governance/blog/moving-towards-surveillance-state

- How Surveillance Works in India http://cis-india.org/internet-governance/blog/nytimes-july-10-2013-pranesh-prakash-how-surveillance-works-in-india

- Big Democracy, Big Surveillance: India's Surveillance State http://bit.ly/1nkg8Ho

- Can India Trust Its Government on Privacy? http://cis-india.org/internet-governance/blog/new-york-times-july-11-2013-can-india-trust-its-government-on-piracy

- Indian surveillance laws & practices far worse than US http://cis-india.org/internet-governance/blog/economic-times-june-13-2013-pranesh-prakash-indian-surveillance-laws-and-practices-far-worse-than-us

- Security, Surveillance and Data Sharing Schemes and Bodies in India http://cis-india.org/internet-governance/blog/security-surveillance-and-data-sharing.pdf/view

- Policy Paper on Surveillance in India http://cis-india.org/internet-governance/blog/policy-paper-on-surveillance-in-india http://cis-india.org/internet-governance/blog/security-privacy-transparency-and-technology

- The Constitutionality of Indian Surveillance Law: Public Emergency as a Condition Precedent for Intercepting Communications http://cis-india.org/internet-governance/blog/the-constitutionality-of-indian-surveillance-law

- Surveillance and the Indian Constitution - Part 1: Foundations http://bit.ly/1ntqsen

- Surveillance and the Indian Constitution - Part 2: Gobind and the Compelling State Interest Test http://bit.ly/1dH3meL

- Surveillance and the Indian Constitution - Part 3: The Public/Private Distinction and the Supreme Court's Wrong Turn http://bit.ly/1kBosnw

- Mastering the Art of Keeping Indians Under Surveillance http://cis-india.org/internet-governance/blog/the-wire-may-30-2015-bhairav-acharya-mastering-the-art-of-keeping-indians-under-surveillance

UID Research

- Researching the vision and implementation of the UID Scheme - both from a technical and regulatory perspective.

- Understanding the validity and legality of collection, usage and storage of Biometric information for this scheme.

- Raising public awareness around issues concerning privacy, data security and the objectives of the UID Scheme.

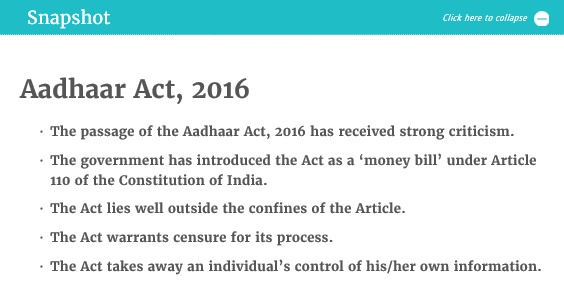

The UID scheme seeks to provide all residents of India an identity number based on their biometrics that can be used to authenticate individuals for the purpose of Government benefits and services. A 2015 Supreme Court ruling has clarified that the UID can only be used in the PDS and LPG Schemes.

Concerns with the scheme include the broad consent taken at the time of enrolment, the lack of clarity as to what happens with transactional metadata, the centralized storage of the biometric information in the CIDR, the seeding of the Aadhaar number into service providers’ databases, and the possibility of function creep. There are also concerns due to the absence of legislation to address the privacy and security issues.

UID Research -

1. Ramifications of Aadhaar and UID schemes -

The UID and Aadhaar systems have been bombarded with criticisms and plagued with issues ranging from privacy concerns to security risks. The following articles deal with the many problems and drawbacks of these systems.

§ UID and NPR: Towards Common Ground http://cis-india.org/internet-governance/blog/uid-npr-towards-common-ground

§ Public Statement to Final Draft of UID Bill http://bit.ly/1aGf1NN

§ UID Project in India - Some Possible Ramifications http://cis-india.org/internet-governance/blog/uid-in-india

§ Aadhaar Number vs the Social Security Number http://cis-india.org/internet-governance/blog/aadhaar-vs-social-security-number

§ Feedback to the NIA Bill http://cis-india.org/internet-governance/blog/cis-feedback-to-nia-bill

§ Unique ID System: Pros and Cons http://bit.ly/1jmxbZS

§ Submitted seven open letters to the Parliamentary Finance Committee on the UID covering the following aspects: SCOSTA Standards (http://bit.ly/1hq5Rqd), Centralized Database (http://bit.ly/1hsHJDg), Biometrics (http://bit.ly/196drke), UID Budget (http://bit.ly/1e4c2Op), Operational Design (http://bit.ly/JXR61S), UID and Transactions (http://bit.ly/1gY6B8r), and Deduplication (http://bit.ly/1c9TkSg)

§ Comments on Finance Committee Statements to Open Letters on Unique Identity: The Parliamentary Finance Committee responded to the open letters sent by CIS through an email on 12 October 2011. CIS has commented on the points raised by the Committee: http://bit.ly/1kz4H0F

§ Unique Identification Scheme (UID) & National Population Register (NPR), and Governance http://cis-india.org/internet-governance/blog/uid-and-npr-a-background-note

§ Financial Inclusion and the UID http://cis-india.org/internet-governance/privacy_uidfinancialinclusion

§ The Aadhaar Case http://cis-india.org/internet-governance/blog/the-aadhaar-case

§ Do we need the Aadhaar scheme http://bit.ly/1850wAz

§ 4 Popular Myths about UID http://bit.ly/1bWFoQg

§ Does the UID Reflect India? http://cis-india.org/internet-governance/blog/privacy/uid-reflects-india

§ Would it be a unique identity crisis? http://cis-india.org/news/unique-identity-crisis

§ UID: Nothing to Hide, Nothing to Fear? http://cis-india.org/internet-governance/blog/privacy/uid-nothing-to-hide-fear

2. Right to Privacy and UID -

The UID system has been hit by many privacy concerns from NGOs, private individuals and others. The sharing of one's information, especially fingerprints and retinal scans, with a system that is controlled by the government and is not vetted as having good security irks most people. These issues are dealt with in the following articles.

§ India Fears of Privacy Loss Pursue Ambitious ID Project http://cis-india.org/news/india-fears-of-privacy-loss

§ Analysing the Right to Privacy and Dignity with Respect to the UID http://bit.ly/1bWFoQg

§ Analysing the Right to Privacy and Dignity with Respect to the UID http://cis-india.org/internet-governance/blog/privacy/privacy-uiddevaprasad

§ Supreme Court order is a good start, but is seeding necessary? http://cis-india.org/internet-governance/blog/supreme-court-order-is-a-good-start-but-is-seeding-necessary

§ Right to Privacy in Peril http://cis-india.org/internet-governance/blog/right-to-privacy-in-peril

3. Data Flow in the UID -

The articles below deal with the manner in which data is moved around and handled in the UID system in India.

§ UIDAI Practices and the Information Technology Act, Section 43A and Subsequent Rules http://cis-india.org/internet-governance/blog/uid-practices-and-it-act-sec-43-a-and-subsequent-rules

§ Data flow in the Unique Identification Scheme of India http://cis-india.org/internet-governance/blog/data-flow-in-unique-identification-scheme-of-india

CIS's Position on Net Neutrality

- Net Neutrality violations can potentially have multiple categories of harms — competition harms, free speech harms, privacy harms, innovation and ‘generativity’ harms, harms to consumer choice and user freedoms, and diversity harms thanks to unjust discrimination and gatekeeping by Internet service providers.

- Net Neutrality violations (including some forms of zero-rating that violate net neutrality) can also have different kinds of benefits: enabling the right to freedom of expression, and the freedom of association, especially when access to communication and publishing technologies is increased; increased competition [by enabling product differentiation, which can potentially allow small ISPs to compete against market incumbents]; increased access [usually to a subset of the Internet] by those without any access because they cannot afford it; increased access [usually to a subset of the Internet] by those who don't see any value in the Internet; and reduced payments by those who already have access to the Internet, especially if their usage is dominated by certain services and destinations.

- Given the magnitude and variety of potential harms, complete forbearance from all regulation is not an option for regulators nor is self-regulation sufficient to address all the harms emerging from Net Neutrality violations, since incumbent telecom companies cannot be trusted to effectively self-regulate. Therefore, CIS calls for the immediate formulation of Net Neutrality regulation by the telecom regulator [TRAI] and the notification thereof by the government [Department of Telecom of the Ministry of Information and Communication Technology]. CIS also calls for the eventual enactment of statutory law on Net Neutrality. All such policy must be developed in a transparent fashion after proper consultation with all relevant stakeholders, and after giving citizens an opportunity to comment on draft regulations.

- Even though some of these harms may be large, CIS believes that a government cannot apply the precautionary principle in the case of Net Neutrality violations. Banning technical innovations and business model innovations is not an appropriate policy option. The regulation must toe a careful line to solve the optimization problem: refraining from over-regulation of ISPs and harming innovation at the carrier level (and benefits of net neutrality violations mentioned above) while preventing ISPs from harming innovation and user choice. ISPs must be regulated to limit harms from unjust discrimination towards consumers as well as to limit harms from unjust discrimination towards the services they carry on their networks.

- Based on regulatory theory, we believe that a regulatory framework that is technologically neutral, that factors in differences in technological context, as well as market realities and existing regulation, and which is able to respond to new evidence is what is ideal.

This means that we need a framework that has some bright-line rules, but which allows for flexibility in determining the scope of exceptions and in the application of the rules. Candidate principles to be embodied in the regulation include: transparency, non-exclusivity, limiting unjust discrimination.

- The harms emerging from walled gardens can be mitigated in a number of ways. On zero-rating, the form of regulation must depend on the specific model and the potential harms that result from that model. Zero-rating can be: paid for by the end consumer or subsidized by ISPs or subsidized by content providers or subsidized by government or a combination of these; deal-based or criteria-based or government-imposed; ISP-imposed or offered by the ISP and chosen by consumers; transparent and understood by consumers or non-transparent; based on content-type or agnostic to content-type; service-specific or service-class/protocol-specific or service-agnostic; available on one ISP or on all ISPs. Zero-rating by a small ISP with 2% penetration will not have the same harms as zero-rating by the largest incumbent ISP. For service-agnostic / content-type agnostic zero-rating, which Mozilla terms ‘equal rating’, CIS advocates for no regulation.

- CIS believes that Net Neutrality regulation for mobile and fixed-line access must be different, recognizing the fundamental differences in technologies.

- On specialized services, CIS believes that there should be logical separation and that all details of such specialized services and their impact on the Internet must be made transparent to consumers (both individual and institutional), the general public, and the regulator. Further, such services should be available to the user only upon request, and not without their active choice, with the requirement that the service cannot be reasonably provided with the ‘best efforts’ delivery guarantee that is available over the Internet, and hence requires discriminatory treatment, or that the discriminatory treatment does not unduly harm the provision of the rest of the Internet to other customers.

- On incentives for telecom operators, CIS believes that the government should consider different models such as waiving contribution to the Universal Service Obligation Fund for prepaid consumers, and freeing up additional spectrum for telecom use without royalty using a shared spectrum paradigm, as well as freeing up more spectrum for use without a licence.

- On reasonable network management CIS still does not have a common institutional position.
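The dimensions of zero-rating listed in the position above can be read as a small classification scheme. The following is only an illustrative sketch, not part of CIS's position: the class, field, and function names are hypothetical, and the check treats 'equal rating' as requiring a plan to be both service-agnostic and content-type agnostic, which is one possible reading of the text.

```python
from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    """How narrowly a zero-rating plan targets traffic (names are illustrative)."""
    SERVICE_SPECIFIC = "service-specific"
    SERVICE_CLASS = "service-class/protocol-specific"
    SERVICE_AGNOSTIC = "service-agnostic"


@dataclass(frozen=True)
class ZeroRatingPlan:
    # Each field mirrors one axis from the list in the position statement.
    paid_by: frozenset            # any of "consumer", "isp", "content_provider", "government"
    basis: str                    # "deal", "criteria" or "government-imposed"
    chosen_by_consumer: bool      # False means the plan is ISP-imposed
    transparent: bool             # understood by consumers or not
    content_type_agnostic: bool   # False means based on content type
    scope: Scope
    available_on_all_isps: bool
    isp_penetration: float        # ISP's share of subscribers, between 0.0 and 1.0


def is_equal_rating(plan: ZeroRatingPlan) -> bool:
    """Assumed reading: 'equal rating' needs both kinds of agnosticism."""
    return plan.scope is Scope.SERVICE_AGNOSTIC and plan.content_type_agnostic


# Two hypothetical plans, for illustration only.
data_rebate = ZeroRatingPlan(
    paid_by=frozenset({"isp"}), basis="criteria", chosen_by_consumer=True,
    transparent=True, content_type_agnostic=True,
    scope=Scope.SERVICE_AGNOSTIC, available_on_all_isps=False,
    isp_penetration=0.02,
)
single_app_deal = ZeroRatingPlan(
    paid_by=frozenset({"content_provider"}), basis="deal", chosen_by_consumer=False,
    transparent=False, content_type_agnostic=False,
    scope=Scope.SERVICE_SPECIFIC, available_on_all_isps=False,
    isp_penetration=0.30,
)
```

Under this reading, only plans for which `is_equal_rating` returns true would fall in the category where CIS advocates no regulation; every other combination would need to be assessed against the specific model and its potential harms.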

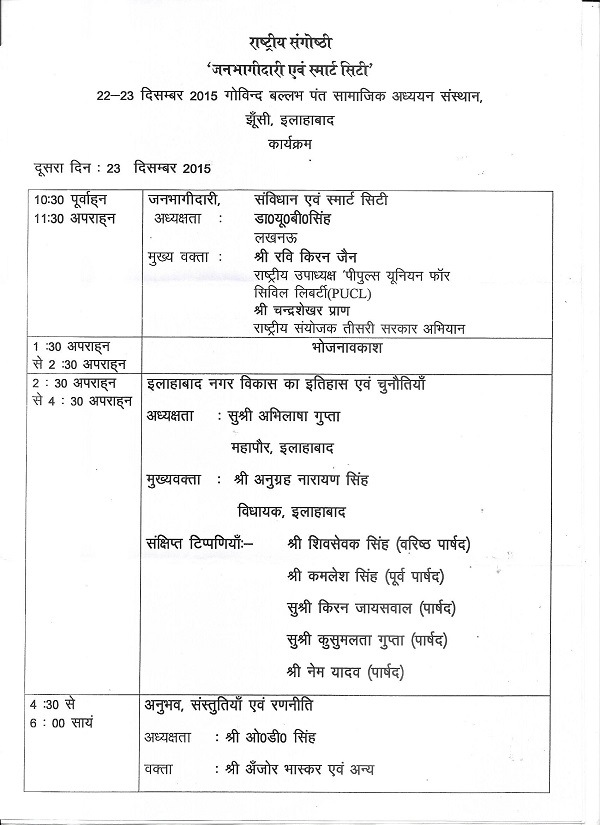

Smart Cities in India: An Overview

Overview of the 100 Smart Cities Mission

The Government of India announced its flagship programme, the 100 Smart Cities Mission, in 2014 and launched it in June 2015 to achieve urban transformation, drive economic growth and improve the quality of life of people by enabling local area development and harnessing technology. Initially, the Mission aims to cover 100 cities across the country (which have been shortlisted on the basis of a Smart Cities Proposal prepared by every city) and its duration will be five years (FY 2015-16 to FY 2019-20). The Mission may be continued thereafter in the light of an evaluation to be done by the Ministry of Urban Development (MoUD) and incorporation of the learnings into the Mission. The Mission aims to focus on area-based development in the form of redevelopment of existing spaces, or the development of new areas (Greenfield) to accommodate the growing urban population and ensure comprehensive planning to improve quality of life, create employment and enhance incomes for all, especially the poor and the disadvantaged. [1] On 27th August 2015 the Centre unveiled 98 smart cities across India which were selected for this Project. Across the selected cities, a population of 13 crore (35% of the urban population) will be included in the development plans. [2] The mission has been developed for the purpose of achieving urban transformation. The vision is to preserve India's traditional architecture, culture & ethnicity while implementing modern technology to make cities livable, use resources in a sustainable manner and create an inclusive environment. [3]

The promises of the Smart City mission include reduction of carbon footprint, adequate water and electricity supply, proper sanitation, including solid waste management, efficient urban mobility and public transport, affordable housing, robust IT connectivity and digitalization, good governance, citizen participation, security of citizens, health and education.

Questions unanswered

- Why and How was the Smart Cities project conceptualized in India? What was the need for such a project in India?

- What was the role of the public/citizens at the ideation and conceptualization stage of the project?

- Which actors from the Government, Private industry and the civil society are involved in this mission? Though the smart cities mission has been initiated by the Government of India under the Ministry of Urban Development, there is no clarity about the involvement of the associated offices and departments of the Ministry.

How are the Smart Cities being selected?

The 100 cities were to be selected on the basis of the Smart Cities Challenge[4], which involves two stages. Stage I of the challenge involved intra-State city selection on objective criteria to identify cities to compete in Stage II. In August 2015, the Ministry of Urban Development, Government of India announced 100 smart cities [5], called the 'shortlisted cities', evaluated on parameters such as service levels, financial and institutional capacity, and past track record. The selected cities are now competing for selection in the second stage of the challenge, which is an all-India competition. For this crucial stage, the potential 100 smart cities are required to prepare a Smart City Proposal (SCP) stating the model chosen (retrofitting, redevelopment, Greenfield development or a mix), along with a Pan-City dimension with Smart Solutions. The proposal must also include suggestions collected by way of consultations held with city residents and other stakeholders, along with the proposal for financing of the smart city plan, including the revenue model to attract private participation. The country saw wide participation from citizens voicing their aspirations and concerns regarding the smart city. 15th December 2015 has been declared the deadline for submission of the SCP, which must be in consonance with the evaluation criteria set by the MoUD on the basis of professional advice. [6] On the basis of this, 20 cities will be selected for the first year. According to the latest reports, the Centre is planning to fund only 10 cities in the first phase in case the proposals sent by the states do not match the expected quality standards and the states are unable to submit complete area-development plans by the deadline, i.e. 15th December, 2015. [7]

Questions unanswered

- Who would be undertaking the task of evaluating and selecting the cities for this project?

- What are the criteria for selection of a city to qualify in the first 20 (or 10, depending on the Central Government) for the first phase of implementation?

How are the smart cities going to be Funded?

The Smart City Mission will be operated as a Centrally Sponsored Scheme (CSS) and the Central Government proposes to give financial support to the Mission to the extent of Rs. 48,000 crore over five years, i.e. on average Rs. 100 crore per city per year. [8] The additional resources will have to be mobilized by the State/ULBs from external/internal sources. According to the scheme, once the list of shortlisted Smart Cities is finalized, Rs. 2 crore would be disbursed to each city for proposal preparation.[9]

According to estimates of the Central Government, around Rs 4 lakh crore of funds will be infused mainly through private investments and loans from multilateral institutions, among other sources, which accounts for 80% of the total spending on the mission. [10] For this purpose, the Government will approach the World Bank and the Asian Development Bank (ADB) for loans of £500 million and £1 billion for 2015-20. If ADB approves the loan, it will be the bank's highest funding to India's urban sector so far.[11] Foreign Direct Investment regulations have been relaxed to invite foreign capital and assistance into the Smart City Mission. [12]
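The funding figures quoted above can be cross-checked with simple arithmetic. The sketch below is illustrative only: the variable names are hypothetical, and it assumes that all 100 cities are funded for all five years and that the 80% share applies to a single total. Under those assumptions, the central support works out to Rs. 96 crore per city per year, which suggests the Rs. 100 crore figure is a rounded average.

```python
# All amounts are in crore rupees (1 lakh crore = 100,000 crore).
central_support_crore = 48_000   # central support over five years
cities, years = 100, 5           # assumed: every city funded in every year

# Average central support per city per year.
per_city_per_year_crore = central_support_crore / (cities * years)
print(per_city_per_year_crore)   # 96.0

# Rs 4 lakh crore is described as 80% of total spending on the mission.
external_crore = 4 * 100_000
external_share_pct = 80

# Total spending implied by those two figures, using integer arithmetic.
implied_total_crore = external_crore * 100 // external_share_pct
remaining_crore = implied_total_crore - external_crore
print(implied_total_crore, remaining_crore)   # 500000 100000
```

The implied total of Rs 5 lakh crore and the remaining Rs 1 lakh crore follow only from the two quoted figures; they are not stated in the cited sources.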

Questions unanswered

- The Government's notes on financing of the project mention PPPs for private funding and leveraging of resources from internal and external sources. There is a lack of clarity on the external sources the Government has approached or will approach, and on the varied PPP agreements the Government has entered or plans to enter into for the purpose of private investment in the smart cities.

How is the scheme being implemented?

Under this scheme, each city is required to establish a Special Purpose Vehicle (SPV) having flexibility regarding planning, implementation, management and operations. The body will be headed by a full-time CEO, with nominees of Central Government, State Government and ULB on its Board. The SPV will be a limited company incorporated under the Companies Act, 2013 at the city-level, in which the State/UT and the Urban Local Body (ULB) will be the promoters having equity shareholding in the ratio 50:50. The private sector or financial institutions could be considered for taking equity stake in the SPV, provided the shareholding pattern of 50:50 of the State/UT and the ULB is maintained and the State/UT and the ULB together have majority shareholding and control of the SPV. Funds provided by the Government of India in the Smart Cities Mission to the SPV will be in the form of tied grant and kept in a separate Grant Fund.[13]
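The shareholding conditions described above can be expressed as a simple check. This is a minimal sketch under stated assumptions, not an official rule set: the function name and parameters are hypothetical, stakes are given as fractions of total equity, and "majority" is read as strictly more than half.

```python
from fractions import Fraction


def spv_shareholding_ok(state: Fraction, ulb: Fraction,
                        private: Fraction = Fraction(0)) -> bool:
    """Check a hypothetical SPV equity split against the conditions in the text."""
    stakes = (state, ulb, private)
    # Stakes must be non-negative and add up to the whole equity.
    if any(s < 0 for s in stakes) or sum(stakes) != 1:
        return False
    equal_promoters = state == ulb                    # 50:50 between State/UT and ULB
    promoter_majority = state + ulb > Fraction(1, 2)  # assumed: strictly more than half
    return equal_promoters and promoter_majority


# No private stake: State/UT and ULB hold half each.
print(spv_shareholding_ok(Fraction(1, 2), Fraction(1, 2)))                   # True
# A 40% private stake with promoters at 30% each still satisfies both conditions.
print(spv_shareholding_ok(Fraction(3, 10), Fraction(3, 10), Fraction(2, 5)))  # True
# A 50% private stake leaves the promoters without a majority.
print(spv_shareholding_ok(Fraction(1, 4), Fraction(1, 4), Fraction(1, 2)))    # False
```

Exact fractions are used instead of floating-point numbers so that the equality and sum checks are not affected by rounding.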

For the purpose of implementation and monitoring of the projects, the MoUD has also established an Apex Committee and National Mission Directorate for National Level Monitoring[14], a State Level High Powered Steering Committee (HPSC) for State Level Monitoring[15] and a Smart City Advisory Forum at the City Level [16].

Also, several consulting firms[17] have been assigned to the 100 cities to help them prepare action plans.[18] Some of them include CRISIL, KPMG, McKinsey, etc. [19]

Questions unanswered

- What policies and regulations have been put in place to account for the smart cities, apart from policies looking at issues of security, privacy, etc.?

- What international/national standards will be adopted during the development of the smart cities? Though the Bureau of Indian Standards is in the process of formulating standardized guidelines for the smart cities in India[20], there is a lack of clarity on the adoption of these national standards, along with the role of international standards like the ones formulated by ISO.

What is the role of Foreign Governments and bodies in the Smart cities mission?

Ever since the government's ambitious project was announced and cities were shortlisted, many countries across the globe have shown keen interest in helping specific shortlisted cities build smart cities and are willing to invest financially. Countries like Sweden, Malaysia, UAE, USA, etc. have agreed to partner with India for the mission.[21] For example, the UK has partnered with the Government to develop three Indian cities: Pune, Amravati and Indore.[22] Israel's start-up city Tel Aviv also entered into an agreement to help with urban transformation in the Indian cities of Pune, Nagpur and Nashik to foster innovation and share its technical know-how.[23] France has shown interest in Nagpur and Puducherry, while the United States is interested in Ajmer, Vizag and Allahabad. Also, Spain's Barcelona Regional Agency has expressed interest in exchanging technology with Delhi. Apart from foreign governments, many organizations and multilateral agencies are also keen to partner with the Indian government and have offered financial assistance by way of loans. Some of them include the UK government-owned Department for International Development, the German government's KfW development bank, Japan International Cooperation Agency, the US Trade and Development Agency, United Nations Industrial Development Organization and United Nations Human Settlements Programme. [24]

Questions unanswered

- Do these governments or organizations have influence on any other component of the Smart cities?

- How much are the foreign governments and multilateral bodies spending on the respective cities?

- What kind of technical know-how is being shared with the Indian government and cities?

What is the way ahead?

On the basis of the SCP, the MoUD will evaluate the proposals, assess their credibility and select 20 smart cities out of the short-listed ones for execution of the plan in the first phase. Each selected city will set up an SPV and receive funding from the Government.

Questions unanswered

- Will the deadline of submission of the Smart Cities Proposal be pushed back?

- After the SCP is submitted on the basis of consultation with the citizens and public, will they be further involved in the implementation of the project and what will be their role?

- How will the MoUD and other associated organizations and actors take into account the implementation realities of the project, such as land displacement, rehabilitation of slum dwellers, etc.?

- How are ICT based systems going to be utilized to make the cities and the infrastructure "smart"?

- How is the MoUD going to respond to the concerns and criticism emerging from various sections of the society, as being reflected in the news items?

- How will the smart cities affect, and integrate with, the existing laws, regulations and policies? Does the Government intend to use the existing legislation in its entirety, or to update and amend the laws for implementation of the Smart Cities Mission?

[1] Smart Cities, Mission Statement and Guidelines, Ministry of Urban Development, Government of India, June 2015, Available at : http://smartcities.gov.in/writereaddata/SmartCityGuidelines.pdf

[2] http://articles.economictimes.indiatimes.com/2015-08-27/news/65929187_1_jammu-and-kashmir-12-cities-urban-development-venkaiah-naidu

[3] http://india.gov.in/spotlight/smart-cities-mission-step-towards-smart-india

[4] http://smartcities.gov.in/writereaddata/Process%20of%20Selection.pdf

[5] Full list : http://www.scribd.com/doc/276467963/Smart-Cities-Full-List

[6] http://smartcities.gov.in/writereaddata/Process%20of%20Selection.pdf

[7] http://www.ibtimes.co.in/modi-govt-select-only-10-cities-under-smart-city-project-this-year-report-658888

[8] http://smartcities.gov.in/writereaddata/Financing%20of%20Smart%20Cities.pdf

[9] Smart Cities presentation by MoUD : http://smartcities.gov.in/writereaddata/Presentation%20on%20Smart%20Cities%20Mission.pdf

[10] http://indianexpress.com/article/india/india-others/smart-cities-projectfrom-france-to-us-a-rush-to-offer-assistance-funds/

[11] http://indianexpress.com/article/india/india-others/funding-for-smart-cities-key-to-coffer-lies-outside-india/#sthash.5lnW9Jsq.dpuf

[12] http://india.gov.in/spotlight/smart-cities-mission-step-towards-smart-india

[13] http://smartcities.gov.in/writereaddata/SPVs.pdf

[14] http://smartcities.gov.in/writereaddata/National%20Level%20Monitoring.pdf

[15] http://smartcities.gov.in/writereaddata/State%20Level%20Monitoring.pdf

[16] http://smartcities.gov.in/writereaddata/City%20Level%20Monitoring.pdf

[17] http://smartcities.gov.in/writereaddata/List_of_Consulting_Firms.pdf

[18] http://pib.nic.in/newsite/PrintRelease.aspx?relid=128457

[19] http://economictimes.indiatimes.com/articleshow/49242050.cms?utm_source=contentofinterest&utm_medium=text&utm_campaign=cppst

[20] http://www.business-standard.com/article/economy-policy/in-a-first-bis-to-come-up-with-standards-for-smart-cities-115060400931_1.html

[21] http://accommodationtimes.com/foreign-countries-have-keen-interest-in-development-of-smart-cities/

[22] http://articles.economictimes.indiatimes.com/2015-11-20/news/68440402_1_uk-trade-three-smart-cities-british-deputy-high-commissioner

[23] http://www.jpost.com/Business-and-Innovation/Tech/Tel-Aviv-to-help-India-build-smart-cities-435161?utm_campaign=shareaholic&utm_medium=twitter&utm_source=socialnetwork

[24] http://indianexpress.com/article/india/india-others/smart-cities-projectfrom-france-to-us-a-rush-to-offer-assistance-funds/#sthash.nCMxEKkc.dpuf

ISO/IEC JTC 1/SC 27 Working Groups Meeting, Jaipur

The Bureau of Indian Standards (BIS), in collaboration with the Data Security Council of India (DSCI), hosted the global standards meeting of the ISO/IEC JTC 1/SC 27 Working Groups at Hotel Marriott in Jaipur, Rajasthan, from 26 to 30 October 2015, followed by a half-day conference on Friday, 30 October on the importance of standards in the domain. At the event, experts from across the globe deliberated on forging international standards on privacy, security and risk management in IoT, cloud computing and many other contemporary technologies, along with updating existing standards. Under SC 27, five working groups held meetings in parallel on their respective projects and study periods. The five Working Groups are as follows:

- WG 1: Information Security Management Systems;

- WG 2: Cryptography and Security Mechanisms;

- WG 3: Security Evaluation, Testing and Specification;

- WG 4: Security Controls and Services; and

- WG 5: Identity Management and Privacy technologies; competence of security management

This key set of Working Groups (WGs) met in India for the first time. Under each working group, professionals discussed and debated the development of international standards to address issues regarding security, identity management and privacy.

CIS had the opportunity to attend meetings under Working Group 5. This group held parallel meetings on several topics, namely:

- Privacy enhancing data de-identification techniques (ISO/IEC NWIP 20889): Data de-identification techniques are important when it comes to PII, as they enable the exploitation of the benefits of data processing while maintaining compliance with regulatory requirements and the relevant ISO/IEC 29100 privacy principles. The selection, design, use and assessment of these techniques need to be performed appropriately in order to effectively address the risks of re-identification in a given context. There is thus a need to classify known de-identification techniques using standardized terminology, and to describe their characteristics, including the underlying technologies, the applicability of each technique to reducing the risk of re-identification, and the usability of the de-identified data. This is the main goal of this International Standard. Meetings were conducted to resolve comments sent by organisations across the world, review draft documents and agree on next steps.

- A study period on a Privacy Engineering framework: This session deliberated upon contributions and terms of reference, and discussed the scope of the emerging field of privacy engineering. The session also reviewed important terms to be included in the standard and identified possible improvements to existing privacy impact assessment and management standards. It was identified that the goal of this standard is to integrate privacy into systems as part of the systems engineering process. A concern was also raised that the framework must be consistent with the Privacy framework under ISO 29100 and the HL7 privacy and security standards.

- A study period on user friendly online privacy notice and consent: The basic purpose of this New Work Item Proposal is to assess the viability of producing, within WG 5, a guideline for PII Controllers on providing easy to understand notices and consent procedures to PII Principals. At the meeting, a brief overview of the contributions received was given, along with an assessment of the liaison with ISO/IEC JTC 1/SC 35 and other entities. The proposed International Standard gives guidelines for the content and structure of online privacy notices, as well as of documents asking for consent to collect and process personally identifiable information (PII) from PII principals online. It is applicable to all situations where a PII controller or any other entity processing PII informs PII principals in any online context.

- Some of the other sessions under Working Group 5 were on Privacy Impact Assessment (ISO/IEC 29134), standardization in the area of biometrics and biometric information protection, and the Code of Practice for the protection of personally identifiable information.
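To make the idea of de-identification and re-identification risk more concrete, here is a minimal Python sketch of two widely known techniques: pseudonymization (replacing a direct identifier with a keyed hash) and generalization (coarsening an attribute such as age into bands). It is an illustration only and is not drawn from the ISO/IEC 20889 draft. The record fields, the key and the function names are all hypothetical, and the group-size check at the end is a simple k-anonymity-style measure chosen here for illustration.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret key for the keyed hash; a real deployment would
# generate and store this securely rather than hard-coding it.
SECRET_KEY = b"example-key-not-for-production"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a truncated HMAC-SHA256 digest."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def generalize_age(age: int, width: int = 10) -> str:
    """Coarsen an exact age into a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def deidentify(record: dict) -> dict:
    """Drop the name, keep a pseudonym, and generalize the age."""
    return {
        "id": pseudonymize(record["name"]),
        "age_band": generalize_age(record["age"]),
        "city": record["city"],
    }


def smallest_group(records: list[dict], quasi_identifiers: tuple[str, ...]) -> int:
    """Size of the smallest group of records that share the same
    quasi-identifier values; a small value suggests a higher
    re-identification risk."""
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(counts.values())


# Hypothetical sample data, invented for this sketch.
records = [
    {"name": "A", "age": 31, "city": "Jaipur"},
    {"name": "B", "age": 38, "city": "Jaipur"},
    {"name": "C", "age": 45, "city": "Pune"},
]

released = [deidentify(r) for r in records]
k = smallest_group(released, ("age_band", "city"))
print(k)
```

In this made-up sample the third record is the only one in its age band and city, so the smallest group has size 1. This shows why the usability of de-identified data and the residual risk of re-identification have to be assessed together in a given context.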

ISO/IEC JTC 1/SC 27 is a subcommittee of JTC 1, the joint technical committee of the international standards bodies ISO and IEC on information technology. SC 27 deals with IT security techniques and conducts regular meetings across the world. JTC 1 has over 2600 published standards developed under the broad umbrella of the committee and its 20 subcommittees. Draft International Standards adopted by the joint technical committees are circulated to the national bodies for voting. Publication as an International Standard requires approval by at least 75% of the national bodies casting a vote. In India, the Bureau of Indian Standards (BIS) is the National Standards Body. Standards are formulated keeping in view national priorities, industrial development, technical needs, export promotion, health, safety, etc., and are harmonized with ISO/IEC standards (wherever they exist) to the extent possible, in order to facilitate adoption of ISO/IEC standards by all segments of industry and business. BIS has been actively participating in the Technical Committee work of ISO/IEC and is currently a Participating member in 417 and 74 Technical Committees/Subcommittees, and an Observer member in 248 and 79 Technical Committees/Subcommittees, of ISO and IEC respectively. BIS holds Secretarial responsibilities of 2 Technical Committees and 6 Subcommittees of ISO.

The previous meeting was held in May 2015 in Malaysia, followed by this meeting in October 2015 in Jaipur. 51 countries play an active role as 'Participating Members', India being one, while a few countries take part as observing members. Participating countries also have the right to vote in all official ballots related to standards. The representatives of each country work on the preparation and development of the International Standards and provide feedback to their national organizations.

There was an additional study group meeting on IoT to discuss comments on the previous drafts, suggest changes, review responses and identify standards gaps in SC 27.

On 30 October 2015, BIS and DSCI hosted a half-day international conference on Cyber Security and Privacy Standards, comprising keynotes and panel discussions. It brought together national and international experts to share experience and exchange views on cyber security techniques and the protection of data and privacy in international standards, and on their growing importance in society. The conference looked at themes such as the role of standards in smart cities and responding to the challenges of investigating cyber crimes through standards. It was emphasised that, in an increasingly digital world, there is universal agreement on the need for cyber security: because infrastructure is globally connected, cyber threats are also distributed and are not restricted by geographical boundaries. Hence, the need for technical and policy solutions, along with standards, was highlighted for the future protection of a digital world that is now deeply embedded in life, business and government. Standards will help in setting up crucial infrastructure for data security and in building associated infrastructure along these lines.

Experts highlighted the importance of standards in the context of smart cities. Harmonization of regulations with standards must be looked at, primarily by creating standards that regulators can refer to. Broadly, the challenges faced by smart cities are data security, privacy and the digital resilience of the infrastructure. It was suggested that these areas be addressed first in developing standards for smart cities. ISO/IEC also has a Working Group and a Strategic Group focussing on Smart Cities. The risks relating to digitisation, networks, identity management, etc. must be considered in creating the standards.

The next meeting has been scheduled for April 2016 in Tampa (USA).

This meeting was a good opportunity to interact with experts from various parts of the world and to understand the working of ISO meetings, which are held two or three times a year. The Centre for Internet and Society will continue this work and remain involved in the standard-setting process at future Working Group meetings.

RTI PDF

RTI.pdf

—

PDF document,

412 kB (422252 bytes)

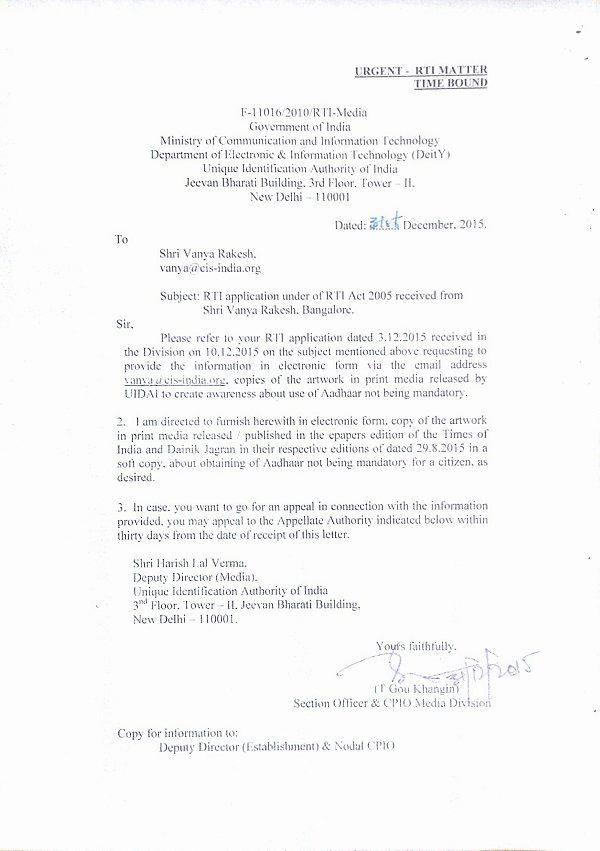

RTI response regarding the UIDAI

The Supreme Court of India, by an order dated 11th August 2015, directed the Government to widely publicize in electronic and print media, including radio and television networks, that obtaining an Aadhaar card is not mandatory for citizens to avail welfare schemes of the Government (until the matter is resolved). CIS filed an RTI request for information about the steps and initiatives taken by the Government in this regard, and for details of the expenditure incurred to publicize and inform the public that Aadhaar is not mandatory to avail welfare schemes of the Government.

Response: The reply stated that UIDAI headquarters issued an advisory to all regional offices to comply with the order, and that several advertisement campaigns were run. The total cost incurred so far by UIDAI for this is Rs. 317.30 lakh.

Benefits and Harms of "Big Data"

Introduction

In 2011 it was estimated that the quantity of data produced globally would surpass 1.8 zettabytes[1]. By 2013 that had grown to 4 zettabytes[2], and with the nascent development of the so-called 'Internet of Things' gathering pace, these trends are likely to continue. This expansion in the volume, velocity, and variety of data available[3], together with the development of innovative forms of statistical analytics, is generally referred to as "Big Data", though there is no single agreed-upon definition of the term. Although still in its initial stages, Big Data promises to provide new insights and solutions across a wide range of sectors, many of which would have been unimaginable even 10 years ago.