
ICANN’s Problems with Accountability and the .WEB Controversy

Chronological Background of the .WEB Auction

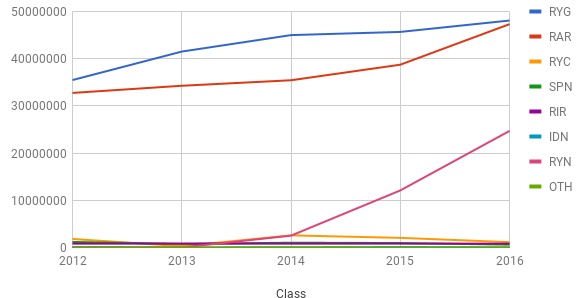

In June 2012, ICANN launched a new phase for the creation and operation of generic top-level domains (gTLDs). After confirming the eligibility of seven applicants for the rights to the .WEB domain name, ICANN placed them in a string contention set (a group of applications for similar or identical applied-for gTLD strings).[1]

[Quick Note: ICANN procedure encourages the applicants in a contention set to resolve it through voluntary settlement amongst themselves (also referred to as a private auction). In that case, each applicant's participation fee of US $185,000 goes to ICANN and the auction proceeds are distributed among the bidders. If a private auction fails, ICANN conducts a last resort auction; in that case, the total auction proceeds go to ICANN along with the participation fees.[2]]

In June 2016, NuDotCo LLC (NDC), a bidder that had previously taken part in nine private auctions without any objection, withdrew its consent to a voluntary settlement. Ruby Glen LLC, another bidder, contacted NDC to ask whether it would reconsider. NDC's Chief Financial Officer then informed Ruby Glen of changes in NDC's Board membership, financial position and management, and of a potential change in its ownership.[3] Concerned about the transparency of the auction process, Ruby Glen asked ICANN on June 22 to postpone the auction. The purpose was to let ICANN investigate the discrepancies between NDC's official application and its representations to Ruby Glen.[4] The Vice President of ICANN's gTLD Operations and the independent ICANN Ombudsman led separate investigations. Both were limited to a few e-mails seeking NDC's confirmation of the status quo. On the basis of NDC's denial of any material changes, ICANN announced that the auction would proceed as planned, as no grounds had been found for its postponement.[5]

On July 27, NDC won the auction with a bid of USD 135 million. This beat the previous record by $90 million and doubled ICANN's total net proceeds from the past fifteen auctions it had conducted.[6] Soon after NDC's win, Verisign, Inc. issued a public statement. Verisign, the market giant that operates the .com and .net registries, disclosed that it had been involved in funding NDC's bid from the very beginning, effectively revealing that it had used NDC as a front for the auction. Verisign agreed to transfer USD 130 million to NDC, allowing the latter to retain a $5 million stake in .WEB.[7]

Ruby Glen LLC filed for an injunction against the transfer of the .WEB rights to NDC. It also sought expedited discovery[8] against ICANN and NDC in order to gather evidentiary support for the temporary restraining order.[9] At the same time, Donuts Inc., Ruby Glen's parent company, filed a claim for recovery of economic loss on grounds including negligence, fraud and breach of bylaws. Afilias, the second highest bidder, asked ICANN to disqualify NDC's bid and hand the .WEB rights over to it.[10] At ICANN57, Afilias also raised the issue publicly before ICANN's Board, and Verisign followed with a rebuttal. However, ICANN's Board declined to comment at that point, as the matter was still the subject of ongoing litigation.[11]

Issues Regarding ICANN’s Assurance of Accountability

The Post-Transition IANA promised enhanced transparency and accountability to the global multistakeholder community. The events surrounding the .WEB auction have raised concerns about ICANN's lack of transparency and accountability. ICANN has enforced arbitrarily policies that should have been mandatory, relating to internal accountability mechanisms, fiduciary responsibilities and the promotion of competition. In doing so, it has violated Bylaws that obligate it to operate 'consistently, neutrally, objectively, and fairly, without singling out any particular party for discriminatory treatment'.[12]

Though the US court ruled in favour of ICANN, the case exposed discrepancies between ICANN's emphasis on procedural compliance and its approach to substantive compliance with its own rules and regulations. These discrepancies have forced the community to acknowledge that corporate strategies, latent interests and financial advantages undermine ICANN's commitment to accountability. ICANN approved NDC's unprecedentedly high bid with minimal investigation or hesitation, even after Verisign's takeover. This points to pressing concerns that stand in the way of a convincing commitment to accountability, such as:

- The Lack of Substantive Fairness and Accountability at ICANN (A Superficial Investigation)

- ICANN’s Sketchy Tryst with Legal Conformity

- The Financial Accountability of ICANN’s Auction Proceeds

The Lack of Substantive Fairness and Accountability in ICANN's Screening Processes:

Ruby Glen’s claim that ICANN conducted a cursory investigation of NDC’s misleading and unethical behaviour brought to light the ease and arbitrariness with which applications are deemed valid and eligible.

- Disclosure of Significant Details Unique to Applicant Profiles: At the initial stage, new gTLD applications require disclosure of background information. This includes proof of legal establishment, financial statements, primary and secondary contacts to represent the company, and its officers, directors, partners and major shareholders. At this stage, user registration IDs for the TLD Application System (TAS) are created to build a unique profile for each party in an auction. These IDs require the applicant's VAT/tax/business ID, principal business address, phone and fax numbers, etc.[13] Any significant change in an applicant's details would therefore substantially alter its unique profile, creating uncertainty about the parties involved and the validity of the transactions undertaken. NDC's application did not meet these requirements. Its financial statements, secondary contact, board members and ownership all changed at some point before the auction took place (either before or after the application was submitted).[14]

- Mandatory Declaration of Third Party Funding: ICANN does not deem eligible any application that presupposes a future joint venture or any organisational unpredictability. If a third party is involved in funding the applicant, the applicant must provide evidence of that funding commitment when it submits its financial documents.[15] Verisign publicly announced that it had been involved in NDC's funding from the very beginning (well before the auction), and later in its management. This shows that NDC's failure to notify ICANN made its application ineligible, or at the very least irregular.[16]

- Vague Consequences of Failure to Notify ICANN of Changes: If material changes occur in the applicant's management, ownership or financial position, the applicant must notify ICANN by submitting updated documents. However, whether the applicant is then re-evaluated is left to ICANN's discretion, and there is no indication of what counts as a material change. If an applicant fails to notify ICANN of changes that would render previously submitted information false or misleading, ICANN may reject or deny the application.[17] NDC absolutely and repeatedly denied any changes during the extremely brief e-mail 'investigation' conducted by ICANN and the Ombudsman. This shows that NDC never intended to reveal its close relationship with Verisign. At no point did ICANN conduct an extended evaluation.[18] Note: The discretionary power allowed here and the vague use of the term 'material' undermine any real accountability on ICANN's part. In particular, nothing makes ICANN answerable for ensuring that checks are carried out at all stages to discourage dishonest behaviour.

- Arbitrary Enforcement of Background Checks: To confirm the eligibility of all applicants, ICANN conducts background screening during its initial evaluation process. This screening verifies the information disclosed at both the individual and entity levels.[19] Applicants may be asked to produce any and all documents or evidence to help ICANN complete this screening, and relevant information received from 'any source' may be taken into account. However, the screening covers only two criteria: general business diligence and criminal history, and any record of cybersquatting behaviour.[20] In this case, ICANN's background screening was clearly not thorough, given Verisign's confirmed involvement from the beginning. At no point was NDC asked to submit any additional documents that would have enabled ICANN to inquire into its business diligence. The only exchange was the e-mails between NDC, ICANN and its Ombudsman.[21] ICANN further argued that it was not required to conduct background checks or any screening process at all, as the provisions only permit it to do so when it sees fit.[22] This loophole hinders transparency by giving ICANN the authority to ignore questionable details in applications it wishes to deem eligible. It can do so based on its own strategic leanings, advantageous circumstances or other interests.

ICANN deliberately avoided discussing or investigating the 'allegations' against NDC, which were eventually proved true. Together with a visible compromise in the fairness and equity of the application process, this suggests that ICANN had already settled on the outcome it desired.

ICANN’s Sketchy Tryst with Legal Conformity:

ICANN's lack of substantive compliance with California law and with its own rules and regulations leaves us with a realisation: its efforts towards transparency, enforcement and compliance barely meet the procedural minimum. This is true even with the emphasis on the IANA Stewardship and Accountability Process.

- Rejection of Request for Postponement of Auction: ICANN's stated intent to 'initiate the Auction process once the composition of the set is stabilised' implies that there must be no pending accountability mechanisms with regard to any applicant.[23] ICANN itself decides when investigations or reviews concerning applicants open and close. Any arbitrariness in deciding the date on which such mechanisms are deemed pending may therefore affect an applicant's claim of procedural irregularity. In this case, ICANN had already scheduled the auction for July 27, 2016. Ruby Glen requested on June 22, 2016 that the auction be postponed and NDC's eligibility investigated.[24] These accountability mechanisms were still ongoing, having begun after the auction process was initiated. Even so, ICANN confirmed that the process would continue without the assurance of a stable contention set that procedure requires. Ruby Glen claimed that this violated the auction rules. ICANN dismissed the claim, arguing that the requirement is only that no accountability mechanisms be pending when the auction is scheduled.[25] On this reading, an objection, dispute resolution process or accountability mechanism may be initiated against an applicant after the auction date has been fixed. Even then, the auction continues although the contention set may not be stable. This line of defence does not conform to the purpose behind the wording of ICANN's auction procedure, as discussed above.

- Lack of Adequate Participation in the Discovery Planning Process: Under federal law, ICANN was required to confer with Ruby Glen so that discovery could begin and evidentiary support for the injunction could be gathered. However, the parties disagreed over the extent of participation required from each of them. ICANN made only a single appearance in court, after which it refused to engage with Ruby Glen.[26] ICANN should have conducted a thorough investigation based on both NDC's and Verisign's public statements. It should also have engaged more cooperatively in the conference, to comply substantively with its internal procedures as well as its jurisdictional obligations. Under ICANN's rules, an applicant may not assign its rights or obligations in connection with its application to another party, as NDC did. This rule is meant to promote a competitive market and ensure certainty in transactions.[27] However, ICANN's lack of substantive compliance with due procedure has weakened such rules.

- Demand to Dismiss Ruby Glen's Complaint: ICANN sought the dismissal of Ruby Glen's complaint on the grounds that it was vague and unsubstantiated.[28] After the auction, however, Verisign publicly confirmed Ruby Glen's allegations and suspicions about NDC's dishonest behaviour, undercutting ICANN's basis for seeking dismissal.

- Inapplicability of ICANN's Bylaws to its Contractual Relationships: ICANN maintained that its bylaws are not part of the application documents or of its contracts with applicants, as it is a not-for-profit public benefit corporation. It also maintained that its liability for a breach of its foundational documents extends only to its officers, directors, members, etc.[29] It further argued that Ruby Glen had not pleaded any facts suggesting that a duty of care arose from ICANN's contractual relationship with Ruby Glen and Donuts Inc.[30] ICANN dismisses, and considerably disregards, fiduciary obligations such as the duty of care and the duty of inquiry in contractual relationships. This contravenes its promised commitments and core values, which are integral to its entire accountability process and are to 'apply in the broadest possible range of circumstances'.[31]

- ICANN's Legal Waiver and Public Policy: Ruby Glen submitted that, under Section 1668 of the California Civil Code, a covenant not to sue was against public policy. It argued that the legal waiver all applicants were made to sign in their applications was therefore unenforceable.[32] This waiver releases ICANN from 'any claims arising out of, or related to, any action or failure to act'. The complaint argued that such an agreement 'not to challenge ICANN in court, irrevocably waiving the right to sue on basis of any legal claim' was unconscionable.[33] ICANN, however, defended the enforceability of the waiver. It argued that Section 1668 invalidates only a covenant not to sue that is specifically designed to avoid responsibility for one's own fraud or wilful injury.[34] ICANN further argued that a waiver is entirely valid under California law if it preserves access to accountability mechanisms 'within ICANN's bylaws to challenge any final decision of ICANN's with respect to an application'. It must be kept in mind that challenges to ICANN's final decisions can proceed only through its own accountability mechanisms. These include the Reconsideration Request process, the Independent Review Panel and the Ombudsman, which are mostly conducted by, accountable to and applied at the discretion of the Board.[35] The only recourse for dissatisfied applicants is therefore through processes managed by ICANN. As the .WEB case has shown, this leaves no scope for independence and impartiality in the review or inquiry concerned.

- Note: ICANN has also previously argued that Section 1668 does not restrict its waivers, because the parties involved are sophisticated and there is no element of oppression. It has further argued that these transactions do not involve the public interest, as ICANN does not provide necessary services such as health care or transportation.[36] This line of argument shows a continued refusal to acknowledge responsibility for ensuring access to an essential good in a diverse community. It justifies concerns about ICANN's commitment to accessibility and human rights.

ICANN is required to remain accountable to the community's stakeholders through the mechanisms listed in its Bylaws. Its repeated difficulty in ensuring that these mechanisms adhere to the purpose behind jurisdictional regulations confirms that their impartiality, independence and effectiveness are hindered.

The Financial Accountability of ICANN’s Auction Proceeds:

The internet community has identified the use and distribution of the significant auction proceeds accruing to ICANN as central to financial transparency. This is especially so as contention sets are likely to become more common in future.

- Private Inurement Prohibition and Legal Requirements of Tax-Exempt Organisations: Under California state law as well as federal law, not-for-profit public benefit corporations may receive tax exemptions and tax-deductible charitable donations. For ICANN, these depend on fulfilling its jurisdictional obligations. These include avoiding contracts that may result in excessive economic benefit to any party involved, or that may lead to any deviation from purely charitable and scientific purposes.[37] ICANN's Articles require that it 'shall pursue the charitable and public purposes of lessening the burdens of government and promoting the global public interest in the operational stability of the Internet'.[38] For this reason, ICANN's accumulation of around USD 60 million since 2014 (the total net proceeds from over 14 contention sets) has been viewed with unease. The sharp rise in these proceeds after the .WEB auction is impossible to ignore.[39] ICANN is dedicated to a bottom-up, multistakeholder policy development process. Given this, relying on a single, ambiguous footnote in its Guidebook to deal with the significant funds that accrue from last resort auctions is grossly insufficient. The footnote does not even name who is to decide their 'appropriate' use.[40]

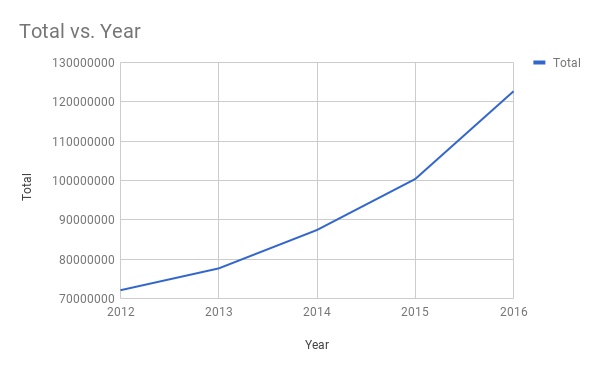

- Need for Careful and Inclusive Deliberation Over the Use of Auction Proceeds: At the end of fiscal year 2016, ICANN's balance sheet showed a total of USD 399.6 million. However, this figure did not include the .WEB sale amount, as the auction took place after the end of the fiscal year (June 30, 2016).[41] The USD 135 million addition to ICANN's accounts is around seven times the average winning bid. It shows the need for greater scrutiny of how ICANN allocates and distributes these auction proceeds.[42] In finding an 'appropriate purpose' for these funds, ICANN must adhere to its legal nature under US jurisdiction as well as to its vision, mission and commitments. This would help increase public confidence and financial transparency.

- The CCWG Charter on New gTLD Auction Proceeds: ICANN has always maintained that it recognised, from the outset, the concern of 'significant funds accruing as a result of several auctions'.[43] In March 2015, the GNSO raised issues relating to the distribution of auction proceeds at ICANN52, in response to growing concerns in the community.[44] A Charter was then drafted proposing a Cross-Community Working Group (CCWG) on New gTLD Auction Proceeds, to help ICANN's Board allocate these funds.[45] After detailed discussion at ICANN56, the draft charter was forwarded to the various supporting organisations and advisory committees for comment.[46] Two organisations raised no objections to the Charter. It was adopted by the ALAC, ASO, ccNSO and GNSO, after which members and co-chairs were identified from these organisations to constitute the CCWG.[47] ICANN's Board will have final responsibility for disbursing the proceeds. The CCWG will be responsible for submitting proposals on the mechanism for allocating the funds, keeping ICANN's fiduciary and legal obligations in mind.[48] In its proposals, the CCWG must recommend how to avoid possible conflicts of interest, maintain ICANN's tax-exempt status, and ensure diversity and inclusivity throughout the process.[49] Notably, the CCWG cannot make recommendations 'regarding which organisations are to be funded or not'; it may only propose the process by which allocation is to be undertaken.[50] ICANN's Guidebook mentions possible uses for the proceeds, to be decided by the Board. These include 'grants to support new gTLD applications or registry operators from communities', the creation of a fund for 'specific projects for the benefit of the Internet community', and the 'establishment of a security fund to expand use of secure protocols', among others.[51]

- A Slow Process and the Need for More Official Updates: ICANN's total net auction proceeds currently stand at USD 233,455,563. Given this, the lack of communication about any allocation, or about the process behind it, points to an urgent need for a Board decision on a timeframe for allocating these proceeds, based on a CCWG recommendation.[52] However, the process has been very slow. The first CCWG meeting on auction proceeds was scheduled for 26 January 2017, and the lists of members and observers were made public only recently.[53] Even parties interested in applying for these funds at a later stage may participate in meetings. They must disclose this interest in a Statement of Interest and Declaration of Intention, in keeping with the CCWG's efforts towards transparency and accountability.[54]

The worrying consequences of ICANN's lack of financial as well as legal accountability (especially in light of its controversies) remind us of the need to constantly reassess its commitment to substantive transparency, enforcement and compliance with its rules and regulations. Its current preoccupation with procedural regularity alone must not be mistaken for a greater commitment to accountability, as assured by the post-transition IANA.

[1] DECLARATION OF CHRISTINE WILLETT IN SUPPORT OF ICANN’S OPPOSITION TO PLAINTIFF’S EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER, 2. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-declaration-willett-25jul16-en.pdf)

[2] 4.3, gTLD Applicant Guidebook ICANN, 4-19. (https://newgtlds.icann.org/en/applicants/agb)

[3] NOTICE OF AND EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER; MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT THEREOF, 15. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-ex-parte-application-tro-memo-points-authorities-22jul16-en.pdf)

[4] NOTICE OF AND EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER; MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT THEREOF, 15. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-ex-parte-application-tro-memo-points-authorities-22jul16-en.pdf)

[5] DECLARATION OF CHRISTINE WILLETT IN SUPPORT OF ICANN’S OPPOSITION TO PLAINTIFF’S EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER, 4-7. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-declaration-willett-25jul16-en.pdf)

[6] PLAINTIFF RUBY GLEN, LLC’S NOTICE OF MOTION AND MOTION FOR LEAVE TO TAKE THIRD PARTY DISCOVERY OR, IN THE ALTERNATIVE, MOTION FOR THE COURT TO ISSUE A SCHEDULING ORDER, 3.

[7] (https://www.verisign.com/en_US/internet-technology-news/verisign-press-releases/articles/index.xhtml?artLink=aHR0cDovL3ZlcmlzaWduLm5ld3NocS5idXNpbmVzc3dpcmUuY29tL3ByZXNzLXJlbGVhc2UvdmVyaXNpZ24tc3RhdGVtZW50LXJlZ2FyZGluZy13ZWItYXVjdGlvbi1yZXN1bHRz)

[8] An expedited discovery request can provide the required evidentiary support needed to meet the Plaintiff’s burden to obtain a preliminary injunction or temporary restraining order. (http://apps.americanbar.org/litigation/committees/businesstorts/articles/winter2014-0227-using-expedited-discovery-with-preliminary-injunction-motions.html)

[9] NOTICE OF AND EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER; MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT THEREOF, 2. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-ex-parte-application-tro-memo-points-authorities-22jul16-en.pdf)

[10] (http://domainincite.com/20789-donuts-files-10-million-lawsuit-to-stop-web-auction); (https://www.thedomains.com/2016/08/15/afilias-asks-icann-to-disqualify-nu-dot-cos-135-million-winning-bid-for-web/)

[11] (http://www.domainmondo.com/2016/11/news-review-icann57-hyderabad-india.html)

[12] Art. III, Bylaws of Public Technical Identifiers, ICANN. (https://pti.icann.org/bylaws)

[13] 1.4.1.1, gTLD Applicant Guidebook ICANN, 1-39. (https://newgtlds.icann.org/en/applicants/agb)

[14] NOTICE OF AND EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER; MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT THEREOF, 15. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-ex-parte-application-tro-memo-points-authorities-22jul16-en.pdf)

[15] 1.2.1; 1.2.2, gTLD Applicant Guidebook ICANN, 1-21. (https://newgtlds.icann.org/en/applicants/agb)

[16] (https://www.verisign.com/en_US/internet-technology-news/verisign-press-releases/articles/index.xhtml?artLink=aHR0cDovL3ZlcmlzaWduLm5ld3NocS5idXNpbmVzc3dpcmUuY29tL3ByZXNzLXJlbGVhc2UvdmVyaXNpZ24tc3RhdGVtZW50LXJlZ2FyZGluZy13ZWItYXVjdGlvbi1yZXN1bHRz)

[17] 1.2.7, gTLD Applicant Guidebook ICANN, 1-30. (https://newgtlds.icann.org/en/applicants/agb)

[18] DECLARATION OF CHRISTINE WILLETT IN SUPPORT OF ICANN’S OPPOSITION TO PLAINTIFF’S EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER, 4. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-declaration-willett-25jul16-en.pdf)

[19] 1.1.2.5, gTLD Applicant Guidebook ICANN, 1-8. (https://newgtlds.icann.org/en/applicants/agb)

[20] 1.2.1, gTLD Applicant Guidebook ICANN, 1-21. (https://newgtlds.icann.org/en/applicants/agb)

[21] DECLARATION OF CHRISTINE WILLETT IN SUPPORT OF ICANN’S OPPOSITION TO PLAINTIFF’S EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER, 7. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-declaration-willett-25jul16-en.pdf)

[22] 6.8; 6.11, gTLD Applicant Guidebook ICANN, 6-5. (https://newgtlds.icann.org/en/applicants/agb);

DEFENDANT INTERNET CORPORATION FOR ASSIGNED NAMES AND NUMBERS’ MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT OF MOTION TO DISMISS FIRST AMENDED COMPLAINT, 10. (http://domainnamewire.com/wp-content/icann-donuts-motion.pdf)

[23] 1.1.2.10, gTLD Applicant Guidebook ICANN. (https://newgtlds.icann.org/en/applicants/agb)

[24] NOTICE OF AND EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER; MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT THEREOF, 15. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-ex-parte-application-tro-memo-points-authorities-22jul16-en.pdf)

[25] DEFENDANT INTERNET CORPORATION FOR ASSIGNED NAMES AND NUMBERS’ MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT OF MOTION TO DISMISS FIRST AMENDED COMPLAINT, 8. (http://domainnamewire.com/wp-content/icann-donuts-motion.pdf)

[26] 26(f); 65, Federal Rules of Civil Procedure (https://www.federalrulesofcivilprocedure.org/frcp/title-viii-provisional-and-final-remedies/rule-65-injunctions-and-restraining-orders/); (https://www.federalrulesofcivilprocedure.org/frcp/title-v-disclosures-and-discovery/rule-26-duty-to-disclose-general-provisions-governing-discovery/)

[27] 6.10, gTLD Applicant Guidebook ICANN, 6-6. (https://newgtlds.icann.org/en/applicants/agb); (https://www.icann.org/resources/reviews/specific-reviews/cct)

[28] 12(b)(6), Federal Rules of Civil Procedure; DEFENDANT INTERNET CORPORATION FOR ASSIGNED NAMES AND NUMBERS’ MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT OF MOTION TO DISMISS FIRST AMENDED COMPLAINT, 6. (http://domainnamewire.com/wp-content/icann-donuts-motion.pdf)

[29] DEFENDANT INTERNET CORPORATION FOR ASSIGNED NAMES AND NUMBERS’ MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT OF MOTION TO DISMISS FIRST AMENDED COMPLAINT, 8. (http://domainnamewire.com/wp-content/icann-donuts-motion.pdf)

[30] PLAINTIFF RUBY GLEN, LLC’S OPPOSITION TO DEFENDANT INTERNET CORPORATION FOR ASSIGNED NAMES AND NUMBERS’ MOTION TO DISMISS FIRST AMENDED COMPLAINT; MEMORANDUM OF POINTS AND AUTHORITIES, 12.

[31] (https://archive.icann.org/en/accountability/frameworks-principles/legal-corporate.htm); Art. 1(c), Bylaws for ICANN. (https://www.icann.org/resources/pages/governance/bylaws-en)

[32] (http://leginfo.legislature.ca.gov/faces/codes_displaySection.xhtml?lawCode=CIV&sectionNum=1668); NOTICE OF AND EX PARTE APPLICATION FOR TEMPORARY RESTRAINING ORDER; MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT THEREOF, 24. (https://www.icann.org/en/system/files/files/litigation-ruby-glen-ex-parte-application-tro-memo-points-authorities-22jul16-en.pdf)

[33] 6.6, gTLD Applicant Guidebook ICANN, 6-4. (https://newgtlds.icann.org/en/applicants/agb)

[34] DEFENDANT INTERNET CORPORATION FOR ASSIGNED NAMES AND NUMBERS’ MEMORANDUM OF POINTS AND AUTHORITIES IN SUPPORT OF MOTION TO DISMISS FIRST AMENDED COMPLAINT, 18. (http://domainnamewire.com/wp-content/icann-donuts-motion.pdf)

[35] (https://www.icann.org/resources/pages/mechanisms-2014-03-20-en)

[36] AMENDED REPLY MEMORANDUM IN SUPPORT OF ICANN’S MOTION TO DISMISS FIRST AMENDED COMPLAINT, 4. (https://www.icann.org/en/system/files/files/litigation-dca-reply-memo-support-icann-motion-dismiss-first-amended-complaint-14apr16-en.pdf)

[37] 501(c)(3), Internal Revenue Code, USA. (https://www.irs.gov/charities-non-profits/charitable-organizations/exemption-requirements-section-501-c-3-organizations)

[38] Art. II, Public Technical Identifiers, Articles of Incorporation, ICANN. (https://pti.icann.org/articles-of-incorporation)

[39] (https://community.icann.org/display/alacpolicydev/At-Large+New+gTLD+Auction+Proceeds+Discussion+Paper+Workspace)

[40] (https://www.icann.org/policy); 4.3, gTLD Applicant Guidebook ICANN, 4-19. (https://newgtlds.icann.org/en/applicants/agb)

[41] 5, Internet Corporation for Assigned Names and Numbers, Financial Statements As of and for the Years Ended June 30, 2016 and 2015. (https://www.icann.org/en/system/files/files/financial-report-fye-30jun16-en.pdf)

[42] (http://www.theregister.co.uk/2016/07/28/someone_paid_135m_for_dot_web)

[43] (https://community.icann.org/display/CWGONGAP/Cross-Community+Working+Group+on+new+gTLD+Auction+Proceeds+Home)

[44] (https://www.icann.org/public-comments/new-gtld-auction-proceeds-2015-09-08-en)

[45] (https://www.icann.org/news/announcement-2-2016-12-13-en)

[46] (https://www.icann.org/news/announcement-2-2016-12-13-en)

[47] (https://www.icann.org/news/announcement-2-2016-12-13-en)

[48] (https://ccnso.icann.org/workinggroups/ccwg-charter-07nov16-en.pdf); (https://www.icann.org/news/announcement-2-2016-12-13-en)

[49] (https://www.icann.org/public-comments/new-gtld-auction-proceeds-2015-09-08-en)

[50] (https://community.icann.org/display/CWGONGAP/CCWG+Charter)

[51] 4.3, gTLD Applicant Guidebook ICANN, 4-19. (https://newgtlds.icann.org/en/applicants/agb)

[52] (https://newgtlds.icann.org/en/applicants/auctions/proceeds)

[53] (https://community.icann.org/pages/viewpage.action?pageId=63150102)

[54] (https://www.icann.org/news/announcement-2-2016-12-13-en)

CIS’ Efforts Towards Greater Financial Disclosure by ICANN

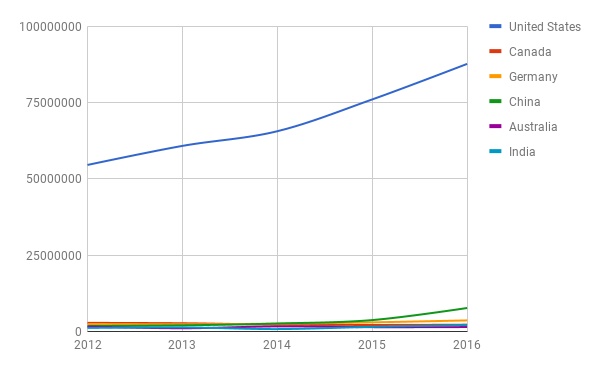

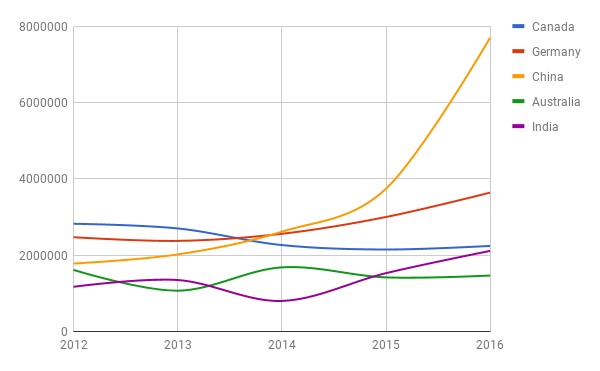

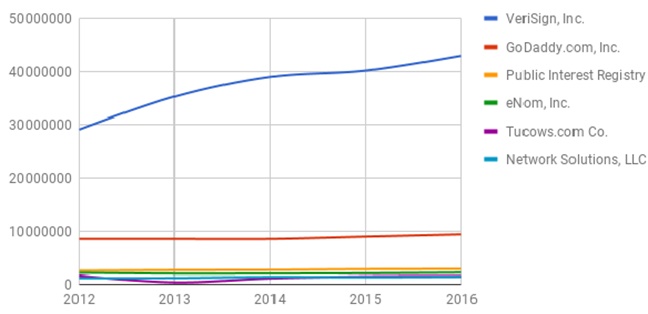

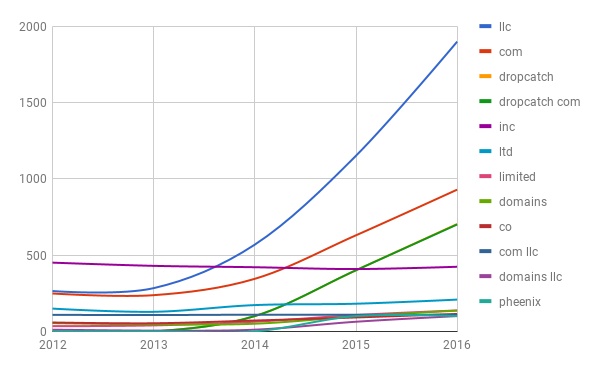

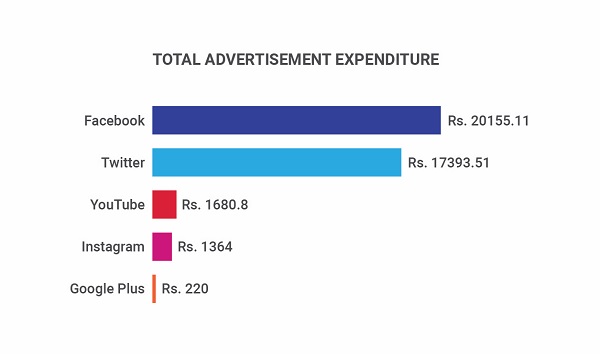

Consider the $135 million sale of .web,[1] the much-protested renewal of the .net agreement[2] and the continued annual increase in domain name registrations,[3] among other things. It is no surprise that transparency and accountability concerns persist within the ICANN community. CIS has been examining the functioning of ICANN's accountability mechanisms. As part of this effort, it has so far filed many requests under ICANN's Documentary Information Disclosure Policy (DIDP), seeking greater transparency about the organisation's sources of revenue.

1. Efforts towards disclosure of revenue break-up by ICANN

- 2014

- 2015

- 2017

2. The need for granularity regarding historical revenues

-----

1. Efforts towards disclosure of revenue break-up by ICANN

- 2014

In 2014, CIS' Sunil Abraham called for greater financial transparency from ICANN at both the Asia Pacific IGF and the ICANN Open Forum at the IGF. Later that year, ICANN India Head Mr. Samiran Gupta provided CIS with a list of ICANN's sources of revenue for financial year 2014. The list included payments from registries, registrars and sponsors, among others.[4] This was a big step for CIS and the Internet community, as ICANN had never before publicly disclosed any details of its granular income on request.

However, as no details of historical revenue had been provided, CIS filed a DIDP request in December 2014 seeking detailed disclosure of revenues for the years 1999 to 2014, similar to the 2014 report it had received.[5] It sought a list of the individuals and entities who had contributed to ICANN's revenues over that period.

In its response, ICANN stated that it possessed no documents in the format CIS had requested; that is, it had no reports breaking down domain name income and revenue received by each legal entity and individual.[6] It stated that, as the data for the years preceding 2012 were held on a different system, compiling reports from the raw data for those years would be time-consuming and overly burdensome. ICANN denied the request, citing the DIDP's defined condition for non-disclosure of requests that are excessive or overly burdensome.[7]

- 2015

In July 2015, CIS filed a request for disclosure of the raw data on granular income for the years 1999 to 2014.[8] ICANN again said that it would be a huge burden 'to access and review all the raw data for the years 1999 to 2014 in order to identify the raw data applicable to the request'.[9] However, it committed to preparing detailed reports on a go-forward basis, all of which would be uploaded to its Financials page.[10]

- 2017

In June 2017, to follow up on ICANN's commitment to granularity, CIS sought detailed reports of historical income and revenue contributions from domain names for FY 2015 and FY 2016.[11] In its reply, ICANN stated that the Revenue Detail by Source reports for those two years would be out by the end of July, and that the report for FY 2012 would be out by the end of September.[12]

2. The need for granularity regarding historical revenues

In 2014, CIS asked for disclosure of a list of ICANN's sources of revenue and of detailed granular income for the years 1999 to 2014. ICANN provided the first but cited difficulty in preparing reports for the second. In 2015, CIS again sought detailed reports of historical granular revenue for the same period, and ICANN again denied disclosure, claiming that handling the raw data for those years would be burdensome. However, as ICANN had agreed to publish detailed reports for future years, CIS recently asked for the publication of reports for FYs 2012, 2015 and 2016. Reports for these three years were uploaded according to the timeline ICANN provided.

CIS appreciates ICANN's cooperation with its requests and is grateful for its efforts to make the reports for FYs 2012 to 2016 available (and to continue doing so). However, it is important that detailed information on historical revenue and income from domain names for the years 1999 to 2014 be made publicly available. It is also crucial that consistent accounting and disclosure practices be adopted and made known to the Community, so that statements such as Detail Revenue by Source and Lobbying Disclosures, among many others, are not omitted from the annual reports, as has evidently happened for the years preceding 2012. This is necessary to maintain financial transparency and accountability, because an organisation's sources of revenue can help the Community that depends on it understand why it functions the way it does.

It will also allow more informed discussions about problems that the Community has faced in the past and continues to struggle with. For example, while examining problems such as ineffective market competition or biased screening processes for TLD applicants, among others, this data can be useful in assessing the long-term interests, motives and influences of different parties involved.

[1] https://www.icann.org/news/announcement-2-2016-07-28-en

[2] Report of Public Comment Proceeding on the .net Renewal. https://www.icann.org/en/system/files/files/report-comments-net-renewal-13jun17-en.pdf

[3] https://www.icann.org/resources/pages/cct-metrics-domain-name-registration-2016-06-27-en

[4] https://cis-india.org/internet-governance/blog/cis-receives-information-on-icanns-revenues-from-domain-names-fy-2014

[5] DIDP Request No. 20141222-1, 22 December 2014. https://cis-india.org/internet-governance/blog/didp-request-2

[6] https://www.icann.org/en/system/files/files/cis-response-21jan15-en.pdf

[7] Defined Conditions for Non-Disclosure: information requests (i) which are not reasonable; (ii) which are excessive or overly burdensome; (iii) complying with which is not feasible; or (iv) which are made with an abusive or vexatious purpose or by a vexatious or querulous individual. https://www.icann.org/resources/pages/didp-2012-02-25-en

[8] DIDP Request No. 20150722-2, 22 July 2015. https://cis-india.org/internet-governance/blog/didp-request-12-revenues

[9] https://www.icann.org/en/system/files/files/didp-response-20150722-2-21aug15-en.pdf

[10] https://www.icann.org/en/system/files/files/didp-response-20150722-2-21aug15-en.pdf; https://www.icann.org/resources/pages/governance/financials-en

[11] DIDP Request No. 20170613-1, 14 June 2017.

[12] https://www.icann.org/en/system/files/files/didp-20170613-1-marda-obo-cis-response-13jul17-en.pdf

Why Presumption of Renewal is Unsuitable for the Current Registry Market Structure

With the recent renewal of the .net legacy Top-Level Domain (TLD), the question of the appropriate method of renewal is worth reconsidering. A presumption of renewal for registry agreements means that the agreement carries a reasonable expectation of renewal at the end of its contractual term. Under the current base registry agreement, the agreement is renewed for successive 10-year periods on expiry of the initial (and each successive) term, unless the operator commits a fundamental and material breach of its covenants or breaches its payment obligations to ICANN.

A Comparison of Legal and Regulatory Approaches to Cyber Security in India and the United Kingdom

This report compares laws and regulations in the United Kingdom and India to see the similarities and disjunctions in cyber security policy between them. The first part of this comparison will outline the methodology used to compare the two jurisdictions. Next, the key points of convergence and divergence are identified and the similarities and differences are assessed, to see what they imply about cyber space and cyber security in these jurisdictions. Finally, the report will lay out recommendations and learnings from policy in both jurisdictions.

Read the full report here

Breach Notifications: A Step towards Cyber Security for Consumers and Citizens

Electronic data processing has given societies many opportunities for improvement that would not otherwise have been possible. Low barriers to market entry for new innovators have led to a flood of applications and automations that could improve the lives of citizens and consumers, as well as government operations. The growing prevalence of electronic hardware and programmable software across society and industry, combined with the intricate value chains of international communications networks, device and equipment markets and software markets, has created many opportunities for economic, social and public activity. But it has also brought a number of specific problems pertaining to consumer rights.

Counter Comments on TRAI's Consultation Paper on Privacy, Security and Ownership of Data in Telecom Sector

The submission is divided into three main parts. The first part, 'Preliminary', introduces the document. The second part, 'About CIS', is an overview of the organisation. The third part contains the 'Counter Comments' on the Consultation Paper, taking into account the submissions made by other stakeholders.

Download the full submission here

GDPR and India: A Comparative Analysis

The post is written by Aditi Chaturvedi and edited by Amber Sinha

High administrative fines in case of non-compliance with GDPR provisions are a driving force behind these concerns as they can lead to loss of business for various countries such as India.

To a large extent, the future of business will depend on how well India responds to the regulatory changes unfolding globally. India will have to assess her preparedness and make convincing changes to retain her status as a dependable processing destination. This document gives a brief overview of the data protection provisions of the Information Technology Act, 2000, followed by a comparative analysis of the key provisions of the GDPR and of the Information Technology Act and the Rules notified under it.

Breeding misinformation in virtual space

The phenomenon of fake news has received significant scholarly and media attention over the last few years. In March, Sir Tim Berners-Lee, inventor of the World Wide Web, called for a crackdown on fake news, stating in an open letter that "misinformation, or fake news, which is surprising, shocking, or designed to appeal to our biases, can spread like wildfire."

Gartner, which annually predicts what the next year in technology will look like, highlighted ‘increased fake news’ as one of its predictions.

The report states that by 2022, "majority of individuals in mature economies will consume more false information than true information." Due to its wide popularity and reach, social media has come to play a central role in the fake news debate.

Researchers have suggested that rumours penetrate deeper within a social network than outside it, which indicates how susceptible this medium is. Social networks such as Facebook and communities on messaging services such as WhatsApp groups provide the perfect environment for spreading rumours. Information received via friends tends to be trusted, and online networks allow individuals to transmit information to many friends at once.

To understand the recent phenomenon of fake news, it is important to recognise that misinformation and propaganda have existed for a long time. Historical examples of fake news go back centuries: before he became Roman Emperor, Octavian ran a disinformation campaign against Marcus Antonius to turn the Roman populace against him.

The advent of the printing press in the 15th century led to widespread publication, but there were no standards of verification or journalistic ethics. Andrew Pettegree writes in The Invention of News that news reporting in the 16th and 17th centuries was full of portents about "comets, celestial apparitions, freaks of nature and natural disasters."

In India, the immediate cause of the 1857 War of Independence was rumours that the bones of cows and pigs were mixed with flour and used to grease the cartridges used by the sepoys.

In the lead-up to the Second World War, radio emerged as a strong medium for disseminating disinformation and was used by the Nazis and other Axis powers. More recently, the "milk miracle" of the mid-1990s, in which idols of Ganesha were said to be drinking milk, was a popular fake news phenomenon. In 2008, rumours that the popular snack Kurkure was made of plastic became so widespread that PepsiCo, its manufacturer, had to publicly rebut them.

A quick survey we at the Centre for Internet and Society conducted for a forthcoming report, covering the different kinds of misinformation circulating in India, identified four kinds of fake news.

The first is a case of manufactured primary content. This includes instances where the entire premise on which an argument is based is patently false. In August 2017, a leading TV channel reported that electricity had been cut to the Jama Masjid in New Delhi for non-payment of bills. This was based on a false report carried by a news portal.

The second kind of fake news involves manipulating or editing primary content so as to misrepresent it as something else. This form of fake news is often seen with multimedia content such as images, audio and video. These first two forms tend to originate outside traditional media such as newspapers and television channels, and can often be traced back to social media and WhatsApp forwards.

However, such unverified stories are also picked up by traditional media. Further, there are instances where genuine content such as text and pictures is shared with false context and descriptions. Earlier this year, several dailies pointed out that an image shared by the ministry of home affairs, purportedly of the floodlit India-Pakistan border, was actually an image of the Spain-Morocco border. In this case, the image was not doctored, but the accompanying information was false.

Third, more complicated cases of misinformation involve primary content that is neither false nor manipulated, but whose facts are reported out of context. Most misinformation spread by mainstream media, which has more evolved systems of fact-checking, verification and editorial control, would tend to fall into this category.

Finally, there are instances where reporters fail to take the care needed to fully understand the issues before reporting. Such misrepresentations are often encountered in fields that require specialised knowledge, such as science and technology, law and finance. Although these forms of misinformation do not suggest mala fide intent, they can still prove quite dangerous in shaping erroneous opinions.

While the widespread dissemination of fake news contributes greatly to its effectiveness, its impact also owes much to the way it is designed to pander to our cognitive biases. Directionally motivated reasoning prompts people confronted with political information to process it with the aim of reaching a pre-decided conclusion, rather than assessing it dispassionately. This in turn increases susceptibility to confirmation bias, disconfirmation bias and the prior attitude effect.

Fake news is also linked to the idea of “naïve realism,” the belief people have that their perception of reality is the only accurate view, and those in disagreement are necessarily uninformed, irrational, or biased. This also explains why so much fake news simply does not engage with alternative points of view.

A well-informed citizenry and institutions that provide good information are fundamental to a functional democracy. The use of the digital medium for fast, unhindered and unchecked spread of information presents a fertile ground for those seeking to spread misinformation. How we respond to this issue will be vital for democratic societies in our immediate future. Fake news presents a complex regulatory challenge that requires the participation of different stakeholders such as the content disseminators, platforms, norm guardians which include institutional fact checkers, trade organisations, and “name-and-shaming” watchdogs, regulators and consumers.

AI and Healthcare in India: Looking Forward

Edited by Roshni Ranganathan

The Roundtable brought together participants from across the AI and healthcare spectrum, ranging from medical practitioners and medical startups to think tanks. It discussed various questions regarding AI and healthcare, with a special focus on India.

The Roundtable discussion began with the results of CIS's primary research on AI and healthcare. CIS identified three main uses of AI in healthcare: supporting diagnosis, early identification and imaging diagnosis. The benefits identified were faster diagnosis, personalised treatment and bridging the manpower gap. Questions of medical ethics, privacy, regulatory certainty, social acceptance and trust were identified as barriers to the use of AI in healthcare. The cases chosen for study were IBM Watson (used by Manipal Hospitals in India), DeepMind (used by the NHS in the UK), Google Brain (used by Aravind Healthcare in India) and SigTuple (an AI-based pathologist's assistant). CIS wished to explore the ethical side of this topic, the question of public interest and the need to protect patients from harm. The session was then opened for discussion on the following issues.

Artificial Intelligence - Literature Review

Edited by Amber Sinha and Udbhav Tiwari; Research Assistance by Sidharth Ray

With origins dating back to the 1950s, Artificial Intelligence (AI) is not necessarily new. However, interest in AI has been reignited over the last few years by its growing number of real-world implications.

The rapid and dynamic pace of AI's development has made its future path difficult to predict and is allowing it to alter our world in ways we have yet to comprehend. As a result, law and policy have stayed one step behind the development of the technology.

Understanding and analyzing existing literature on AI is a necessary precursor to subsequently recommending policy on the matter. By examining academic articles, policy papers, news articles, and position papers from across the globe, this literature review aims to provide an overview of AI from multiple perspectives.

The structure taken by the literature review is as follows:

- Overview of historical development

- Definitional and compositional analysis

- Ethical & Social, Legal, Economic and Political impact and sector-specific solutions

- The regulatory way forward

This literature review is a first step towards understanding the existing paradigms and debates around AI, before narrowing the focus to more specific applications and, subsequently, policy recommendations.

Should Aadhaar be mandatory?

The article was published in Deccan Herald on December 9, 2017.

Getting their day in court to hear interim matters is but a small victory in what has been a long and frustrating fight for the petitioners. In 2012, Justice K S Puttaswamy, a former Karnataka High Court judge, filed a petition before the Supreme Court questioning the validity of the Aadhaar project due to its lack of legislative basis (the Aadhaar Act was passed by Parliament in 2016) and its transgressions on our fundamental rights.

Over time, a number of other petitions also made their way to the apex court challenging different aspects of the Aadhaar project. Since then, five different interim orders of the Supreme Court have stated that no person should suffer because they do not have an Aadhaar number.

Aadhaar, according to the Supreme Court, could not be made mandatory to avail benefits and services from government schemes. Further, the court has limited the use of Aadhaar to only specific schemes, namely LPG, PDS, MNREGA, National Social Assistance Program, the Pradhan Mantri Jan Dhan Yojna and EPFO.

The then Attorney General, Mukul Rohatgi, in a hearing before the court in July 2015 stated that there is no constitutionally guaranteed right to privacy. But the judgement by the nine-judge bench earlier this year was an emphatic endorsement of the constitutional right to privacy.

In the course of a 547-page judgement, the bench affirmed the fundamental nature of the right to privacy, reading it into the values of dignity and liberty.

Yet months after the judgement, the Supreme Court has failed to hear arguments in the Aadhaar matter. The reference to a larger bench and subsequent deferrals have since delayed the entire matter, even as the government has moved to make Aadhaar mandatory for a number of government schemes.

At this point, up to 140 government services have made linking with Aadhaar mandatory for availing them. Chief Justice of India Dipak Misra has promised a constitution bench this week, which is likely to look only into interim matters, such as a stay on the deadline for Aadhaar linking. The hearings for final arguments are likely still some months away. The court's refusal to adjudicate on this issue has been extremely disappointing, and a grave disservice to its intended role as the champion of individual rights.

It is worth noting that the interim orders by the Supreme Court that no person should suffer because they do not have an Aadhaar number, and limiting its use only to specified schemes, still stand.

However, the Aadhaar Act allows the use of Aadhaar by both private and public parties and permits making it mandatory for availing any benefits, subsidies and services funded by the Consolidated Fund of India. The spate of services for which Aadhaar has been made mandatory since the Act was passed suggests that, in the government's view, the Aadhaar Act has in effect nullified the Supreme Court's orders.

Union Law Minister Ravi Shankar Prasad stated as much in so many words in the Rajya Sabha in April. This view is erroneous. While acts of Parliament can supersede previous judicial orders, they must do so either expressly, through a statement in the objects of the Act, or by implication, where the two are mutually incompatible. In this case, the Aadhaar Act permits government authorities to make Aadhaar mandatory but does not impose a clear duty to do so.

Therefore, reading the orders and the legislation together leads one to the conclusion that all instances of Aadhaar being made mandatory under the Aadhaar Act are void.

The question may be more complicated where Aadhaar has been made mandatory through other legislation, such as the Prevention of Money Laundering Act, which clearly mandates the linking of Aadhaar numbers rather than merely allowing it. However, despite repeated appeals by the petitioners, the court has so far refused to engage with the legality of such instances.

How may the issues finally be resolved? When the court deigns to hear final arguments, the Aadhaar case will be instructive in how the court defines the contours of the right to privacy. The right to privacy judgement, while instructive in its exposition of the different aspects of privacy, does not delve deeply into the question of what may be legitimate limitations on this right.

In one of the passages of the judgement, "ensuring that scarce public resources are not dissipated by the diversion of resources to persons who do not qualify as recipients" is mentioned as an example of a legitimate incursion into the right to privacy. However, it must be remembered that none of the opinions in the privacy judgement were majority judgements.

Therefore, in future cases, lawyers and judges must parse the various opinions to arrive at an understanding of the majority view, supported by five or more judges. While the privacy judgement was a landmark one, its actual impact on the rights discourse and on matters like Aadhaar will depend heavily on how judges choose to interpret it.

It Hurts Them Too

Srinagar, J&K: For Mahender*, a member of the Central Reserve Police Force (CRPF) posted in Srinagar for the last two years, the internet has been a way to feel virtually close to his wife and children in Bihar, nearly 1,900 km away. After duty every day, he finds a quiet corner and video-calls his wife, who makes sure their two children are beside her. "We discuss how our day went. Most of our conversations revolve around the kids, their schooling and food, and about my parents who live near our house," says Mahender, who identified himself only by his first name.

However, Mahender and thousands of security personnel like him posted in the Kashmir Valley have not always found this connectivity reliable, thanks to the government's frequent internet shutdowns, mobile data cuts and social media bans.

Jammu & Kashmir has faced 55 internet shutdowns between 2012 and 2017, as recorded by the Software Freedom Law Centre. The administration justifies this crackdown by citing "law-and-order situations" that occur during encounters of security forces with militants and, later, when protests and marches are carried out by civilians during militants' funerals.

Hizbul Mujahideen commander Burhan Wani was killed by security forces and police on 8 July 2016, triggering a six-month-long "uprising" among civilians in Kashmir. Immediately after the shootout, security agencies shut the internet down. With 55 internet shutdowns in 2017 itself, it is now something of a standard practice in Kashmir to block social media or the internet in a district or the entire Valley each time there is an encounter. Shutdowns are also a recurring precaution against protests on Independence Day and Republic Day every year.

Security forces and police are not untouched by these shutdowns though. There are 47 CRPF battalions posted in the Kashmir region. “Our jawans experience difficulties during internet bans as they are not able to communicate with their families and friends as frequently as they do when internet is working,” says Srinagar-based CRPF Public Relations Officer Rajesh Yadav.

The J&K police, who are at the forefront of quelling protests and maintaining law & order in the Valley with a strength of nearly 100,000, also suffer. There have been growing instances of clashes between the Kashmiri police and protesters who believe their home force is being brutal during crowd control. The policemen have had to hide or operate in plain clothes. A senior police officer in Srinagar, who does not want to be named, says, “Our families are worried about our well-being when we are dealing with frequent agitations. In such a situation, when there is a ban, we find it difficult to stay in touch with our families.”

More dangerously, internet bans also hit the official communication of police in action. Their offices are equipped with BSNL landline connections, which are rarely shut down, and they usually communicate by wireless; but for mobile internet, like the rest of the state, most of them depend on private internet service providers, which offer better connectivity. A senior police officer who deals with counter-insurgency in Kashmir speaks of the impact of cutting off mobile data. "We have our own WhatsApp groups for quick official communication. We use broadband in offices only and can't take it to sites of counter-insurgency operations."

Yadav of the CRPF says, “While we have several effective means of communication for official purposes, social media is one that has accentuated our communication network. During internet bans, our work is not entirely hampered, but there is a little bit of pinch, since that speed and ease of working is not there.” Nevertheless, he defends the ban, insisting that Facebook and WhatsApp are handy tools for people to "flare up" the situation and "mobilise youths" during protests. "So, it becomes a compulsion for the administration to impose the ban."

In the last few years, counter-insurgency forces have created social media monitoring and surveillance cells. They say this is to match the extremists, including those in Pakistan, who now use services like Telegram, Facebook and WhatsApp instead of phones, which can be tapped. It is also to keep an eye on suspected rumour-mongers and propagandists. For instance, 22-year-old Burhan Wani had gained the attention of security forces precisely because of the way he used his huge following, amassed through Facebook posts and gun-toting pictures, to inspire young Kashmiris to militancy.

“There is always monitoring and surveillance. If militants are using it, then they are within the loop,” says Yadav.

There is widespread public outrage against the state government and the agencies that impose frequent net bans in Kashmir, but the CRPF official says the bans also hamper the force's own attempts to build an image and do public relations in Kashmir. "We promote and highlight programmes like Civic Action and Sadhbhavana online, and that's not possible when there's no social media."

"The public's criticism of the ban is justified," the counter-insurgency official says. But the forces are compelled to impose bans in situations such as the recent scare around braid chopping, which was caused by "rumour-mongering by persons with vested interests". Kashmiri civil society had suggested that the police keep the internet running so they could issue online clarifications debunking the rumours, but it was not to be.

"The internet has made it possible to identify culprits while sitting in an office. But we have to shut it down in case of communal tensions which have the tendency to engulf the whole state,” says the senior cop. “When we have no option left, we go back to traditional human intelligence.”

*Name changed to protect identity.

Mir Farhat is a journalist from Jammu & Kashmir, with an experience of reporting politics, conflict, environment, development and governance issues. His primary interests lie in reporting environment and development. He is a member of 101Reporters.com, a pan-India network of grassroots reporters.

Shutdown stories are the output of a collaboration between 101 Reporters and CIS with support from Facebook.

Digital Banking Dreams: Interrupted

Srinagar, J&K: Inside a buzzing branch of the Jammu & Kashmir Bank in Srinagar, 27-year-old Falak Akhtar is busy processing routine transactions. A member of the technical team, the young banker says that almost half of the branch's customers have registered their accounts with the M-Pay mobile app. However, the app, built for convenience, is not always dependable. As she attends to the rush of customers inside the branch, Falak points out that whenever there is an internet shutdown, the app is of no use. "The customers have to resort to traditional banking," she says.

Every day, Falak’s branch executes 53% of its transactions online. “If the customers do online transactions, the cost per transaction for the bank is only Rs 7. But every time an internet ban is enforced in Kashmir, the cost of each transaction goes up to Rs 54,” she says.

Given that internet shutdowns in Kashmir are usually accompanied by an imposition of a physical curfew, simply going to the bank can be impossible. Ironically, it is during political tensions that Kashmiris, stuck indoors due to curfew or avoiding the streets to keep safe, need internet banking the most.

Zahid Maqbool, an information officer with the J&K government, regularly uses the J&K Bank's mobile app to transfer money and make transactions. "But last year, when my brother studying outside the state needed money, I couldn't use the app because of the internet ban," he says. "During the tense situation and curfew, I took a huge risk to reach the branch in Tral, where only two employees were present." It took him around three hours to transfer Rs 12,000 ($185) to his brother's account "because the bank's internet line was also running very slow".

Showkat (name changed), manager of an ICICI Bank branch in Srinagar, says the branch uses internet services from BSNL and Airtel on normal days. "Our branch has 20,000 customers, and around 40% of them use digital banking through an app called I-Mobile," he says. Last year, as Kashmir plunged into six months of political unrest after the killing of Hizbul Mujahideen commander Burhan Wani on July 8, the internet was snapped immediately and remained suspended for several months. The bank could not process online transactions throughout the summer. "And whenever there was a relaxation in curfew or strike, there used to be a huge rush of customers in the branch," Showkat says.

“Whenever an internet ban is on in Kashmir, we suffer huge losses because we don’t manage to get new account holders,” says Showkat. “Since we run most of our operations online, the ban blocks the account holder from accessing the net and uploading scanned ID proofs.”

On average, his branch opens 100 accounts per month. "But last year, amid the internet ban, we managed to open only 40 accounts in six months," he says. To process these account-opening applications, the bank had to courier the forms to Chandigarh, its nerve centre in North India. Opening an account takes 24 hours online, but here the forms took six days to reach Chandigarh, and processing them took another eight days.

To overcome hurdles faced during last year’s internet gag, the bank used the Indian Army’s VSAT network on lease. Showkat says such a line can be used for commercial purposes after clearance from the Army and a payment of Rs 15,000 per month. "Our ATMs were connected through that lease line," he says. "But the problem was that the gag had slowed down the VSAT as well.”

The slow-speed internet hampered cash withdrawals from ATMs, which created quite a furore. “The already frustrated customers started shouting that the bank employees were cheats, that we were irresponsible. It is very difficult to make them understand the technical aspects of it,” he says.

Although banks suffer during frequent internet gags, their plight is often overshadowed by the bigger political crisis in Kashmir. What's clear is that disrupted banking, fee payments, purchases and withdrawals, all severely cripple the everyday life of Kashmiris.

In 2016, angry customers, barred from e-banking due to internet clampdown, thronged banks after months, demanding they be given some respite on EMIs (monthly loan repayments) and other banking schemes. An official from the branch of a nationalised bank outside Srinagar says that when they refused to entertain such requests on procedural grounds, the customers entered into heated exchanges.

Showkat says that customers who had taken loans were neither able to repay the installments online, nor were they able to visit the branch because of unrest. “These customers then end up having to bear the high interest rate, and some of them had to face penalties.”

Mudasir Ahmad, the owner of a Kashmir Art Emporium in central Kashmir’s Budgam, says that he had taken a loan of Rs 40 lakh ($62,400) from J&K Bank as capital for his handicraft business, but he missed seven loan installments last summer due to the internet clampdown. “I usually pay my loan installments through e-banking. Last year, when the internet was not working, I had to visit the bank to repay it. There are such long queues. It took me a whole day last year to pay one installment, which I otherwise pay within minutes through e-banking.”

Digital banking was introduced in Kashmir a few years ago in an effort to reduce footfall in banks and increase online transactions. Online banking through cards and apps was hailed as a step towards a cashless economy. Abdul Rashid, a relationship executive at a State Bank of India branch in Srinagar, says, “But because of the internet gag at most times, we are not able to be a part of it.”

Safeena Wani is an independent journalist from Kashmir. Her work has appeared in Al-Jazeera, Kashmir Reader and other regional publications. She is a member of 101Reporters.com, a pan-India network of grassroots reporters.

Shutdown stories are the output of a collaboration between 101 Reporters and CIS with support from Facebook.

Amid Unrest in the Valley, Students See a Dark Wall

Srinagar, J&K: On November 18, Srinagar lost 3G and 4G connectivity after a militant and a sub-inspector of the Jammu & Kashmir police force were killed, and another militant was captured alive, in a brief encounter on the outskirts of the city, near Zakoora crossing. District authorities said data connectivity was snapped to “maintain law and order”.

Students in Srinagar’s SPS Library. Picture Courtesy: Aakash Hassan

But to Jasif Ayoub, an aspiring chartered accountant, it was an obstruction to his exam preparations. Unable to access lectures and texts online, Ayoub was perturbed. He had moved from Anantnag in south Kashmir to Srinagar precisely to have easy access to the vast pool of information on the World Wide Web. “My hometown witnesses internet shutdowns very frequently. That is why I moved to live with relatives in Srinagar to prepare for my exams. But the internet speed here too is getting worse by the day,” says Ayoub.

The internet is usually the first administrative casualty whenever a law and order situation arises in the Kashmir Valley, which has been restive and agitated for the last two decades. Despite the frequency of shutdowns, the state still does not issue prior warnings or offer emergency connectivity measures. Residents know the pattern now: mobile internet and SMS are the first to go down, and broadband and other leased-line service providers follow.

J&K tops the list of Indian states with the most internet shutdowns, with 27 between 2012 and 2017, according to internetshutdowns.in, run by the Software Freedom Law Centre. Curbs on the internet have risen sharply this year, with over 30 shutdowns until November 22. Government authorities who issue and implement these bans say it is the only way to undercut the power of social media in organising movements and resistance. The prime example is Burhan Wani, the 21-year-old Hizb-ul-Mujahideen commander who had used his Facebook account to popularise and justify militant resistance. Wani’s death set off protests across the Valley, and the state responded by snapping internet services on prepaid mobile networks for about six months. For four months, there was no internet access on postpaid mobile networks either. These have been the longest bans so far. However, day-long, hour-long and even week-long periods without connectivity are alarmingly common.

The incessant disruption of internet services prevents students from accessing online education resources. Class IX student Haiba Jaan in Srinagar depends on lectures from Khan Academy, an online learning platform, to understand many concepts. A resident of Hyderpora in Srinagar, Haiba points to the iPad in her hand. “This is the best way of learning,” she says. “I was not satisfied with my teachers in school or tuition classes. I found studying on the internet quite useful. But the problem with that is the regular internet shutdowns.” Her parents got a postpaid broadband connection the previous year to help Haiba. “But even that gives up many times during total internet shutdowns,” says Haiba.

In May this year, the government suspended the use of 22 social media and messaging platforms in Kashmir for a month. Skype was one of the messaging services banned. This caused great trouble for Mehraj Din. Shortlisted for a summer programme in Istanbul, Turkey, this scholar of Islamic Studies at Kashmir University had to appear for the final interview via Skype. “The ban could have ended all my chances of getting selected had the organisers not agreed to an audio interview considering the ground situation here,” says Mehraj, who is currently compiling his dissertation for the university. “I have a deadline to meet, but repeated shutdowns have affected my work,” he says. “This is a punishment from the State.”

Full libraries, half studies

When home and mobile internet connections are snapped, the state government's e-learning initiative in public libraries provides some respite. Mehrosha Rasool wants to secure an MBBS seat through the NEET competitive exam. She visits the SPS library in Srinagar religiously to access the study material that has been downloaded and made available on computers. The 17-year-old resident of Nishat in Srinagar says libraries are useful since one never knows how long the internet services at home will stay stable. Irshad Ahmad, another student utilising the facilities at SPS library, says he moved to Srinagar from Pattan town of north Kashmir because "this facility of accessing education material is not available at the library in my tehsil."

Most prominent libraries in Srinagar have computers and tablets for students. “But the rooms often become overcrowded, as hundreds of students have registered at the libraries for internet facilities,” says Mehrosha.

Schools in the Valley, meanwhile, rely on traditional means in the absence of e-learning systems. Javaid Ahmad Wani, a political science teacher from south Kashmir’s Anantnag, believes that frequent and sudden school closures during periods of unrest leave so little time in the year to complete even the basic syllabus that supplementary e-learning is a distant possibility. Even when teachers and students do have access to these resources, internet shutdowns make them unreliable. Teachers and schools therefore stick to conventional means. Javaid admits that he himself has lost opportunities to internet shutdowns. “I could not submit the form for the main exam of the J&K public service last year because there was no Internet,” he says.

Curbs pinch civil service aspirants

Many civil service aspirants depend on the internet for their preparation. Anees Malik, a resident of Shopian, is preparing for the civil service exams. “I cannot afford coaching, so I rely on the internet,” he says, especially for mock exams and previous question papers. “In such a situation, losing connectivity almost every other week is the worst thing to happen.”

Sakib Wani, a Kupwara resident who is currently studying chemistry in Uttarakhand, notices a marked indifference in Kashmir to using online resources. “Those applying for scholarships and pursuing higher education may be using it, but not to the extent that students in other states of India do,” Sakib says. He believes that repeated internet bans could be one reason students do not opt for online educational resources. With colleges and schools shut for weeks during conflict periods, the internet could have been a great way to continue education, both formally and on one's own, but the repeated shutdowns have closed that door too.

Aakash Hassan is a Srinagar-based freelance writer and a member of 101Reporters.com, a pan-India network of grassroots reporters. He has reported on conflict, environment, health and other issues for different publications across India.

Shutdown stories are the output of a collaboration between 101 Reporters and CIS with support from Facebook.

Online or Offline, Protest Goes On

Srinagar, J&K: Ahead of the Srinagar parliamentary by-polls held on 9 April 2017, the Jammu & Kashmir state government suspended mobile data services to prevent protests around the election. The constituency went to polls with strict restrictions on movement, and with no access to mobile internet. As soon as the electoral staff reached their respective polling booths, however, there were protests. People at dozens of locations in central Kashmir’s Budgam district began to gather to demonstrate against the central and state governments, which they believed had not safeguarded Kashmiri interests.

Faizan, a 12-year-old schoolboy, was killed in the Dalwan shooting

Abbas, 21, was one of the victims of the shooting in Dalwan

Abbas’ home in Dalwan

The school in Dalwan where the shooting occurred

Picture Courtesy: Junaid Nabi Bazaz

In Dalwan, a picture-postcard village atop a hill 35 km from Budgam town, no votes were cast: the polling officers fled the station, and the paramilitary forces and police shot at protesters. Two people, the 21-year-old son of a policeman and a 12-year-old schoolboy, died on the spot.

People of Dalwan have voted in droves in every parliamentary, legislative and local body election, even on occasions when much of Kashmir boycotted the polls. But in April, residents said they were fed up with legislators not working to ensure uninterrupted power, water supply, concrete roads, or even a permanent doctor at the village's only dispensary. So a village that had never protested or produced any militants in the last 30 years of uprisings in the Kashmir Valley erupted in protest that election day. The cemetery in which the two killed civilians are buried has since been renamed Martyr’s Graveyard.

Bazil Ahmad, a resident of Dalwan, says that nothing could have prevented the protests that day. “We protested against state, it was a spontaneous response,” says 22-year-old Ahmad who threw his first stones that day. “If the government believes that an internet blockade could prevent protests, they’re living in a fool’s paradise.” He sees the internet only as a free platform to express his anger and disappointment. “The actual trigger for the anger comes from the denial of rights and state aggression, not because of the internet,” says Ahmad.

As news of the killings spread to neighbouring villages by word of mouth, residents there protested too. Journalists in these villages updated their newsrooms. Within a few days, all newspapers in Kashmir carried news of eight deaths, scores of injuries, and an appalling 6.5% voter turnout in Budgam and Ganderbal districts.

After the ban was lifted, videos captured on polling day were posted on Facebook, Twitter and WhatsApp. One of them showed Farooq Dar, a voter returning from the polling booth, tied to the front bumper of a military vehicle as it patrolled villages. A paper bearing his name was tied to his chest, and a soldier announced on the loudspeaker, “Look at the fate of the stonepelter.” The video caused an international uproar. The armed forces were accused of using a civilian as “a human shield”, which pushed the army to hold an inquiry and the police to lodge an FIR.

After these videos emerged, the government on April 26 officially banned 22 social media sites and apps, including Facebook, WhatsApp and Twitter, for over a month. Once again, it seemed to have little effect on the protests – and protestors.

Sajad, who has been throwing stones at the armed forces for the past eight years, says, “The government is miscalculating the link between internet use and the occurrence of protests.” The 28-year-old refers to the protests using the Kashmiri phrase kani jung, loosely translated as ‘stone battle’, which to him conveys a revolutionary zeal. Youths like Sajad who participate in the protests insist that they are provoked each time by an instance of human rights violation, which compounds the long experience of militarisation, the aspiration for “azadi”, and conflict in Kashmir. Internet shutdowns do nothing to remove this trigger, he says, and sometimes heighten their anger.