AI for Good

The report was edited by Elonnai Hickok.

The workshop was aimed at examining the current narratives around AI and imagining how these may transform with time. It raised questions about how we can build an AI for the future, and traced the implications relating to social impact, policy, gender, design, and privacy.

Methodology

The rationale for conducting this workshop at a design festival was to ensure a diverse mix of participants. The participants came from varied educational and professional backgrounds and had different levels of understanding of technology. The workshop began with a discussion of existing applications of artificial intelligence and how people interact and engage with them on a daily basis. This was followed by an activity in which the participants were given a form and asked to conceptualise their own AI application that could be used for social good. They were asked to think about a problem that they wanted the AI application to address, the ways in which it would solve that problem, and who would use the application. The form prompted participants to provide details of the AI application in terms of its form, colour, gender, visual design, and medium of interaction (voice/text). This was intended to nudge the participants into thinking about the characteristics of the application and how these would contribute to its overall purpose. The form was structured and designed to enable participants to both describe and draw their ideas.

The next section of the form gave them multiple pairs of principles, and they were asked to choose one principle from each pair. These were conflicting options such as ‘Openness’ or ‘Proprietary’, and ‘Free Speech’ or ‘Moderated Speech’. The objective of this section was to illustrate how a perceived ideal AI that satisfies all stakeholders can be difficult to achieve, and that AI developers may at times face a choice between profitability and user rights.

Participants were asked to keep their responses anonymous. These responses were then collected and discussed with the group. The activity led the participants to discuss the principles mentioned in the form. Questions about where the input data to train the AI would come from and what type of data the application would collect were also discussed. The responses were used to derive implications for gender, privacy, design, and accessibility.

Responses

Analysis

Although the responses were varied, a few key similarities and observations emerged.

Participants’ Familiarity with AI

The participants’ understanding of AI was based on what they had read and heard from various sources. While discussing examples of AI, the participants showed familiarity not just with physical manifestations of AI such as robots, but also with AI software. However, when asked to define an AI, the most common explanations were bots, software, and the use of algorithms to make decisions using large amounts of data. The participants were optimistic about the way AI could be used for social good. However, some of them expressed concern about the implications for privacy.

Perception of AI Among Participants

With the workshop, our aim was to have the participants reflect on their perception of AI based on their exposure to the narratives around AI by companies and the government.

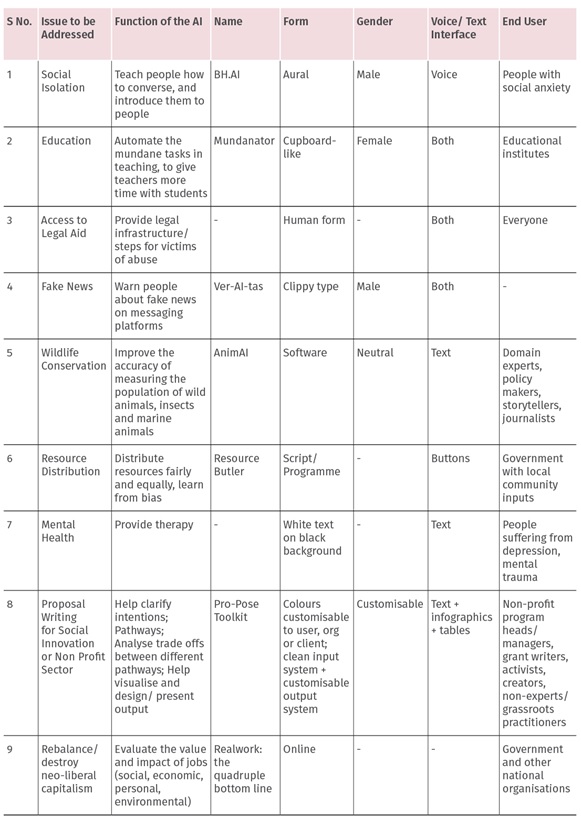

The participants were given the brief to imagine an AI that could solve a problem or be used for social good. Most participants considered AI to be a positive tool for social impact. It was seen as a problem solver. The ideas conceptualised by the participants ranged from countering fake news and wildlife conservation to resource distribution and mental health. This brought into focus the range of areas that were seen as pertinent for an AI intervention. Most of the responses dealt with concerns that affect humans directly, the one aimed at wildlife conservation being the only exception.

When asked who would use the AI application, the participants named different stakeholders, such as individuals, non-profits, governments, and private companies, as the end users. However, the harms that might be caused by the use of AI by these stakeholders were not brought up during the discussion. For example, the idea of using AI for resource distribution did not take into consideration the fact that a government relying on existing biased datasets could distribute resources unequally. Several of the AI applications were conceptualised to work without any human intervention. For example, one of the ideas proposed was to use AI as a mental health counsellor, conceptualised as a chatbot that would learn more about human psychology with each interaction. It was assumed that such a service would be better than a human psychologist, who can be emotionally biased. Similarly, while discussing the idea of using AI to prevent the spread of fake news, the participant believed that an indication coming from an AI would have greater impact than one coming from a human. They believed that the AI could provide the correct information and prevent the spread of fake news. By discussing these cases, we were able to highlight that complete reliance on technology could have severe consequences.

Form and Visual Design of the AI Concepts

In most cases, the participants decided the form and visual design of their AI concepts keeping in mind their purpose. For instance, the therapy-providing AI mentioned earlier was envisioned as a textual platform, while a ‘Clippy-type’ add-on AI tool was thought of for detecting fake news. Most participants imagined the AI application to have a software form, while the legal aid AI application was conceptualised to have a human form. This revealed that the participants perceived AI to be both software and a physical device such as a robot.

Accessibility of the Interfaces

The purpose of including the type of interface (voice or text) while conceptualising the AI application was to push the participants towards thinking about accessibility features. We aimed to have the participants think about the default use of the interface, both in terms of language and accessibility. The participants, though cognizant of the need to reach a large number of users, preferred to have only textual input into the interface and did not anticipate the accessibility concerns this would raise.

The choices between access vs cost, and accessibility vs scalability, were also questioned by the participants during the workshop. They enquired about the meaning of the terms and discussed the difficulty of building an all-inclusive interface. Some of the responses consisted only of text inputs, especially for sensitive issues involving interactions, such as therapy or helplines. This exercise made the participants think about the end user as well as the ‘AI for all’ narrative. We added these questions to make the participants think about how the default ability, language, and technological capability of the user are taken for granted, and how simple features could help more people interact with the application. This discussion led to the inference that accessibility needs to be considered by design during the creation of the application, and not as an afterthought.

Biases Based on Gender

We intended for the participants to think about the inherent biases that creep into creating an AI concept. These biases were evident in choices such as picking identifiably male names, picking a male voice when the application needed to be assertive, or picking a female voice and name when it was dealing with school children. Most of the other participants either did not mention a gender or said that the AI could be gender neutral or changeable.

These observations are also revealing of the existing narrative around AI. Popular AI interfaces have been noted to exemplify existing gender stereotypes. For example, virtual assistants such as Siri, Alexa, and Cortana were given identifiably female names and default female voices. The more advanced AI, such as Watson, Holmes etc., were given identifiably male names and default male voices. Although these concerns have been pointed out by several researchers, there has yet to be a visible shift away from existing gender biases.

Concerns around Privacy

Though the participants were aware of the privacy implications of data-driven technologies, they were unsure of how their own AI concept could deal with questions of privacy. The participants voiced concerns about how they would procure the data to train the AI, but were uncertain about their own data processing practices. This included how they would store the data, anonymise the data, or prevent third parties from accessing it. For example, during the activity, it was pointed out to the participants that sensitive data would be collected in applications such as therapy provision, legal aid for victims of abuse, and assistance for people with social anxiety. In these cases, the participants stated that they would ensure that the data was shared responsibly, but did not consider the potential uses or misuses of this shared data.

Choices between Principles

This part of the exercise was intended to familiarise the participants with certain ethical and policy questions about AI, as well as to look at the possible choices that AI developers have to make. Along with discussing the broader questions around the form and interface of AI, we wanted the participants to also look at making decisions about the way the AI would function. The intent behind this component of the exercise was to encourage the participants to question the practices of AI companies, as well as to understand the implications of the choices made while creating an AI. As the language in this section was based on law and policy, we spent some time describing the terms to the participants. Although the options presented by us were neither exhaustive nor absolute extremes, we included this section to demonstrate the complexity of creating an AI that is beneficial for all. We intended for the participants to understand that an AI that is at once profitable to the company, free for people, accessible, privacy-respecting, and open source, though desirable, is difficult to achieve, because these goals may compete with one another and with interests such as profitability and scalability.

The participants were urged to think about how decisions regarding who can use the service, and how much transparency and privacy the company will provide, are also part of building an AI. Taking an example from the responses, we talked about how keeping the software closed and proprietary, in the case of AI applications such as one providing legal aid to victims of abuse, would deter the creation of similar applications. After the terms were explained, the participants mostly chose openness over proprietary software, and access over paid services.

Conclusion

The aim of this exercise was to understand the popular perception of AI. The participants had varied understanding of AI, but were familiar with the term. They also knew of the popular products that claim to use AI. Since the exercise was designed as an introduction to AI policy, we intended to keep questions around data practices out of the concept form. Ultimately, with this exercise, we, along with the participants, were able to look at how popular media sells AI as an effective and cheaper solution to social issues. The exercise also allowed the participants to understand certain biases related to gender, language, and ability. It also shed light on how questions of access and user rights should be addressed before the creation of a technological solution. New technologies such as AI are being featured as problem solvers by companies, the media, and governments. However, there is a need to also think about how these technologies can be exclusionary, can be misused, or can amplify existing socioeconomic inequities.