We need a better AI vision

The blog post by Arindrajit Basu was published by Fountainink on October 12, 2019.

The dawn of Artificial Intelligence (AI) has policy-makers across the globe excited. In India, it is seen as a tool to overleap structural hurdles and better understand a range of organisational and management processes while improving the implementation of several government tasks. Notwithstanding the apparent enthusiasm in the government and private sectors, the technological, infrastructural and financial capacity needed to develop these models at scale is still being built.

A number of policy documents with direct or indirect references to India’s AI future, which is to be powered by vast troves of data, have been released in the past year and a half. These include the National Strategy for Artificial Intelligence (which I will refer to as the National Strategy) authored by NITI Aayog, the AI Taskforce Report, Chapter 4 of the Economic Survey, the Draft National e-Commerce Policy and the Srikrishna Committee Report.

While these documents extol the virtues of data-driven analytics, they say little about preserving or augmenting India’s constitutional ethos through AI, even though doing so is crucial for safeguarding the rights and liberties of citizens and for paving the way to alleviating societal oppression.

In this essay, I outline the variety of AI use cases that are in the works. I then highlight India’s AI vision by culling the relevant aspects of policy instruments that impact the AI ecosystem and identify lacunae that can be rectified. Finally, I attempt to “constitutionalise AI policy” by grounding it in a framework of constitutional rights that guarantee protection to the most vulnerable sections of society.


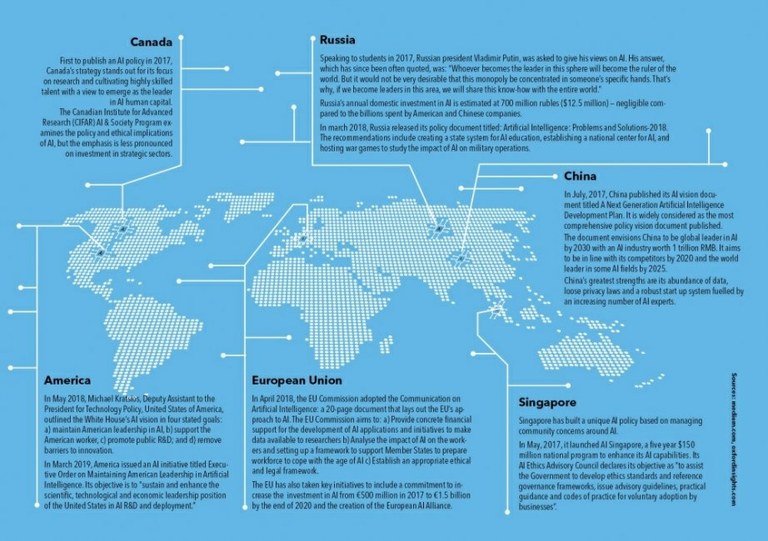

It is crucial to note that these use cases, still emerging in India, have been implemented at scale in other countries such as the United Kingdom, the United States and China, where many projects were rolled out with scant regard for ethical and legal considerations. Hindsight should make the Indian policy ecosystem much wiser. By closely studying the research produced in these diverse contexts, Indian policy-makers should find ways around the ethical and legal challenges that cropped up elsewhere and devise policy solutions that mitigate the concerns raised.

***

Before anything else, we need to define AI, an endeavour fraught with multiple contestations. My colleagues and I at the Centre for Internet & Society ducked this hurdle when conducting our research by adopting a function-based approach. An AI system (as opposed to one that automates routine, cognitive or non-cognitive tasks) is a dynamic learning system that allows for the delegation of some level of human decision-making to the system. This definition allows us to capture some of the unique challenges and prospects that stem from the use of AI.

The research I contributed to at CIS identified key trends in the use of AI across India. In healthcare, it is used for descriptive and predictive purposes.

For example, the Manipal Group of Hospitals tied up with IBM’s Watson for Oncology to aid doctors in the diagnosis and treatment of seven types of cancer. AI is also being used for analytical or diagnostic services: Niramai Health Analytix uses it to detect early-stage breast cancer, and Advenio Tecnosys detects tuberculosis through chest X-rays and acute infections using ultrasound images.

In the manufacturing industry, AI adoption is not uniform across all sectors. But the electronics, heavy electricals and automobile sectors have seen a notable transformation, gradually adopting and integrating AI solutions into their products and processes.

AI is also used in the burgeoning online lending segment to source credit score data. More than 80 per cent of the population has no credit score, so AI is used to aggregate data and generate scores for them. One such player is Credit Vidya, a Hyderabad-based data underwriting start-up that provides a credit score to first-time loan-seekers and feeds this information to big players such as ICICI Bank and HDFC Bank, among others. AI is also used by players such as Mastercard for fraud detection and risk management. In the finance world, companies such as Trade Rays use it to provide user-friendly algorithmic trading services.


The next big development is in law enforcement. Predictive policing is making great strides in various states, including Delhi, Punjab, Uttar Pradesh and Maharashtra. A brainchild of the Los Angeles Police Department, predictive policing is the use of analytical techniques such as Machine Learning to identify probable targets for intervention to prevent crime or to solve past crime through statistical predictions.

Conventional approaches to predictive policing start with the mapping of locations where crimes are concentrated (hot spots) by using algorithms to analyse aggregated data sets. Police in Uttar Pradesh and Delhi have partnered with the Indian Space Research Organisation (ISRO) in a Memorandum of Understanding to allow ISRO’s Advanced Data Processing Research Institute to map, visualise and compile reports about crime-related incidents.

There are aggressive developments on the facial recognition front as well. Punjab Police, in association with Gurugram-based start-up Staqu, has started implementing the Punjab Artificial Intelligence System (PAIS), which uses digitised criminal records and automated facial recognition to retrieve information on suspected criminals. At the national level, on June 28, the National Crime Records Bureau (NCRB) called for tenders to implement a centralised Automated Facial Recognition System (AFRS), defining the scope of work in broad terms as the “supply, installation and commissioning of hardware and software at NCRB.”

AI is also being increasingly used in the education sector to provide services to students, such as decision-making assistance and student-progress monitoring. The Andhra Pradesh government has started collecting information from a range of databases and processing it through Microsoft’s Machine Learning Platform to monitor children and focus attention on identifying and curbing school drop-outs.

In Andhra Pradesh, Microsoft collaborated with the International Crops Research Institute for the Semi-Arid Tropics (ICRISAT) to develop an AI Sowing App powered by Microsoft’s Cortana Intelligence Suite. It aggregated data using Machine Learning and sent advisories to farmers regarding optimal dates to sow. This was done via text messages on feature phones after ground research revealed that not many farmers owned or were able to use smartphones. The National Strategy specifically cited this use case and reported that it resulted in a 10-30 per cent increase in crop yield. The government of Karnataka has entered into a similar arrangement with Microsoft.

Finally, in the defence sector, our research found enthusiasm for AI in intelligence, surveillance and reconnaissance (ISR) functions, cyber defence, robot soldiers, risk terrain analysis and a move towards autonomous weapons systems. These projects are being developed by the Defence Research and Development Organisation, but the level of trust that the wings of the armed forces repose in AI-driven processes has yet to be publicly clarified. India also had the privilege of leading the global debate on Lethal Autonomous Weapons Systems (LAWS), with Amandeep Singh Gill chairing the United Nations Group of Governmental Experts (UN-GGE) on the issue. However, ‘lethal’ autonomous weapons systems at this stage appear to be a speck on the distant horizon.

***

Along with the range of use cases described above, a patchwork of policy imperatives is emerging to support this ecosystem. The umbrella document is the National Strategy for Artificial Intelligence published by the NITI Aayog in June 2018. Despite certain lacunae in its scope, the existence of a cohesive and robust document that lends a semblance of certainty and predictability to a rapidly emerging sphere is in itself a boon. The document focuses on how India can leverage AI for both economic growth and social inclusion.

NITI Aayog provides over 30 policy recommendations on investment in scientific research, reskilling, training and enabling the speedy adoption of AI across value chains. The flagship research initiative is a two-tiered endeavour to boost AI research in India. First, new centres of research excellence (COREs) will develop fundamental research. The COREs will act as feeders for international centres for transformational AI which will focus on creating AI-based applications across sectors.

This is an impressive theoretical objective, but questions surrounding implementation and structures of operation remain to be answered. China, by contrast, has not only conceptualised an ecosystem but, through the Three Year Action Plan to Promote the Development of New Generation Artificial Intelligence Industry, has also taken a whole-of-government approach to propelling its private sector to a leadership position. It has partnered with national tech companies and set clear goals for funding, such as the $2.1 billion technology park for AI research in Beijing.

The contents of the NITI document can be divided into a few themes, many of which have also found their way into multiple other instruments. First, it proposes an “AI+X” approach that captures the long-term vision for AI in India. AI is understood not as a wholesale replacement for existing processes but as an enabler of efficiency within them. NITI Aayog therefore looks at the process of deploying AI-driven technologies as taking an existing process (X) and adding AI to it (AI+X). This is a crucial recommendation that all AI projects should heed. Instead of waving AI as an all-encompassing magic wand across sectors, it is necessary to identify specific gaps AI can seek to remedy and then devise the process underpinning this implementation.


The AI-driven interventions to develop sowing apps for farmers in Karnataka and Andhra Pradesh are examples of effective implementation of this approach. Rather than resorting to knee-jerk reactions to agrarian woes, such as a hasty raising of the Minimum Support Price, careful research in this use case identified the lack of predictability in weather patterns as a key obstacle to productive crop yields, and showed that aggregating data through AI could give farmers better information on weather patterns. As internet penetration was relatively low in rural Karnataka, sending text messages to feature phones, which had a far wider presence, was indispensable to the end game.

***

This is in contrast to the ill-conceived path adopted by the Union ministry of electronics and information technology in guidelines for regulating social media platforms that host content (“intermediaries”). Rule 3(9) of the Draft of the Information Technology [Intermediary Guidelines (Amendment) Rules] 2018 mandates intermediaries to use “automated tools or appropriate mechanisms, with appropriate controls, for proactively identifying and removing or disabling public access to unlawful information or content”.

The rule was proposed in light of the fake news menace and the unbridled spread of “extremist” content online, but the phrase “automated tools or appropriate mechanisms” reflects an attitude that fails to consider the ground realities confronting companies and users alike. It ignores, for instance, the cost of automated tools, whether automated content moderation techniques developed in the West can be applied to Indic languages, and what grievance redress mechanisms users can avail of if their online speech is unduly restricted. This is thus a clear case of the “AI” mantra being drawn out of a hat without studying the “X” it is supposed to remedy.

The second focus of the National Strategy that has since morphed into a technology policy mainstay across instruments is on data governance, access and utilisation. The document says the major hurdle to the large scale adoption of AI in India is the difficulty in accessing structured data. It recommends developing big annotated data sets to “democratise data and multi-stakeholder marketplaces across the AI value chain”. It argues that at present only one per cent of data can be analysed as it exists in various unconnected silos. Through the creation of a formal market for data, aggregators such as diagnostic centres in the healthcare sector would curate datasets and place them in the market, with appropriate permissions and safeguards. AI firms could use available datasets rather than wasting effort sourcing and curating the sets themselves.

A cacophony of policy instruments by multiple government departments seeks to reconceptualise data to construct a theoretical framework that allows for its exploitation for AI-driven analytics. The first such concept is “community data”, which appears both in the Srikrishna Report that accompanied the draft Data Protection Bill in 2018 and in the draft e-commerce policy.

But there appears to be some conflict between the two usages. Srikrishna endorses a collective protection of privacy by protecting an identifiable community that has contributed to community data. This requires the fulfilment of three key conditions: first, the data belong to an identifiable community; second, individuals in the community consent to being a part of it; and third, the community as a whole consents to its data being treated as community data. On the other hand, the Department for Promotion of Industry and Internal Trade’s (DPIIT) draft e-commerce policy looks at community data as “societal commons” or a “national resource” that gives the community the right to access it but gives the government ultimate and overriding control of the data. This configuration of community data brings into question the consent framework in the Srikrishna Bill.


The matter is further confused by treating “data as a public good”, an idea put forward in Chapter 4 of the 2019 Economic Survey published by the Ministry of Finance. The chapter explicitly states that any such configuration needs to be deferential to privacy norms and the upcoming privacy law. Yet it also holds that the “personal data” of an individual in the custody of a government becomes a “public good” once the datasets are anonymised. At the same time, it pushes for the creation of a government database that links several individual databases. This leads to the “triangulation” problem: matching different datasets against one another can allow individuals to be identified even though each of the seemingly disparate databases has been anonymised.
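The triangulation problem can be made concrete with a toy linkage sketch. Everything in it is hypothetical: the two datasets, the names, and the assumption that both share pin code, birth year and gender (so-called quasi-identifiers) are invented for illustration and do not describe any actual government database.

```python
# Toy linkage sketch. All records, names and field choices are hypothetical.

# Dataset A: "anonymised" health records (names removed, quasi-identifiers kept).
health_records = [
    {"pin_code": "560001", "birth_year": 1984, "gender": "F", "diagnosis": "diabetes"},
    {"pin_code": "560001", "birth_year": 1990, "gender": "M", "diagnosis": "tuberculosis"},
    {"pin_code": "110003", "birth_year": 1984, "gender": "F", "diagnosis": "asthma"},
]

# Dataset B: a separate register that carries names alongside the same fields.
public_register = [
    {"name": "Person X", "pin_code": "560001", "birth_year": 1990, "gender": "M"},
    {"name": "Person Y", "pin_code": "110003", "birth_year": 1984, "gender": "F"},
]

# Assumed shared fields; neither dataset is identifying on these alone.
QUASI_IDENTIFIERS = ("pin_code", "birth_year", "gender")


def key(record):
    """Combine the quasi-identifier fields into a single lookup key."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link(anonymised, identified):
    """Attach names to anonymised records whose quasi-identifiers match exactly one named person."""
    index = {}
    for person in identified:
        index.setdefault(key(person), []).append(person["name"])
    matches = {}
    for record in anonymised:
        names = index.get(key(record), [])
        if len(names) == 1:  # a unique match pins the record to one individual
            matches[names[0]] = record["diagnosis"]
    return matches


print(link(health_records, public_register))
```

Neither dataset on its own ties a diagnosis to a name; matching three everyday fields does. Real-world linkage is messier, but the principle is the same, which is why anonymisation standards have to account for the other datasets a record may be joined with.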

“Building an AI ecosystem” was also one of the ostensible reasons for data localisation, the government’s gambit to mandate that foreign companies store the data of Indian citizens within national borders. In addition to a few other policy instruments with similar mandates, Section 40 of the Draft Personal Data Protection Bill mandates that all “critical data” (to be notified by the government) be stored exclusively in India. All other personal data must have a live, serving copy stored in India even if transfer abroad is allowed. This was an attempt to ensure that foreign data processors are not the sole beneficiaries of AI-driven insights.

The government’s attempt to harness data as a national resource for the development of AI-based solutions may be well intentioned but is fraught with core problems in implementation. First, the notion of data as a national resource or as a public good walks a tightrope with constitutionally guaranteed protections around privacy, which will be codified in the upcoming Personal Data Protection Bill. My concerns are not quite so grave in the case of genuine “public data” like traffic signal data or pollution data. However, the Economic Survey manages to crudely amalgamate personal data into the mix.

It also states that personal data in the custody of a government is a public good once the datasets are anonymised. This includes transactions data on the Unified Payments Interface (UPI), administrative data such as birth and death records, and institutional data such as records held by public hospitals or schools on patients or pupils. At the same time, its push for a linked government database leads to the triangulation problem outlined above. The chapter also suggests that such data may be sold to private firms (it is unclear whether this includes foreign as well as domestic firms). This not only contradicts the notion of a public good but also poses a serious threat to the confidentiality and security of personal data.

***

Therefore, along with the concerted endeavour to create data marketplaces, policy-makers must take three steps. First, they must differentiate between public data and personal data that individuals may consent to make public. The parameters for free and informed consent, as codified in the Draft Personal Data Protection Bill, need to be strictly followed, as there is a risk of de-anonymisation once data finds its way into the marketplace. Second, they must clearly define a community and the parameters for what constitutes individual consent to be part of it. Finally, along with technical work on setting up a national data marketplace, there must be sustained efforts to guarantee stronger security and standards of anonymisation.


Even assuming that a constitutionally valid paradigm can be created, the excessive focus on data access by tech players dodges the question of whether analytics firms are capable of processing this data and deriving meaningful insights from it. Scholars of China, arguably the poster child of data-driven economic growth, have sent mixed messages. Jeffrey Ding argues that despite having half the technical capabilities of the US, easy access to data gives China a competitive edge in global AI competition. By contrast, Andrew Ng has argued that operationalising a sufficient number of relevant datasets still remains a challenge. Ng’s views are backed up by insiders at Chinese tech giant Tencent, who say the company still finds it difficult to integrate data streams due to technical hurdles. NITI Aayog’s idea of a multi-stream data marketplace may theoretically solve these hurdles but will require sustained funding and research innovation to become reality.

The National Strategy suggests that government should create a multi-disciplinary committee to set up this marketplace and explore levers for its implementation. This is certainly the need of the hour. It also rightly highlights the importance of research partnerships between academia and the private sector, and the need to support start-ups. There is therefore an urgent need for innovative allied policy instruments that support the burgeoning start-up sector. Proposals such as data localisation may hurt smaller players as they will have to bear the increased fixed costs of setting up or renting data centres.

The National Strategy also incongruously mentions that India should position itself as a “garage” for the use of AI in emerging economies. This could mean Indian citizens are used as guinea pigs for AI-driven solutions at the cost of their fundamental rights. It could also imply that India should occupy a leadership position and work with other emerging economies to frame the global rights-based discourse, seeking equitable applications of AI that improve the plight of the most vulnerable in society.

***

Our constitutional ethos places us in a unique position to develop a framework that enables the actualisation of this equitable vision, a goal the policy instruments put out thus far appear to have missed. While the National Strategy includes a section on the privacy, security and ethical implications of AI, it stops short of rooting them in fundamental rights and constitutional principles. As a centralised policy instrument, the National Strategy deserves praise for identifying key levers in the future of India’s AI ecosystem and, with the exception of the concerns I outlined above, it is on par with the policy-making thought process in any other nation.

When we start using constitutional principles for AI governance, we must remember that under Article 12, an individual can file a writ against the state for violation of a fundamental right if the action is taken under the aegis of a “public function”. To combat discrimination by private actors, the state can enact legislation compelling them to comply with constitutional mandates. In July, Rajeev Chandrasekhar, a Rajya Sabha MP, suggested a law to combat algorithmic discrimination along the lines of the Algorithmic Accountability Bill proposed in the US Senate. Any such legislation will need to answer three core constitutional questions that track the “golden triangle” of Articles 14, 19 and 21 of the Indian Constitution: accountability and transparency, algorithmic discrimination, and the guarantee of freedom of expression and individual privacy.

Algorithms are developed by human beings who have their own cognitive biases. This means ostensibly neutral algorithms can have an unintentional disparate impact on certain, often traditionally disenfranchised groups.

In the MIT Technology Review, Karen Hao explains three stages at which bias might creep in. The first stage is the framing of the problem itself. When computer scientists create a deep-learning model, the first thing they decide is what they want the model to achieve. However, frequently desired outcomes such as “profitability”, “creditworthiness” or “recruitability” are subjective and imprecise concepts subject to human cognitive bias. This makes it difficult to devise screening algorithms that fairly portray society and the complex medley of identities, attributes and structures of power that define it.

The second stage Hao mentions is the data collection phase. Training data could lead to bias if it is unrepresentative of reality or represents entrenched prejudice or structural inequality. For example, most Natural Language Processing systems used for Part-of-Speech (POS) tagging in the US are trained on readily available data sets from the Wall Street Journal. Accuracy naturally decreases when such a system is applied to individuals, largely ethnic minorities, whose language does not mirror that of the Journal.

According to Hao, the final stage for algorithmic bias is data preparation, which involves selecting the parameters the developer wants the algorithm to consider. For example, when determining the “risk-profile” of car owners seeking insurance, geographical location could be one parameter. This could be justified by the ostensibly neutral argument that those residing in inner-city areas with narrower roads are more likely to have scratches on their vehicles. But as inner cities in the US have a disproportionately high number of ethnic minorities or other vulnerable socio-economic groups, “pin code” becomes a facially neutral proxy for race or class-based discrimination.
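A minimal sketch of how a facially neutral variable can act as a proxy. The applicants, the 1.5x inner-city loading and the group labels are all hypothetical assumptions; the point is only that the pricing rule never reads the group field, yet the average premium still differs by group because residence and group are correlated in the data.

```python
# Hypothetical applicants: pin code is an input to pricing; group is NOT.
applicants = [
    {"pin_code": "inner", "group": "minority"},
    {"pin_code": "inner", "group": "minority"},
    {"pin_code": "inner", "group": "majority"},
    {"pin_code": "outer", "group": "majority"},
    {"pin_code": "outer", "group": "majority"},
    {"pin_code": "outer", "group": "minority"},
]

BASE_PREMIUM = 10_000
INNER_CITY_LOADING = 1.5  # hypothetical surcharge justified by "narrower roads"


def premium(applicant):
    """A facially neutral rule: only the pin code is consulted, never the group."""
    if applicant["pin_code"] == "inner":
        return BASE_PREMIUM * INNER_CITY_LOADING
    return BASE_PREMIUM


def average_premium_by_group(people):
    """Audit step: average the rule's output per group after the fact."""
    totals, counts = {}, {}
    for person in people:
        g = person["group"]
        totals[g] = totals.get(g, 0) + premium(person)
        counts[g] = counts.get(g, 0) + 1
    return {g: totals[g] / counts[g] for g in totals}


print(average_premium_by_group(applicants))
```

Two applicants with the same pin code always pay the same, so the rule looks neutral when inspected line by line. The disparity only becomes visible when outcomes are grouped by a characteristic the rule never sees.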

***

The right to equality has been carved into multiple international human rights instruments and into the Equality Code in Articles 14-18 of the Indian Constitution. The Supreme Court’s dominant approach to interpreting the right to equality has been to focus on “grounds” of discrimination under Article 15(1), which has resulted in a lack of recognition of unintentional discrimination and disparate impact.

A notable exception, as constitutional scholar Gautam Bhatia points out, is the case of N.M. Thomas, which pertained to reservation in promotions. Justice Mathew argued that the test for inequality in Article 16(4) is an effects-oriented test independent of the formal motivation underlying a specific act. Justices Krishna Iyer and Mathew also articulated a grander vision in which the Equality Code transcends the individual disabilities embedded in class-driven social hierarchies. This understanding is crucial for governing data-driven decision-making that affects vulnerable communities. Any law or policy on AI-related discrimination must also include disparate impact within its definition of “discrimination” to ensure that developers think about the adverse consequences even of well-intentioned decisions.
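The difference between a grounds-based test and an effects-oriented one can be sketched in a few lines. The loan decisions and group labels below are hypothetical, and the ratio of approval rates is one common way of measuring disparate impact, used here as an illustrative assumption rather than a standard drawn from Indian law. The records carry no stated motive at all; the test looks only at outcomes.

```python
# Hypothetical loan decisions; group labels and outcomes are invented for illustration.
decisions = [
    {"group": "group_a", "approved": True},
    {"group": "group_a", "approved": False},
    {"group": "group_a", "approved": False},
    {"group": "group_a", "approved": True},
    {"group": "group_a", "approved": False},
    {"group": "group_b", "approved": True},
    {"group": "group_b", "approved": True},
    {"group": "group_b", "approved": False},
    {"group": "group_b", "approved": True},
    {"group": "group_b", "approved": True},
]


def approval_rate(records, group):
    """Share of applicants in `group` who were approved (True counts as 1)."""
    relevant = [r for r in records if r["group"] == group]
    return sum(r["approved"] for r in relevant) / len(relevant)


def impact_ratio(records, affected_group, comparison_group):
    """Ratio of approval rates; values well below 1 signal a disparate effect,
    whatever the intent behind the individual decisions."""
    return approval_rate(records, affected_group) / approval_rate(records, comparison_group)


print(impact_ratio(decisions, "group_a", "group_b"))
```

Here group_a is approved at half the rate of group_b. Where the threshold for concern should lie is a policy choice the sketch deliberately leaves open.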

AI driven assessments have been challenged on grounds of constitutional violations in other jurisdictions. In 2016, the Wisconsin Supreme Court considered the legality of using risk assessment tools such as COMPAS for sentencing criminals. It affirmed the trial court’s findings and held that using COMPAS did not violate constitutional due process standards. Eric Loomis had argued that using COMPAS infringed both his right to an individualised sentence and to accurate information as COMPAS provided data for specific groups and kept the methodology used to prepare the report a trade secret. He additionally argued that the court used unconstitutional gendered assessments as the tool used gender as one of the parameters.

The Wisconsin Supreme Court disagreed with Loomis, reasoning that COMPAS used only publicly available data and data provided by the defendant, which apparently meant Loomis could have verified any information contained in the report. On the question of individualisation, the court acknowledged that COMPAS provided only aggregate data for groups similarly placed to the offender. However, it went on to hold that because the report was not the sole basis for the judge’s decision, a COMPAS assessment would be sufficiently individualised, as courts retained the discretion and information necessary to disagree with it.

By assuming that Loomis could genuinely have verified all the data collected about similarly placed groups, and that judges would exercise discretion to prevent COMPAS’s decision-making patterns from entrenching inequalities, the judges ignored social realities. Algorithmic decision-making systems are an extension of unequal decision-making that re-entrenches prevailing societal perceptions around identity and behaviour. An instance of discrimination cannot be looked at in isolation but must be seen as one in a menagerie of production systems that define, modulate and regulate social existence.

The policy-making ecosystem needs, therefore, to galvanise the “transformative” vision of India’s democratic fibre and study the existing systems and power structures that AI could re-entrench or mitigate. For example, in the matter of bank loans, there is a presumption against the creditworthiness of those working in the informal sector. Aggregated decision-making may lead to more equitable outcomes, provided there is concrete thought about the organisational structures making these decisions and the constitutional safeguards in place.

Most case studies on algorithmic discrimination in Virginia Eubanks’ Automating Inequality or Safiya Noble’s Algorithms of Oppression are based on western contexts. There is an urgent need for publicly available empirical studies on pilot cases in India to understand the contours of discrimination. Primary research should explore the following related questions. Are ostensibly neutral variables being used to exclude certain communities from accessing opportunities and resources, or do they have a disproportionate impact on their civil liberties? Is there diversity in the identities of the coders themselves? Are the training data sets used representative and diverse? And, finally, what role does data-driven decision-making play in furthering the battle against embedded structural hierarchies?

***

A key feature of AI-driven solutions is the “black box” that processes inputs and generates actionable outputs behind a veil of opacity to the human operator. Essentially, the black box denotes that aspect of the human neural decision-making function that has been delegated to the machine. A lack of transparency or understanding could lead to what Frank Pasquale terms a “Black Box Society” where algorithms define the trajectories of daily existence unless “the values and prerogatives of the encoded rules hidden within black boxes” are challenged.

Ex-post facto assessment is often insufficient for arriving at genuine accountability. For example, claims of success for predictive policing in the US drew on the fact that police did indeed find more crime in areas deemed “high risk”. But this assessment does not account for a vicious cycle: more crime is detected in an area simply because more police officers are deployed there. Here, the National Strategy rightly identifies that simply opening up code may not deconstruct the black box, as not all stakeholders affected by AI solutions will understand the code. The constant aim should be explicability, which means the human developer should be able to explain how certain factors may be used to arrive at a certain cluster of outcomes in a given set of situations.
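The vicious cycle can be illustrated with a deliberately simple simulation. Its assumptions are hypothetical: two areas with identical underlying crime, a rule that sends 80 of 100 patrols to whichever area has more recorded crime, and recorded crime that grows in proportion to patrol presence. None of these figures comes from an actual deployment.

```python
# Hypothetical two-area model; every number here is an assumption for illustration.
CRIMES_DETECTED_PER_10_PATROLS = {"area_a": 3, "area_b": 3}  # identical underlying crime
TOTAL_PATROLS = 100
HOT_SPOT_PATROLS = 80  # the area with more recorded crime gets 80 of the 100 patrols


def allocate(recorded):
    """Send most patrols to whichever area currently has the most recorded crime."""
    hot_spot = max(recorded, key=recorded.get)
    return {
        area: HOT_SPOT_PATROLS if area == hot_spot else TOTAL_PATROLS - HOT_SPOT_PATROLS
        for area in recorded
    }


def simulate(rounds, recorded):
    """Each round, recorded crime grows with patrol presence, not with underlying crime."""
    recorded = dict(recorded)  # copy so the caller's starting data is untouched
    for _ in range(rounds):
        patrols = allocate(recorded)
        for area, count in patrols.items():
            recorded[area] += count * CRIMES_DETECTED_PER_10_PATROLS[area] // 10
    return recorded


print(simulate(10, {"area_a": 11, "area_b": 10}))  # {'area_a': 251, 'area_b': 70}
```

A one-record difference at the start becomes 251 against 70 after ten rounds, even though both areas were given the same underlying crime. Judged ex post by recorded crime alone, the “high risk” label for area_a would look vindicated.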

The requirement of accountability stems from the Right to Life provision under Article 21. As the seven-judge bench held in Maneka Gandhi vs. Union of India, any procedure established by law must be seen to be “fair, just and reasonable” and not “fanciful, oppressive or arbitrary.”

The Right to Privacy was recognised as a fundamental right by the nine-judge bench in K.S. Puttaswamy (Retd.) vs. Union of India. Mass surveillance can lead to the alteration of behavioural patterns, which may in turn be used by the State to suppress dissent. Pulling vast tracts of data on all suspected criminals, as facial recognition systems like PAIS do, creates a “presumption of criminality” that can have a chilling effect on democratic values.

Therefore, any use of such systems, particularly by law enforcement, would need to satisfy the requirements for infringing on the right to privacy: the existence of a law; necessity, meaning a clearly defined state objective; and proportionality between the state objective and the means used, which must restrict fundamental rights the least. Along with centralised policy instruments such as the National Strategy, all initiatives taken in pursuance of India’s AI agenda must pay heed to the democratic virtues of privacy and free speech and their interlinkages.

India needs a law to regulate the impact of Artificial Intelligence and enable its development without restricting fundamental rights. However, regulation should not adopt a “one-size-fits-all” approach that views all uses with the same level of rigidity. Regulatory intervention should be based on questions around power asymmetries and the likelihood of a given use case affronting the human dignity enshrined in India’s constitutional ethos.


The High-Level Expert Group on Artificial Intelligence (AI HLEG), set up by the European Commission in June 2018, published its “Ethics Guidelines for Trustworthy AI” earlier this year. The guidelines set out seven core requirements: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability. While the principles are comprehensive, the document stops short of referencing any domestic or international constitutional law that helps cement these values. The Indian Constitution can help define and concretise each of these principles and could be used as a vehicle to foster genuine social inclusion and mitigation of structural injustice through AI.

At the centre of the vision must be the inherent rights of the individual. The constitutional moment for data-driven decision-making therefore emerges when we conceptualise a way through which AI can be used to preserve and improve the enforcement of rights while also ensuring that data does not become a further avenue for exploitation.

National vision transcends the boundaries of policy and, to misuse Peter Drucker, “eats strategy for breakfast”. As an aspiring leader in global discourse, India can lay the rules of the road for other emerging economies not only by incubating, innovating and implementing AI-powered technologies but by grounding them in a lattice of rich constitutional jurisprudence that empowers the individual, particularly the vulnerable in society. While the multiple policy instruments and the National Strategy are important cogs in the wheel, the long-term vision can only be framed by how the plethora of actors, interest groups and stakeholders engage with the notion of an AI-powered Indian society.