
Notes for India as the digital trade juggernaut rolls on

This article by Arindrajit Basu was published in The Hindu on February 8, 2022.

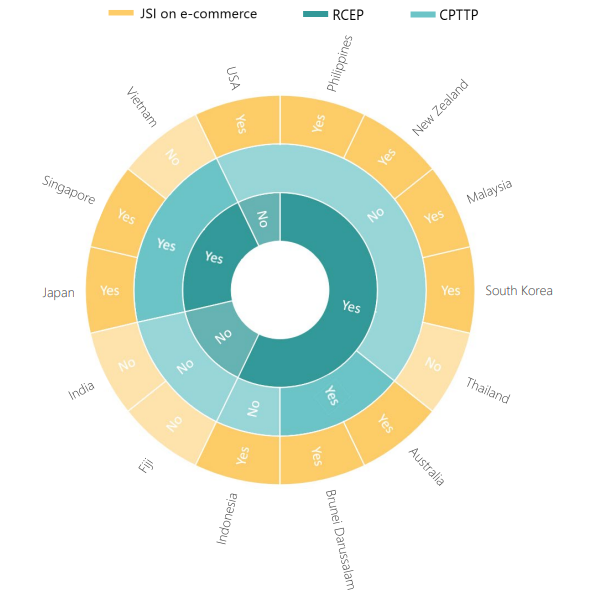

Despite the cancellation, due to COVID-19, of the World Trade Organization's (WTO) Twelfth Ministerial Conference (MC12), which had been scheduled for November 30 to December 3, 2021, digital trade negotiations continue their ambitious march forward. On December 14, 2021, Australia, Japan, and Singapore, co-convenors of the plurilateral Joint Statement Initiative (JSI) on e-commerce, welcomed the ‘substantial progress’ made at the talks over the past three years and stated that they expected convergence on more issues by the end of 2022.

Holding out

But therein lies the rub: even though JSI members account for over 90% of global trade, and the initiative welcomes newer entrants, over half of WTO members (largely from the developing world) continue to opt out of these negotiations. They fear being arm-twisted into accepting global rules that could etiolate domestic policymaking and economic growth. India and South Africa have led the resistance and been the JSI’s most vocal critics. India has thus far resisted pressures from the developed world to jump onto the JSI bandwagon, largely through coherent legal argumentation against the JSI and a long-term developmental vision. Yet, given the increasingly fragmented global trading landscape and the rising importance of the global digital economy, can India tailor its engagement with the WTO to better accommodate its economic and geopolitical interests?

Global rules on digital trade

The WTO emerged in a largely analogue world in 1994. It was only at the Second Ministerial Conference (1998) that members agreed on core rules for e-commerce regulation. A temporary moratorium was imposed on customs duties relating to the electronic transmission of goods and services. This moratorium has been renewed repeatedly, over consistent opposition from India and South Africa, which argue that it imposes significant costs on developing countries by depriving them of the revenue that customs duties would bring.

The members also agreed to set up a work programme on e-commerce at the General Council across four issue areas: goods, services, intellectual property, and development. Frustrated by a lack of progress in the two decades that followed, 70 members brokered the JSI in December 2017 to initiate exploratory work on the trade-related aspects of e-commerce. Several countries, including developing countries, signed up in 2019 despite holding views contrary to those of most JSI members on key issues. Surprise entrants China and Indonesia argued that they sought to shape the rules from within the initiative rather than sit on the sidelines.

India and South Africa have rightly pointed out that the JSI contravenes the WTO’s consensus-based framework, where every member has a voice and vote regardless of economic standing. Unlike the General Council Work Programme, which India and South Africa have attempted to revitalise in the past year, the JSI does not include all WTO members. For the process to be legally valid, the initiative must either build consensus or negotiate a plurilateral agreement outside the aegis of the WTO.

India and South Africa’s positioning goes to the heart of the global trading regime: how to balance the sovereign right of states to shape domestic policy with international obligations that would enable them to reap the benefits of a global trading system.

A contested regime

There are several issues upon which the developed and developing worlds disagree. One such issue concerns international rules relating to the free flow of data across borders. Several countries, both within and outside the JSI, have imposed data localisation mandates that compel corporations to store and process data within territorial borders. This is a key policy priority for India. Several payment card companies, including Mastercard and American Express, were prohibited from issuing new cards for failure to comply with a 2018 financial data localisation directive from the Reserve Bank of India. The Joint Parliamentary Committee (JPC) on data protection has recommended stringent localisation measures for sensitive personal data and critical personal data in India’s data protection legislation. However, for nations and industries in the developed world looking to access new digital markets, these restrictions impose unnecessary compliance costs, thus arguably hampering innovation and supposedly amounting to unfair protectionism.

There is a similar disagreement over domestic laws that mandate the disclosure of source code. Developed countries believe that such mandates hamper innovation, whereas developing countries believe they are essential for algorithmic transparency and fairness, which was another key recommendation of the JPC report in December 2021.

India’s choices

India’s global position is reinforced through narrative building by political and industrial leaders alike. Data sovereignty is championed as a means of resisting ‘data colonialism’: the exploitative economic practices and intensive lobbying of Silicon Valley companies. Policymaking for India’s digital economy is at a critical juncture. Surveillance reform, personal data protection, algorithmic governance, and non-personal data regulation must be galvanised through evidence-based insights, and must work for individuals, communities, and aspiring local businesses, not just established larger players.

Hastily signing trading obligations could reduce the space available to frame appropriate policy. But sitting out trade negotiations will mean that the digital trade juggernaut will continue unchecked, through mega-regional trading agreements such as the Regional Comprehensive Economic Partnership (RCEP) and the Comprehensive and Progressive Agreement for Trans-Pacific Partnership (CPTPP). India could risk becoming an unwitting standard-taker in an already fragmented trading regime and lose out on opportunities to shape these rules instead.

Alternatives exist; negotiations need not mean compromise. For example, exceptions to digital trade rules, such as ‘legitimate public policy objective’ or ‘essential security interests’, could be negotiated to preserve policymaking space where needed while still acquiescing to the larger agreement. Further, any outcome need not be an all-or-nothing arrangement. Taking a cue from the Digital Economy Partnership Agreement (DEPA) between Singapore, Chile, and New Zealand, India can push for a framework in which countries can pick and choose the modules with which they wish to comply. Additional modules can then be adopted incrementally as emerging economies such as India work through their domestic regulations.

Despite its failings, the WTO plays a critical role in global governance and is vital to India’s strategic interests. Negotiating without surrendering domestic policy-making holds the key to India’s digital future.

Arindrajit Basu is Research Lead at the Centre for Internet and Society, India. The views expressed are personal. The author would like to thank The Clean Copy for edits on a draft of this article.

CIS Comments and Recommendations on the Data Protection Bill, 2021

After nearly two years of deliberations and a few changes in its composition, the Joint Parliamentary Committee (JPC), on 17 December 2021, submitted its report on the Personal Data Protection Bill, 2019 (2019 Bill). The report also contains a new version of the law titled the Data Protection Bill, 2021 (2021 Bill). Although there were no major revisions from the previous version other than the inclusion of all data under the ambit of the bill, some provisions were amended.

This document is a revised version of the comments we provided on the 2019 Bill on 20 February 2020, updated to reflect the amendments in the 2021 Bill. Through it, we aim to shed light on issues highlighted in our previous comments that have not yet been addressed, and we add comments on sections that have become more relevant since the pandemic began. Where our previous comments have been either not addressed or only partially addressed, we reiterate them.

These general comments should be read in conjunction with our previous recommendations to get a comprehensive overview of what has changed from the previous version and what has remained the same. This document can also be read alongside the new Data Protection Bill, 2021 and the JPC’s report to understand some of the significant provisions of the bill.

Review and editing by Arindrajit Basu. Copy editing by The Clean Copy. Shared under a Creative Commons Attribution 4.0 International license.

How Function Of State May Limit Informed Consent: Examining Clause 12 Of The Data Protection Bill

The blog post was published in Medianama on February 18, 2022. This is the first of a two-part series by Amber Sinha.

In 2018, hours after the Committee of Experts led by Justice Srikrishna released its report and draft bill, I wrote an opinion piece giving my quick take on what was good and bad about the bill. A section of my analysis focused on Clause 12 (then Clause 13), which provides for non-consensual processing of personal data for state functions. I called this provision a ‘carte blanche’ that effectively allowed the state to process a citizen’s data for practically all interactions between them without having to deal with the inconvenience of seeking consent. My former colleague Pranesh Prakash pointed out that this was not a correct interpretation of the provision, as I had missed the significance of the word ‘necessary’, which was inserted to act as a check on the powers of the state. He also pointed out, correctly, that in its construction this provision is equivalent to the position in the European Union’s General Data Protection Regulation (Article 6(1)(e)), and is perhaps even more restrictive.

While I agree with Pranesh’s points above (his claims are largely factual, and there can be no basis for disagreement), my view of Clause 12 has not changed. While Clause 35 has been the focus of considerable discourse and analysis, for good reason, I continue to believe that Clause 12 remains among the most dangerous provisions of this bill, and here I will try to unpack why.

The Data Protection Bill 2021 has a chapter on the grounds for processing personal data, and one of those grounds is consent by the individual. The rest of the grounds deal with various situations in which personal data can be processed without seeking consent from the individual. Clause 12 lays down one of these grounds. It allows the state to process data without the consent of the individual in the following cases:

a) where it is necessary to respond to a medical emergency

b) where it is necessary for the state to provide a service or benefit to the individual

c) where it is necessary for the state to issue any certification, licence or permit

d) where it is necessary under any central or state legislation, or to comply with a judicial order

e) where it is necessary for any measures during an epidemic, outbreak or threat to public health

f) where it is necessary for safety procedures during a disaster or breakdown of public order

In order to carry out (b) and (c), there is also the added requirement that the state function must be authorised by law.

Twin restrictions in Clause 12

The words ‘necessary’ and ‘authorised by law’ are intended to act as checks on the powers of the state. The first restriction seeks to limit processing to cases where the processing of personal data is necessary for the exercise of the state function. This should mean that if the state function can be exercised without non-consensual processing of personal data, then it must be exercised that way. Therefore, while acting under this provision, the state should only process my data if it needs to do so to provide me with the service or benefit. The second restriction means that the provision applies only to those state functions that are authorised by law, meaning only those functions that are supported by validly enacted legislation.

What we need to keep in mind regarding Clause 12 is that the requirement of ‘authorised by law’ does not mean that legislation must provide for that specific kind of data processing; it simply means that the larger state function must have legal backing. The danger lies in how these provisions may be used to justify broad mandates. Suppose the activity in question is the non-consensual collection and processing of, say, citizens’ demographic data to create state resident hubs that will assist in the provision of services such as healthcare, housing, and other welfare functions. All that may be required is that the welfare functions are authorised by law.

Scope of privacy under Puttaswamy

It is worth examining, at this point, the nature of the restrictions on privacy that the landmark Puttaswamy judgement considered the state could impose. The judgement clearly identifies the principles of informed consent and purpose limitation as central to informational privacy. As discussed repeatedly during the hearings and in the judgement, privacy, like any other fundamental right, is not absolute. However, restrictions on the right must be reasonable. In the case of Clause 12, the restrictions on privacy in the form of denial of informed consent need to be tested against a constitutional standard. In Puttaswamy, the bench was not required to provide a legal test to determine the extent and scope of the right to privacy, but it does provide sufficient guidance for us to contemplate how the limits and scope of the constitutional right to privacy could be determined in future cases.

The Puttaswamy judgement clearly states that “the right to privacy is protected as an intrinsic part of the right to life and personal liberty under Article 21 and as a part of the freedoms guaranteed by Part III of the Constitution.” By locating the right not just in Article 21 but also in the entirety of Part III, the bench clearly requires that “the drill of various Articles to which the right relates must be scrupulously followed.” This means that where transgressions on privacy relate to different provisions in Part III, the different tests under those provisions will apply along with those in Article 21. For instance, where the restrictions relate to personal freedoms, the tests under both Article 19 (right to freedoms) and Article 21 (right to life and liberty) will apply.

In the case of Clause 12, the three tests laid down by Justice Chandrachud are the most relevant:

a) the existence of a “law”

b) a “legitimate State interest”

c) the requirement of “proportionality”.

The first test is already reflected in the use of the phrase ‘authorised by law’ in Clause 12. The test under Article 21 would imply that the function of the state should not merely be authorised by law, but that the law, in both its substance and procedure, must be ‘fair, just and reasonable.’ The next test is that of ‘legitimate state interest’. In its report, the Joint Parliamentary Committee places emphasis on Justice Chandrachud’s use of “allocation of resources for human development” in an illustrative list of legitimate state interests. The report claims that the ‘functions of the state’ ground therefore satisfies the test of legitimate state interest. We do not dispute this claim.

Proportionality and Clause 12

It is the final test of ‘proportionality’ articulated in the Puttaswamy judgement that is most operative in this context. Unlike Clauses 42 and 43, which include the twin tests of necessity and proportionality, Clause 12 employs only one of them: the committee has chosen to rely on necessity alone. Proportionality is a commonly employed ground in European jurisprudence and in common law countries such as Canada and South Africa, and it is also an integral part of Indian jurisprudence. As commonly understood, the proportionality test consists of three parts:

a) the limiting measures must be carefully designed, or rationally connected, to the objective

b) they must impair the right as little as possible

c) the effects of the limiting measures must not be so severe on individual or group rights that the legitimate state interest, albeit important, is outweighed by the abridgement of rights.

The first test is similar to the test of proximity under Article 19, and the test of ‘necessity’ in Clause 12 must be viewed in this context. It must be remembered that the test of necessity is not limited to situations where it may not be possible to obtain consent while providing benefits. My reservations about the sufficiency of this standard stem from observations made in the report, as well as from the relatively small body of jurisprudence on this term in Indian law.

The Srikrishna Report interestingly mentions three kinds of scenarios where consent should not be required — where it is not appropriate, necessary, or relevant for processing. The report goes on to give an example of inappropriateness. In cases where data is being gathered to provide welfare services, there is an imbalance in power between the citizen and the state. Having made that observation, the committee inexplicably arrives at a conclusion that the response to this problem is to further erode the power available to citizens by removing the need for consent altogether under Clause 12. There is limited jurisprudence on the standard of ‘necessity’ under Indian law. The Supreme Court has articulated this test as ‘having reasonable relation to the object the legislation has in view.’ If we look elsewhere for guidance on how to read ‘necessity’, the ECHR in Handyside v United Kingdom held it to be neither “synonymous with indispensable” nor does it have the “flexibility of such expressions as admissible, ordinary, useful, reasonable or desirable.” In short, there must be a pressing social need to satisfy this ground.

However, the other two tests of proportionality are not mentioned in Clause 12 at all. There is no requirement of ‘narrow tailoring’, i.e. that the scope of non-consensual processing must impair the right as little as possible. It is doubly unfortunate that this test does not find a place, as, unlike necessity, ‘narrow tailoring’ is a test well understood in Indian law. This means that while there is a requirement to show that processing personal data was necessary to provide a service or benefit, there is no requirement to keep non-consensual processing to a minimum. The fear is that as long as there is a reasonable relation between processing data and the object of the state function, state authorities and other bodies authorised by them need not bother with obtaining consent.

Similarly, the third test of proportionality is not represented in this provision either. That test balances the abridgement of individual rights against the legitimate state interest in question, and requires that the former must not outweigh the latter. Without it, Clause 12 is devoid of any such consideration. Therefore, as long as the test of necessity is met, the state need not weigh the denial of consent against the service or benefit being provided.

The collective implication of leaving out ‘proportionality’ from Clause 12 is to provide very wide discretionary powers to the state, by setting the threshold to circumvent informed consent extremely low. In the next post, I will demonstrate the ease with which Clause 12 can allow indiscriminate data sharing by focusing on the Indian government’s digital healthcare schemes.

Clause 12 Of The Data Protection Bill And Digital Healthcare: A Case Study

The blog post was published in Medianama on February 21, 2022. This is the second in a two-part series by Amber Sinha.

In the previous post, I looked at provisions on non-consensual data processing for state functions under the most recent version of recommendations by the Joint Parliamentary Committee on India’s Data Protection Bill (DPB). The true impact of these provisions can only be appreciated in light of ongoing policy developments and real-life implications.

To appreciate the significance of the dilutions in Clause 12, let us consider the Indian state’s range of schemes promoting digital healthcare. In July 2018, NITI Aayog, a central government policy think tank in India, released a strategy and approach paper (Strategy Paper) on the formulation of the National Health Stack, which envisions the creation of a federated application programming interface (API)-enabled health information ecosystem. While the Ministry of Health and Family Welfare has focused on the creation of Electronic Health Records (EHR) Standards for India during the last few years and has also identified a contractor for the creation of a centralised health information platform (IHIP), the Strategy Paper advocates a completely different approach, described as a Personal Health Records (PHR) framework. In 2021, the National Digital Health Mission (NDHM) was launched, under which citizens have the option to obtain a digital health ID. A digital health ID is a unique ID that will carry all of a person’s health records.

A Stack Model for Big Data Ecosystem in Healthcare

A stack model, as envisaged in the Strategy Paper, consists of several layers of open APIs connected to each other, often tied together by a unique health identifier. The open nature of the APIs has the advantage of allowing public and private actors to build solutions on top of them that are interoperable with all parts of the stack. It is, however, worth considering both this ‘openness’ and the role that the state plays in it.

Even though the APIs themselves are open, they are part of a pre-decided technological paradigm, built by private actors and blessed by the state. Innovators can build on it, but the options available to them are limited by the information architecture that the stack model creates. When such a technological paradigm is created for healthcare reform and health data, the stack model poses additional challenges. By tying the stack to a unique identity without appropriate processes in place for access control, siloed information, and encrypted communication, the stack model raises tremendous privacy and security concerns. The broad language of Clause 12 of the DPB needs to be looked at in this context.
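To make the linkage concern concrete, here is a minimal Python sketch under purely hypothetical assumptions: three separate repositories (a hospital, an insurer, a pharmacy) whose records all carry the same unique health ID. The repository names, field names and the `link_by_identifier` helper are illustrative inventions, not part of the National Health Stack or any real NDHM API. The sketch only shows that once a common identifier exists, merging siloed records into a single profile takes a few lines unless access control prevents it.

```python
from collections import defaultdict

# Hypothetical records held in three separate repositories.
# None of these names correspond to real National Health Stack systems.
hospital_records = [
    {"health_id": "HID-001", "diagnosis": "type 2 diabetes"},
    {"health_id": "HID-002", "diagnosis": "asthma"},
]
insurer_records = [
    {"health_id": "HID-001", "claims_last_year": 3},
]
pharmacy_records = [
    {"health_id": "HID-001", "prescription": "metformin"},
    {"health_id": "HID-002", "prescription": "salbutamol"},
]


def link_by_identifier(*repositories):
    """Merge every record that shares a health_id into one profile (hypothetical helper)."""
    profiles = defaultdict(dict)
    for repo in repositories:
        for record in repo:
            # The shared identifier is the only thing needed to join the silos.
            profiles[record["health_id"]].update(record)
    return dict(profiles)


profiles = link_by_identifier(hospital_records, insurer_records, pharmacy_records)
print(profiles["HID-001"])
# {'health_id': 'HID-001', 'diagnosis': 'type 2 diabetes', 'claims_last_year': 3, 'prescription': 'metformin'}
```

Siloing, access control and encryption are the safeguards that would prevent a party from assembling such a join in practice; the sketch deliberately has none of them.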

Clause 12 allows non-consensual processing of personal data where it is necessary “for the performance of any function of the state authorised by law” in order to provide a service or benefit from the State. In the previous post, I highlighted the significance of relying on ‘necessity’ alone, to the exclusion of ‘proportionality’. Now we need to consider what this means in light of the emerging digital healthcare apparatus being created by the state.

The National Health Stack and National Digital Health Mission together envision an intricate system of data collection and exchange which, in a regulatory vacuum, would ensure unfettered access to sensitive healthcare data for both the state and private actors registered with the platforms. The Stack framework relies on repositories where data may be accessed from multiple nodes within the system. Importantly, the Strategy Paper also envisions health data fiduciaries to facilitate consent-driven interaction between entities that generate health data and entities that want to consume health records to deliver services to the individual. The cast of characters includes the National Health Authority; health care providers and insurers who access the National Health Electronic Registries; unified data from different programmes such as the National Health Resource Repository (NHRR), the NIN database, NIC and the Registry of Hospitals in Network of Insurance (ROHINI); and private actors such as Swasth and iSpirt, which assist the Mission as volunteers. The currency that government and private actors are interested in is data.

The promised benefits of healthcare data in anonymised and aggregate form range from Disease Surveillance to Pharmacovigilance, as well as Health Schemes Management Systems and Nutrition Management, benefits that have only been more acutely emphasised during the pandemic. However, the pandemic has also normalised the sharing of sensitive healthcare data with a variety of actors, without much thought given to much-needed data minimisation practices.

The potential misuses of healthcare data include greater state surveillance and control, as well as predatory and discriminatory practices by private actors, who could rely on Clause 12 to do away with even the pretence of informed consent so long as the state and its private sector partners deem the processing of data necessary to provide any service or benefit.

Subclause (e) in Clause 12, which was added in the last version of the Bill drafted by MeitY and has been retained by the JPC, allows processing wherever it is necessary for ‘any measures’ to provide medical treatment or health services during an epidemic, outbreak or threat to public health. Yet again, the overly broad language used here is designed to ensure that any annoyances of informed consent can be easily brushed aside wherever the state intends to take any measures under any scheme related to public health.

How, then, does the framework under Clause 12 alter the consent and purpose limitation model? Data protection laws introduce an element of control by tying purpose limitation to consent. Individuals provide consent to specified purposes, and data processors are required to respect that choice. Where there is no consent, the purposes of data processing are sought to be limited by the necessity principle in Clause 12. The state (or authorised parties) must be able to demonstrate necessity to the exercise of a state function, and data must only be processed for those purposes that flow out of this necessity. However, unlike the consent model, this provides an opportunity to keep reinventing purposes for different state functions.
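The contrast described above can be illustrated with a toy Python model. It is a sketch under loudly simplified assumptions: the `ProcessingRequest` type, both checking functions, and the purposes and state functions used are hypothetical, and "necessity" is reduced to a yes/no assertion by the state. That reduction mirrors the author's concern about a low threshold; it is not how a court would actually assess necessity, and it is not how the Bill is implemented anywhere.

```python
from dataclasses import dataclass


@dataclass
class ProcessingRequest:
    """A hypothetical request to process someone's personal data."""
    purpose: str              # what the data will be used for
    state_function: str       # the state function the purpose is said to serve
    declared_necessary: bool  # whether the state asserts the processing is necessary


def allowed_with_consent(request: ProcessingRequest, consented_purposes: set[str]) -> bool:
    # Consent model: processing is limited to purposes the individual agreed to.
    return request.purpose in consented_purposes


def allowed_under_clause_12(request: ProcessingRequest, functions_authorised_by_law: set[str]) -> bool:
    # Simplified necessity ground: the broad state function needs legal backing and
    # necessity must be asserted. There is no minimal-impairment or balancing step.
    return request.declared_necessary and request.state_function in functions_authorised_by_law


consented = {"issue ration card"}
functions_authorised_by_law = {"public distribution", "welfare services"}

original = ProcessingRequest("issue ration card", "public distribution", True)
new_purpose = ProcessingRequest("build resident data hub", "welfare services", True)

print(allowed_with_consent(original, consented))      # True
print(allowed_with_consent(new_purpose, consented))   # False
print(allowed_under_clause_12(new_purpose, functions_authorised_by_law))  # True
```

Under the consent check, a new purpose fails until the individual agrees to it; under the simplified necessity check, any new purpose passes as long as it can be attached to some function that has legal backing.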

In the absence of a data protection law, data collected by one agency is shared indiscriminately with other agencies and used for multiple purposes beyond the purpose for which it was collected. The consent and purpose limitation model would have addressed this issue. But, by having a low threshold for non-consensual processing under Clause 12, this form of data processing is effectively being legitimised.

Nothing to Kid About – Children's Data Under the New Data Protection Bill

The article was originally published in the Indian Journal of Law and Technology.

For children, the internet has shifted from being a form of entertainment to a medium to connect with friends and seek knowledge and education. However, each time they access the internet, data about them and their choices is recorded by companies and unknown third parties, often without their knowledge. The growth of EdTech apps in India has led to growing concerns regarding children's data privacy, which in turn has led to the creation of a self-regulatory body, the Indian EdTech Consortium. More recently, the Advertising Standards Council of India has also begun considering a draft regulation to keep a check on EdTech advertisements.

The Joint Parliamentary Committee (JPC), tasked with drafting and revising the Data Protection Bill, had to consider the changes that had taken place since the release of the 2019 version of the Bill. The most significant change was the removal of the term “personal data” from the title of the Bill, in a move to create a comprehensive Data Protection Bill covering both personal and non-personal data, but certain other provisions also saw additions and removals. In its revised version of the Bill, the JPC removed an entire class of data fiduciaries, the guardian data fiduciary, which had been tasked with greater responsibility for managing children's data. The JPC justified the removal by stating that consent from the child's guardian is sufficient to meet the ends for which children's personal data is processed by the data fiduciary. However, while thought has been given to how the guardian gives consent on behalf of the child, the age of consent in the Bill was left unchanged. Keeping the age of consent under the Bill the same as the age of majority for entering into a contract under the Indian Contract Act, 1872 (18 years) reveals the disconnect between the law and the ground reality of how children interact with the internet.

In the current state of affairs, where Indian children are navigating the digital world on their own, there is a need to look closely at the processing of children’s data, as well as at ways to ensure that children have information about consent and informational privacy. By placing the onus of granting consent on parents, the PDP Bill fails to consider how consent works in a privacy policy–based consent model and how this, in turn, harms children in the long run.

1. Age of Consent

By setting the age of consent at 18 years, the Data Protection Bill, 2021 brings all individuals under 18 under one umbrella, making no distinction between the internet usage of a 5-year-old child and that of a 16-year-old teenager. There is a need to look at children's current internet usage habits and assess whether requiring parental consent is reasonable or even practical. It is also pertinent to note that the law in the offline world does distinguish between age and maturity. For example, it has been highlighted that under Section 82 of the Indian Penal Code, nothing done by a child under 7 years of age is an offence, while under Section 83, whether an act by a child aged 7 to 12 years is an offence depends on the child's maturity of understanding (individuals between 16 and 18 years can also be tried as adults for heinous crimes). Similarly, child labour laws in the country allow children above the age of 14 years to work in non-hazardous industries, which would bring them within Clause 13 of the Bill, which deals with employee data.

A 2019 report suggests that two-thirds of India’s internet users are in the 12–29 years age group, accounting for about 21.5% of the total internet usage in metro cities. With the emergence of cheaper phones equipped with faster processing and low internet data costs, children are no longer passive consumers of the internet. They have social media accounts and use several applications to interact with others and make purchases. There is a need to examine how children and teenagers interact with the internet as well as the practicality of requiring parental consent for the usage of applications.

Most applications that require age data ask users to type in their date of birth, and it is not difficult for a child to enter a date that makes them appear to be over 18. In such cases, although the users are still children, the content presented to them will be meant for adults, including content that might be disturbing or that involves alcohol and gambling. Additionally, applications sometimes state in their privacy policies that they are not suited for, and are restricted from, users under 18. Here, data fiduciaries avoid liability by placing the onus on users to declare their age and to read and understand the privacy policy properly.

Some members of the JPC have also highlighted reservations about the age of consent under the Bill in their dissenting opinions. MP Ritesh Pandey suggested that the age of consent should be reduced to 14 years, keeping the best interests of children in mind and to help children benefit from technological advances. Similarly, MP Manish Tewari, in his dissenting opinion, suggested regulating data fiduciaries based on the type of content they provide or the data they collect.
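The weakness of self-declared age checks can be seen in a minimal Python sketch. It assumes a simple form in which the user types a date of birth and the service compares the computed age with 18; the function names and dates are hypothetical and do not describe any particular application. Nothing in such a gate verifies that the typed date is true, so the outcome depends entirely on what the child chooses to enter.

```python
from datetime import date


def age_on(dob: date, today: date) -> int:
    """Whole years between a date of birth and a given day."""
    years = today.year - dob.year
    # Subtract one if this year's birthday has not happened yet.
    if (today.month, today.day) < (dob.month, dob.day):
        years -= 1
    return years


def passes_age_gate(declared_dob: date, today: date, minimum_age: int = 18) -> bool:
    # The gate trusts whatever date the user types; nothing checks that it is true.
    return age_on(declared_dob, today) >= minimum_age


today = date(2022, 2, 1)
real_dob = date(2008, 6, 15)   # a 13-year-old's actual date of birth (hypothetical)
typed_dob = date(2000, 6, 15)  # the date the same child chooses to enter

print(passes_age_gate(real_dob, today))   # False
print(passes_age_gate(typed_dob, today))  # True
```

This is why the text's point about onus matters: a service using such a gate can claim it asked for the user's age while bearing none of the risk of a false answer.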

2. How is the 2021 Bill Different from the 2019 Bill?

The 2019 draft of the Bill included a class of data fiduciaries called guardian data fiduciaries: entities that operate commercial websites or online services directed at children, or that process large volumes of children’s personal data. This class of fiduciaries was barred from profiling, tracking, behavioural monitoring of, and running targeted advertising directed at children, and from undertaking any other processing of personal data that could cause significant harm to the child. Any violation of Chapter IV could attract a penalty of up to INR 15 crore or 4% of the worldwide turnover of the data fiduciary for the preceding financial year, whichever is higher. However, this separate class of data fiduciaries did not have any additional responsibilities. It was also unclear whether a data fiduciary that did not, by definition, fall within this category would be allowed to engage in activities that could cause ‘significant harm’ to children.

The new Bill also does not provide any mechanism for age verification; it only lays down the considerations to be taken into account when specifying verification processes. Furthermore, the JPC has suggested that the consent options available to a child on attaining the age of majority, i.e. 18 years, should be included within the rules framed by the Data Protection Authority instead of being included in the Bill.

3. In the Absence of a Guardian Data Fiduciary

The 2018 and 2019 drafts of the PDP Bill consider a child to be any person below the age of 18 years. For a child to access online services, the data fiduciary must first verify the age of the child and obtain consent from their guardian. The Bill does not provide an explicit process for age verification apart from stating that regulations shall be drafted in this regard. The 2019 Bill states that the Data Protection Authority shall specify codes of practice in this matter. Taking best practices into account, there is a need for ‘user-friendly and privacy-protecting age verification techniques’ to encourage safe navigation across the internet. This will require looking at technological developments and different standards worldwide. There is a need to hold companies accountable for protecting children’s online privacy and for the harm their algorithms cause children, and to ensure that such harms do not continue.

The JPC in the 2021 version of the Bill removed provisions about guardian data fiduciaries, stating that there was no advantage in creating a different class of data fiduciary. As per the JPC, even those data fiduciaries that did not fall within the said classification would need to comply with rules pertaining to the personal data of children, i.e. with Section 16 of the Bill. Section 16 of the Bill requires the data fiduciary to verify the child’s age and obtain consent from the parent/guardian. The manner of age verification has also been spelt out. Furthermore, since ‘significant data fiduciaries’ is an existing class, there is still a need to comply with rules related to data processing. The JPC also removed the phrases “in the best interests of, the child” and “is in the best interests of, the child” under sub-clause 16(1), reasoning that the entire Bill concerns the rights of the data principal and that the use of such terms dilutes the purpose of the legislation and could open the door to manipulation by the data fiduciary.

Conclusion

Over the past two years, there has been a significant increase in applications targeted at children. There has been a proliferation of EduTech apps, which should ideally bear greater responsibility since they process children's data. We recommend that instead of creating a separate category, such fiduciaries collecting children's data or providing services to children be seen as ‘significant data fiduciaries’ that need to take up additional compliance measures.

Furthermore, any blanket prohibition on tracking children may obstruct safety measures that could be implemented by data fiduciaries. Similar concerns are growing in other jurisdictions, since such prohibitions could restrict data fiduciaries from using software that detects Child Sexual Abuse Material (CSAM) and online predatory behaviour. Additionally, concerning the age of consent under the Bill, the JPC could look at international best practices and devise ways to ensure that children can use the internet and have rights over their data, which would enable them to grow up with greater awareness of data protection and privacy. One example to look at is the Children's Online Privacy Protection Rule (COPPA) in the US, which applies to operators of websites and online services directed at children under 13 that collect personal information from them, and to operators of general-audience websites or online services that have actual knowledge that they collect personal information from children under 13. A combination of this system and the significant data fiduciary classification could be one way to ensure that children’s data and privacy are protected online.
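A rough way to see how COPPA's two triggers fit together is to write them as a predicate. The Python sketch below is a deliberate simplification, not a statement of the law: the `OnlineService` fields and the `coppa_style_coverage` function are hypothetical stand-ins for legal tests that in practice turn on many more factors.

```python
from dataclasses import dataclass


@dataclass
class OnlineService:
    # Hypothetical, simplified attributes; the real legal tests are fact-specific.
    directed_to_children: bool       # aimed at children under 13
    collects_personal_info: bool     # collects personal information from users
    knows_it_has_child_users: bool   # actual knowledge of collecting from under-13s


def coppa_style_coverage(service: OnlineService) -> bool:
    """Simplified reading of COPPA's two triggers.

    Covered if the service collects personal information AND either
    (a) it is directed at children, or
    (b) it is a general-audience service with actual knowledge that it
        collects personal information from children under 13.
    """
    if not service.collects_personal_info:
        return False
    return service.directed_to_children or service.knows_it_has_child_users


if __name__ == "__main__":
    services = {
        "edtech_app": OnlineService(True, True, False),
        "general_forum": OnlineService(False, True, True),
        "news_site": OnlineService(False, True, False),
    }
    for name, svc in services.items():
        print(name, coppa_style_coverage(svc))
```

Under the combination suggested above, a `True` result could, for instance, serve as the trigger for treating a fiduciary as a significant data fiduciary; that mapping is only an illustration, not a rule in the Bill or in COPPA.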

The authors are researchers at the Centre for Internet and Society and thank their colleague Arindrajit Basu for his inputs.

Response to MeitY's India Digital Ecosystem Architecture 2.0 Comment Period

This submission presents a response by the Centre for Internet & Society (CIS) to MeitY's India Digital Ecosystem Architecture 2.0 Comment Period (hereinafter, the “Consultation”) released in February 2022. CIS appreciates MeitY's consultations, and is grateful for the opportunity to put forth its views and comments.

Read the response here

Cybernorms: Do they matter IRL (In Real Life): Event Report

During the first half of the year, multilateral forums including the United Nations made some progress in identifying norms, rules, and principles to guide responsible state behaviour in cyberspace, even though the need for political compromise between opposing geopolitical blocs stymied progress to a certain extent.

There is certainly a need to formulate more concrete rules and norms. However, at the same time, the international community must assess the extent to which existing norms are being implemented by states and non-state actors alike. Applying agreed norms to "real life" throws up challenges of interpretation and enforcement, to which the only long-term solution remains regular dialogue and exchange both between states and other stakeholders.

This was the thinking behind the session titled "Cybernorms: Do They Hold Up IRL (in Real Life)?", organised at RightsCon 2021 by four non-governmental organisations: the Association for Progressive Communications (APC), the Centre for Internet & Society (CIS), Global Partners Digital (GPD), and Research ICT Africa (RIA). Cyber norms do not work unless states and other actors call out violations of norms, actively observe and implement them, and hold each other accountable. As the organisers of the event, we devised hypothetical scenarios based on three real-life examples of large-scale incidents and engaged with discussants who sought to apply agreed cyber norms to them. We chose to create scenarios without referring to real states as we wanted the discussion to focus on the implementation and interpretation of norms rather than the specific political situation of each actor.

Through this interactive exercise involving an array of expert stakeholders (including academics, civil society, the technical community, and governments) and communities from different regions, we sought to answer whether and how the application of cyber norms can mitigate harms, especially to vulnerable communities, and identify possible gaps in current normative frameworks. For each scenario, we aimed to diagnose whether cyber norms have been violated, and if so, what could and should be done, by identifying the next steps that can be taken by all the stakeholders present. For each scenario, we highlight why we chose it, outline the main points of discussion, and articulate key takeaways for norm implementation and interpretation. We hope this exercise will feed into future conversations around both norm creation and enforcement by serving as a framework for guiding optimal norm enforcement.

Read the full report here

CIS Seminar Series

The first seminar in the series was held on 7th and 8th October on the theme of ‘Information Disorder: Mis-, Dis- and Malinformation’.

Theme for the Second Seminar (to be held online)

Moderating Data, Moderating Lives: Debating visions of (automated) content moderation in the contemporary

Artificial Intelligence (AI) and Machine Learning (ML) based approaches have become increasingly popular as “solutions” to curb the extent of mis-, dis- and mal-information, hate speech, online violence and harassment on social media. The pandemic and the ensuing work-from-home policies forced many platforms to shift to automated moderation, which further highlighted the inefficacy of existing models (Gillespie, 2020) in dealing with the surge in misinformation and harassment. These efforts, however, raise a range of interrelated concerns, such as freedom and regulation of speech in the privately owned public sphere of social media platforms; algorithmic governance, censorship and surveillance; the relation between virality, hate, algorithmic design and profits; and the social, political and cultural implications of ordering social relations through the computational logics of AI/ML.

On one hand, large-scale content moderation approaches (including automated AI/ML-based approaches) have been deemed “necessary” given the enormity of data generated (Gillespie, 2020); on the other hand, they have been regarded as “technological fixes” offered by Silicon Valley (Morozov, 2013), or as “tyrannical” because they erode existing democratic measures (Harari, 2018). Alternatively, decolonial, feminist and postcolonial approaches insist on designing AI/ML models that centre the voices of those excluded, in order to sustain and further civic spaces on social media (Siapera, 2022).

From the global south perspective, issues around content moderation foreground the hierarchies inbuilt in the existing knowledge infrastructures. First, platforms remain unwilling to moderate content in under-resourced languages of the global south citing technological difficulties. Second, given the scale and reach of social media platforms and inefficient moderation models, the work is outsourced to workers in the global south who are meant to do the dirty work of scavenging content off these platforms for the global north. Such concerns allow us to interrogate the techno-solutionist approaches as well as their critiques situated in the global north. These realities demand that we articulate a different relationship with AI/ML while also being critical of AI/ML as an instrument of social empowerment for those at the “bottom of the pyramid” (Arora, 2016).

The seminar invites scholars interested in articulating nuanced responses to content moderation that take into account the harms perpetrated by algorithmic governance of social relations and by irresponsible intermediaries, while being cognizant of the harmful effects of mis-, dis- and mal-information, hate speech, online violence and harassment on social media.

We invite abstract submissions that respond to these complexities vis-a-vis content moderation models or propose provocations regarding automated moderation models and their in/efficacy in furthering egalitarian relationships on social media, especially in the global south.

Submissions can reflect on the following themes using legal, policy, social, cultural and political approaches. Also, the list is not exhaustive and abstracts addressing other ancillary concerns are most welcome:

- Metaphors of (content) moderation: mediating utopia, dystopia, scepticism surrounding AI/ML approaches to moderation.

- From toxic to healthy, from purity to impurity: Interrogating gendered, racist, colonial tropes used to legitimize content moderation

- Negotiating the link between content moderation, censorship and surveillance in the global south

- Whose values decide what is and is not harmful?

- Challenges of building moderation models for under-resourced languages.

- Content moderation, algorithmic governance and social relations.

- Communicating algorithmic governance on social media to the not so “tech-savvy” among us.

- Speculative horizons of content moderation and the future of social relations on the internet.

- Scavenging abuse on social media: Immaterial/invisible labour for making for-profit platforms safer to use.

- Do different platforms moderate differently? Interrogating content moderation on diverse social media platforms, and multimedia content.

- What should and should not be automated? Understanding prevalence of irony, sarcasm, humour, explicit language as counterspeech.

- Maybe we should not automate: Alternative, bottom-up approaches to content moderation

Seminar Format

We are happy to welcome abstracts for one of two tracks:

Working paper presentation

A working paper presentation would ideally involve a working draft that is presented for about 15 minutes followed by feedback from workshop participants. Abstracts for this track should be 600-800 words in length with clear research questions, methodology, and questions for discussion at the seminar. Ideally, for this track, authors should be able to submit a draft paper two weeks before the conference for circulation to participants.

Coffee-shop conversations

In contrast to the formal paper presentation format, the point of the coffee-shop conversations is to enable an informal space for presentation and discussion of ideas. Simply put, it is an opportunity for researchers to “think out loud” and get feedback on future research agendas. Provocations for this should be 100-150 words containing a short description of the idea you want to discuss.

We will try to accommodate as many abstracts as possible given time constraints. We welcome submissions from students and early career researchers, especially those from under-represented communities.

All discussions will be private and conducted under the Chatham House Rule. Drafts will only be circulated among registered participants.

Please send your abstracts to [email protected].

Timeline

- Abstract Submission Deadline: 18th April

- Results of Abstract review: 25th April

- Full submissions (of draft papers): 25th May

- Seminar date: Tentative 31st May

References

Arora, P. (2016). Bottom of the Data Pyramid: Big Data and the Global South. International Journal of Communication, 10(0), 19.

Gillespie, T. (2020). Content moderation, AI, and the question of scale. Big Data & Society, 7(2), 2053951720943234. https://doi.org/10.1177/2053951720943234

Harari, Y. N. (2018, August 30). Why Technology Favors Tyranny. The Atlantic. https://www.theatlantic.com/magazine/archive/2018/10/yuval-noah-harari-technology-tyranny/568330/

Morozov, E. (2013). To save everything, click here: The folly of technological solutionism (First edition). PublicAffairs.

Siapera, E. (2022). AI Content Moderation, Racism and (de)Coloniality. International Journal of Bullying Prevention, 4(1), 55–65. https://doi.org/10.1007/s42380-021-00105-7

Personal Data Protection Bill must examine data collection practices that emerged during pandemic

The article by Shweta Mohandas and Anamika Kundu was originally published by news nine on November 29, 2021.

The Personal Data Protection Bill (PDP) is expected to be introduced in the winter session of Parliament, and the report of the Joint Parliamentary Committee (JPC) was adopted by the committee on Monday. The JPC report comes after almost two years of deliberation and secrecy over what the final version of the Personal Data Protection Bill would look like. Since the publication of the 2019 version of the PDP Bill, the Covid-19 pandemic and the public safety measures taken in response have given rise to new organisations collecting personal data, and new reasons for collecting it, that did not exist in 2019. Hence, along with the changes suggested by multiple civil society organisations and the dissent notes submitted by members of the JPC, the new version of the PDP Bill must also consider how data processing has changed over the past two years.

Concerns with the bill

At the outset, certain parts of the PDP Bill need to be revised in order to uphold the spirit of privacy and individual autonomy laid out in the Puttaswamy judgement. The two sections that most need to be brought in line with the privacy judgement are those that allow non-consensual processing of data by the government and by employers. In its current form, the PDP Bill provides wide-ranging exemptions that allow government agencies to process citizens' data in order to fulfil their responsibilities.

In the 2018 version of the bill, drafted by the Justice Srikrishna Committee, exemptions granted to the State with regard to processing of data were subject to a four-pronged test, which required the processing to be (i) authorised by law; (ii) in accordance with the procedure laid down by law; (iii) necessary; and (iv) proportionate to the interests being achieved. This four-pronged test was in line with the principles laid down by the Supreme Court in the Puttaswamy judgement. The 2019 version of the PDP Bill diluted this principle by retaining only the 'necessity principle' and removing the other requirements, which is not in consonance with the test laid down by the Supreme Court in Puttaswamy.

Section 35 was also widely discussed in the panel meetings, where members argued for the removal of 'public order' as a ground for exemption. Members also pressed for 'judicial or parliamentary oversight' before such exemptions are granted. The final report did not accept these suggestions, citing the need to balance national security with the liberty and privacy of the individual. There ought to be prior judicial review of any written order exempting a governmental agency from the provisions of the bill. Allowing the government to claim an exemption whenever it is satisfied that doing so is "necessary or expedient" leaves the provision open to misuse.

Another clause that gives data fiduciaries wide latitude concerns employee data. Section 13 of the current version of the bill gives employers leeway to process employee data (other than sensitive personal data) without consent on two grounds: when consent is not appropriate, or when obtaining consent would involve disproportionate effort on the part of the employer.

Personal data collected in this way can be processed only for recruitment, termination, attendance, provision of any service or benefit, and assessing performance. This covers almost all activities that require employee data. Although the 2019 version of the bill excludes non-consensual collection of sensitive personal data (a provision that was missing in the 2018 version), there is still considerable scope to improve this provision and give employees further rights over their data. To begin with, the bill does not define 'employee' and 'employer', which could result in confusion, as there is no single definition of these terms across Indian labour laws.

Additionally, the bill distinguishes between employees and consumers, so that a consumer of a company or service has greater rights over their data than an employee of the same company. A consumer, as a data principal, has the option to use another product or service and the right to withdraw consent at any time; for an employee, the consequence of refusing or withdrawing consent could be termination of employment. It is understood that employee data does need to be collected, and that consent does not work the same way for employees as it does for consumers.

The bill could ensure that employers bear some responsibility for the data they collect from employees, such as ensuring that the data is used only for the purpose for which it was collected, that employees know how long their data will be retained, and that employees are informed if their data is processed by third parties. It is also worth mentioning that the Indian government is India's largest employer, spanning a variety of agencies and public enterprises.

Concerns highlighted by JPC Members

Several members of the JPC moved dissent notes, particularly with regard to governmental exemptions. Jairam Ramesh filed a dissent note, and many other opposition members followed suit. While Jairam Ramesh praised the JPC's functioning, he disagreed with certain aspects of the Report. According to him, the 2019 bill is designed in a manner where the right to privacy is given importance only in relation to the activities of private entities. He raised concerns regarding the unbridled powers given to the government to exempt itself from any of the provisions.

The amendment he suggested would require parliamentary approval before any exemption could take effect. He also said that Section 12 of the bill, which sets out scenarios in which consent is not needed for processing personal data, should have been made 'less sweeping'. Gaurav Gogoi's note likewise stated that the exemptions would create a surveillance state, and criticised Sections 12 and 35 of the bill. He also argued that there ought to be parliamentary oversight of the exemptions provided in the bill.

On the same issue, Congress leader Manish Tewari noted that the bill creates 'parallel universes': one for the private sector, which needs to be compliant, and the other for the State, which can exempt itself. He opposed the entire bill, stating that there exists an "inherent design flaw". He raised specific objections to 37 clauses and stated that any blanket exemption for the State goes against the Puttaswamy judgement.

In their joint dissent note, Derek O'Brien and Mahua Moitra said that the bill lacks adequate safeguards to protect data principals' privacy, and that there was insufficient time and opportunity for stakeholder consultation. They also pointed out that the independence of the DPA would cease to exist under the present provision allowing the government to choose its members and chairman. Amar Patnaik objected to the lack of state-level authorities in the bill; without such bodies, he said, there would be federal override.

Conclusion

While civil society, members of the JPC, and the media have highlighted a number of issues, the new version of the bill should also take into account the shifts in data collection practices that emerged during the pandemic. It should be comprehensive and leave very few provisions to be decided later through the Rules.

Comments to the draft Motor Vehicle Aggregators Scheme, 2021

CIS, established in Bengaluru in 2008 as a non-profit organisation, undertakes interdisciplinary research on internet and digital technologies from public policy and academic perspectives. Through its diverse initiatives, CIS explores, intervenes in, and advances contemporary discourse and regulatory practices around internet, technology, and society in India, and elsewhere.

CIS is grateful for the opportunity to submit its comments to the draft Scheme. Please find below our thematically organised comments.

Click here to read more.

Decoding India’s Central Bank Digital Currency (CBDC)

In her budget speech in Parliament on 1 February 2022, the Finance Minister of India, Nirmala Sitharaman, announced that India will launch its own Central Bank Digital Currency (CBDC) from the financial year 2022–23. The lack of information regarding the Indian CBDC project has resulted in limited discussion in the public sphere. This article is an attempt to briefly discuss the basics of CBDCs, such as the definition, necessity, risks, models, and associated technologies, so as to shed more light on India’s CBDC project.

1. What is a CBDC?

Before delving into the various aspects of a CBDC, we must first define it. A CBDC in its simplest form has been described by the RBI as “the same as currency issued by a central bank but [which] takes a different form than paper (or polymer). It is sovereign currency in an electronic form and it would appear as liability (currency in circulation) on a central bank’s balance sheet. The underlying technology, form and use of a CBDC can be moulded for specific requirements. CBDCs should be exchangeable at par with cash.”

2. Policy Goals

Launching any CBDC involves the setting up of infrastructure, which comes with notable costs. It is therefore imperative that the CBDC provides significant advantages that can justify the investment it entails. Some of the major arguments in favour of CBDCs and their relevance in the Indian context are as follows.

Financial Inclusion: In countries with underdeveloped banking and payment systems, proponents believe that CBDCs can boost financial inclusion through the provision of basic accounts and an electronic payment system operated by the central bank. However, financial inclusion may not be a powerful motive in India, where, according to some surveys, 99% of rural and urban households have at least one member with a bank account. Even the US Federal Reserve recognises that further research is needed to assess the potential of CBDCs to expand financial inclusion, especially among underserved and lower-income households.

Access to Payments: It is claimed that CBDCs provide scope for improving the existing payments landscape by offering fast and efficient payment services to users. Further, supporters claim that a well-designed, robust, open CBDC platform could enable a wide variety of firms to compete to offer payment services. It could also enable them to innovate and generate new capabilities to meet the evolving needs of an increasingly digitalised economy. However, it is not yet clear exactly how CBDCs would achieve this objective, or whether there would be any noticeable improvements in the payment systems space in India, which already has a fairly advanced and well-developed payment systems market.

Increased System Resilience: Countries with a highly developed digital payments landscape are aware of their reliance on electronic payment systems. The operational resilience of these systems is of critical importance to the entire payments landscape. A CBDC could not only act as a backup to existing payment systems in an emergency but also reduce credit risk and liquidity risk, i.e., the risk that payment system providers will become insolvent or run out of liquidity. Such risks can also be mitigated through robust regulatory supervision of the entities in the payment systems space.

Increasing Competition: A CBDC has the potential to increase competition in the country’s payments sector in two main ways: (i) directly, by providing an alternative payment system that competes with existing private players, and (ii) indirectly, by providing an open platform for private players, thereby lowering entry barriers for newer players offering more innovative services at lower costs.

Addressing Illicit Transactions: Cash offers a level of anonymity that is not always available with existing payment systems. If a CBDC offers the same level of anonymity as cash, it would pose a greater CFT/AML (Combating the Financing of Terrorism/Anti-Money Laundering) risk. However, if appropriate CFT/AML requirements are built into the design of the CBDC, it could address some of the concerns regarding its use in illegal transactions. Such CFT/AML requirements are already followed by existing banks and payment system providers.

Reduced Costs: If a CBDC is adopted to the extent that it begins to act as a substitute for cash, it could allow the central bank to print less currency, thereby saving costs on printing, transporting, storing, and distributing currency. Such a cost reduction is not exclusive to CBDCs; it can also be achieved through the widespread adoption of existing payment systems.

Reduction in Private Virtual Currencies (VCs): Central banks are of the view that a widely used CBDC will provide users with an alternative to existing private cryptocurrencies and thereby limit various risks, including credit risk, volatility risk, and the risk of fraud. However, if a CBDC does not offer the same level of anonymity or the same potential for high returns on investment as existing VCs, it may not be considered an attractive alternative.

Serving Future Needs: Several central banks see the potential for “programmable money” that can be used to conduct transactions automatically on the fulfilment of certain conditions, rules, or events. Such a feature may be used for automatic routing of tax payments to authorities at the point of sale, shares programmed to pay dividends directly to shareholders, etc. Specific programmable CBDCs can also be issued for certain types of payments such as toward subway fees, shared bike fees, or bus fares. This characteristic of CBDCs has huge potential in India in terms of delivery of various subsidies.
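The idea of "programmable money" can be illustrated with a toy ledger. The Python sketch below is hypothetical and does not describe any central bank's actual design: it assumes an account-based ledger holding amounts in paise, a rule that routes the tax share to a tax authority at the point of sale, and a subsidy balance earmarked for one purpose (here, bus fares) that cannot be spent elsewhere. All names and the 18% rate in the usage example are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Wallet:
    free: int = 0  # ordinary balance, in paise
    earmarked: dict[str, int] = field(default_factory=dict)  # purpose -> paise


class ProgrammableLedger:
    """Toy account-based ledger with two hypothetical programmable rules."""

    def __init__(self) -> None:
        self.wallets: dict[str, Wallet] = {}

    def wallet(self, owner: str) -> Wallet:
        # Create an empty wallet the first time an owner is seen.
        return self.wallets.setdefault(owner, Wallet())

    def pay_with_tax(self, buyer: str, seller: str, tax_authority: str,
                     price: int, tax_rate_bp: int) -> int:
        """Pay `price` plus tax; the tax share goes straight to the authority."""
        tax = price * tax_rate_bp // 10_000  # rate in basis points, rounded down
        buyer_wallet = self.wallet(buyer)
        # Check before moving anything, so a failed payment changes no balance.
        if buyer_wallet.free < price + tax:
            raise ValueError("insufficient balance")
        buyer_wallet.free -= price + tax
        self.wallet(seller).free += price
        self.wallet(tax_authority).free += tax
        return tax

    def issue_subsidy(self, beneficiary: str, purpose: str, amount: int) -> None:
        """Credit a balance that can only be spent on `purpose`."""
        w = self.wallet(beneficiary)
        w.earmarked[purpose] = w.earmarked.get(purpose, 0) + amount

    def spend_subsidy(self, beneficiary: str, merchant: str,
                      merchant_category: str, amount: int) -> None:
        """Spend earmarked money only at a merchant of the matching category."""
        w = self.wallet(beneficiary)
        available = w.earmarked.get(merchant_category, 0)
        if available < amount:
            raise ValueError(f"no {merchant_category!r} subsidy to cover this payment")
        w.earmarked[merchant_category] = available - amount
        self.wallet(merchant).free += amount


if __name__ == "__main__":
    ledger = ProgrammableLedger()
    ledger.wallet("citizen").free = 100_000  # Rs 1,000
    tax = ledger.pay_with_tax("citizen", "shop", "tax_authority",
                              price=50_000, tax_rate_bp=1_800)  # illustrative 18%
    print(tax, ledger.wallet("citizen").free)  # 9000 41000
    ledger.issue_subsidy("citizen", "bus_fare", 2_000)
    ledger.spend_subsidy("citizen", "bus_depot", "bus_fare", 1_500)
    print(ledger.wallet("citizen").earmarked)  # {'bus_fare': 500}
```

Keeping the subsidy in a separate earmarked balance, rather than tagging individual coins, is just one simple way to model purpose-bound money; real designs could differ considerably.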

3. Potential Risks

As with most things, CBDCs have certain drawbacks and risks that need to be considered and mitigated in the designing phase itself. A successful and widely adopted CBDC could change the structure and functions of various stakeholders and institutions in the economy.

Both private and public sector banks rely on bank deposits to fund their lending. Since bank deposits offer a safe and nearly risk-free way to park one’s savings, a large number of people use them, providing banks with a large pool of funds for lending. A CBDC could offer the public an even safer alternative, since it eliminates even the minute risk of the bank becoming insolvent. A widely accepted CBDC could therefore draw money away from bank deposits, reducing the funds available for lending by banks and adversely affecting credit in the economy. Further, since a CBDC is a safer form of money, in times of stress people may convert funds held in banks into CBDC, which might trigger a bank run. However, these issues can be mitigated by making CBDC holdings non-interest-bearing, which reduces their attractiveness as an alternative to bank deposits. Further, in times of monetary stress, the central bank could restrict the amount of bank money that can be converted into CBDC, just as it has done with cash withdrawals from specific banks found to be under extreme financial stress.
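The two mitigations described above can be expressed as simple policy parameters. In this hypothetical Python sketch, the names `CBDCPolicy`, `annual_interest` and `permitted_conversion`, the 3% deposit rate and the daily conversion limit are all illustrative assumptions, not figures from the RBI or any other central bank.

```python
from dataclasses import dataclass


@dataclass
class CBDCPolicy:
    """Hypothetical parameters for the two mitigations described above."""
    cbdc_rate_bp: int = 0                # non-interest-bearing by design
    stress_mode: bool = False            # switched on by the central bank in times of stress
    stress_daily_limit: int = 1_000_000  # max paise convertible per user per day under stress (illustrative)


def annual_interest(balance: int, rate_bp: int) -> int:
    """Simple annual interest in paise, with the rate in basis points."""
    return balance * rate_bp // 10_000


def permitted_conversion(policy: CBDCPolicy, requested: int, converted_today: int) -> int:
    """How much of a requested bank-deposit-to-CBDC conversion may go through today."""
    if requested < 0 or converted_today < 0:
        raise ValueError("amounts must be non-negative")
    if not policy.stress_mode:
        return requested  # no cap in normal times
    remaining = max(policy.stress_daily_limit - converted_today, 0)
    return min(requested, remaining)


if __name__ == "__main__":
    savings = 10_000_000   # Rs 1,00,000 in paise
    deposit_rate_bp = 300  # illustrative 3% bank deposit rate
    policy = CBDCPolicy()
    print(annual_interest(savings, deposit_rate_bp))      # 300000 (Rs 3,000)
    print(annual_interest(savings, policy.cbdc_rate_bp))  # 0

    print(permitted_conversion(policy, 2_000_000, 400_000))  # 2000000
    policy.stress_mode = True
    print(permitted_conversion(policy, 2_000_000, 400_000))  # 600000
```

The zero rate removes the incentive to move savings out of interest-bearing deposits, while the stress-mode limit slows, rather than forbids, conversion during a run.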

If a significant portion of a country’s population adopts a private digital currency, it could seriously hamper the central bank's ability to carry out several crucial functions, such as implementing monetary policy and controlling inflation.

It may be safe to say that the question of how CBDCs may affect the economy in general and more specifically, the central bank’s ability to implement monetary policy, seigniorage, financial stability, etc. requires further research and widespread consultation to mitigate any potential risk factors.

4. The Role of the Central Bank in a CBDC

The next issue that requires attention when dealing with CBDCs is the role and level of involvement of the central bank. This would depend not only on the number of additional functions that the central bank is comfortable adopting but also on the maturity of the fintech ecosystem in the country. Broadly speaking, there are three basic models concerning the role of the central bank in CBDCs:

(i) Unilateral CBDCs: The central bank performs all functions, from issuing the CBDC to executing and verifying transactions and dealing with users by maintaining their accounts.

(ii) Hybrid or Intermediate Model: In this model, the central bank issues the CBDC, but private firms carry out some of the other functions, such as providing wallets to end users, verifying transactions, and updating ledgers. These private entities would be regulated by the central bank to ensure adequate supervision.

(iii) Synthetic CBDCs: In this model, the CBDC itself is not issued by the central bank but by private players. However, these CBDCs are backed by central bank liabilities, thus providing the sovereign stability that is the hallmark of a CBDC.

These models could also be adapted to suit the needs of the economy. For example, the hybrid model could be modified so that, alongside private players performing the user-facing functions, the central bank or another public sector enterprise also offers the same functions. Such an arrangement has the potential to offer services at a lower price (perhaps with reduced functionality), thereby fulfilling the financial inclusion and cost reduction policy goals mentioned above.

5. Role of Blockchain Technology

While the concept of a CBDC evolved from cryptocurrencies, and popular cryptocurrencies like Bitcoin and Ether are based on blockchain technology, recent research suggests that blockchain need not be the default technology for a CBDC. Jurisdictions also differ in their views on the merits and demerits of distributed ledger technology (DLT). For example, the Bahamas and the Eastern Caribbean Central Bank have adopted DLT-based systems, whereas China has decided that DLT-based systems lack the capacity to process transactions and store data at the scale its system requires.

Similarly, "Project Hamilton", a project by the Massachusetts Institute of Technology (MIT) Digital Currency Initiative and the Federal Reserve Bank of Boston to explore the CBDC design space and its technical challenges and opportunities, concluded that a distributed ledger operated by multiple actors is not necessarily crucial. It also found that, even when controlled by a single actor, a DLT architecture has downsides, such as performance bottlenecks and significantly lower transaction throughput and scalability than other designs.

6. Conclusion

Although a CBDC potentially offers some advantages, launching one is an expensive and complicated proposition that requires in-depth research and detailed analysis of a large number of issues, only some of which have been highlighted here. Therefore, before launching a CBDC, central banks typically issue white papers, consult the public as well as major stakeholders, and conduct pilot projects to ensure that the issue is analysed from all possible angles. Although the Reserve Bank of India is examining various issues, such as whether the CBDC would be retail or wholesale, the validation mechanism, the underlying technology, the distribution architecture, and the degree of anonymity, it has not yet released any consultation papers or confirmed the completion of any pilot programmes for the CBDC project.

It is therefore unclear whether the government or the RBI has carried out any detailed cost–benefit analysis of the CBDC's feasibility and its benefits over existing payment systems, and whether such benefits justify the cost of investing in a CBDC. For example, several of the potential advantages discussed here, such as financial inclusion and improved payment systems, may not be relevant in the Indian context, while others, such as reduced costs and fewer illegal transactions, may be achievable by improving existing systems. It must be noted that the current system of distributing central bank money has worked well over the years, and systemic changes should be made only if the potential upside justifies them.

The Government of India has already announced the launch of the Indian CBDC in early 2023, but the lack of public consultation on such an important project is a matter of concern. The last time the RBI took a major decision in the crypto space without consulting stakeholders was when it barred financial institutions from dealing with crypto entities. On that occasion, the Supreme Court struck down the circular imposing the ban as a violation of the fundamental right to trade and profession. It is therefore imperative that the government and the Reserve Bank consult widely with experts and the public and carry out a detailed, thorough cost–benefit analysis to determine the project's feasibility before deciding to launch an Indian CBDC.

Response to the Pegasus Questionnaire issued by the SC Technical Committee

The questionnaire had 11 questions, and responses had to be submitted through an online form by March 31, 2022. CIS submitted the following responses to the questions in the questionnaire. Access the Response to the Questionnaire

Rethinking Acquisition of Digital Devices by Law Enforcement Agencies

This article was originally published in the RGNUL Student Research Review (RSRR) Journal.

Abstract

The Criminal Procedure Code was enacted in the 1970s, when the right to privacy was largely unacknowledged. Following the Supreme Court's Puttaswamy I (2017) judgement affirming the right to privacy, its antiquated provisions must be re-evaluated. Today, the police can acquire digital devices through summons and gain direct access to a person's life, even though the summons mechanism was intended for targeted, narrow enquiries. Once in possession of a device, the police attempt to circumvent the right against self-incrimination by demanding biometric passwords, arguing that the right does not cover biometric information. However, given the extent of information available on digital devices, courts ought to be cautious and strive to limit the power of the police to compel such disclosures, taking the right to privacy judgement into consideration.

Keywords: Privacy, Criminal Procedure Law, CrPC, Constitutional Law

Introduction

New challenges confront the Indian criminal investigation framework, particularly where law enforcement agencies (LEAs) acquire digital devices and their passwords. The criminal procedure codes that delimit police authority and procedures were drafted before digital devices came into widespread use, and they are ill-suited to the modern age given the magnitude of information available on a single device. A single device could provide LEAs with more information than a complete search of a person's home; yet, the acquisition of a digital device is not treated with the severity and caution it deserves. Following the affirmation of the right to privacy in Puttaswamy I (2017), criminal procedure codes must be revamped to recognise that acquiring a person's digital device constitutes a major infringement of their right to privacy.

Acquisition of digital devices by LEAs through summons

Section 91 of the Criminal Procedure Code (CrPC) empowers a court or the officer in charge of a police station to compel a person to produce any document or other 'thing' that is necessary or desirable for a criminal investigation. In Rama Krishna v State, 'necessary' and 'desirable' have been interpreted to cover any piece of evidence relevant to the investigation or forming a link in the chain of evidence. Abhinav Sekhri, a criminal law litigator and writer, has argued that the broad wording of this section allows summons to be directed at the retrieval of specific digital devices.

As summons are target-specific, the section contains minimal safeguards. However, several issues arise when summons are issued for digital devices. Today, access to a user's personal device can provide comprehensive insight into their life and personality because of the vast amounts of private and personal information stored on it. In Riley v California, the Supreme Court of the United States (SCOTUS) observed that, given the nature of the content on digital devices, accessing them is equivalent to a roving search, akin to demanding the simultaneous production of all the contents of a home, bank records, call records, and lockers. The Riley decision rightly highlights the need for courts to recognise that digital devices ought to be treated differently from other forms of physical evidence because of the repository of information they hold.

The threshold the state must meet to issue a summons is low, as the relevance requirement is easily satisfied. As noted in Riley, police must identify which evidence on a device is relevant. Given the sheer amount of data on phones, however, it is very easy for the police to claim that some connection must exist between the content on the device and the case. Because of the wide range of offences that Indian LEAs can cite, it is easy for them to argue that the content on the device is relevant to any number of possible offences. LEAs rarely face consequences for burdening the accused with a long list of charges, even if many of them are baseless, which leaves the system prone to abuse. The Indian Supreme Court noted in its Canara Bank judgement that the burden of proof on LEAs must be higher when investigations violate the right to privacy. Tarun Krishnakumar notes that the trickle-down effect of Puttaswamy I will lead to new privacy challenges with regard to summons to appear in court. Puttaswamy I will provide the bedrock and constitutional framework within which future challenges to the criminal process will be undertaken, and it is important for courts to recognise the transformative potential of the judgement in safeguarding citizens' right to privacy.

The colonial logic of policing, wherein criminal procedure law was merely a tool to maximise the interest of the state at the cost of the people, must be abandoned. Courts ought to devise a framework under Section 91 to ensure that summons are narrowly framed to target specific information or content within digital devices. Additionally, summons for a digital device should be issued by a judicial authority, not a police authority. A prior judicial warrant would require LEAs to demonstrate their need for the digital device; having estimated the impact on privacy, the judicial authority could then issue a suitably tailored summons. Currently, the only consideration is whether the item will furnish evidence relevant to the investigation; judges ought instead to balance the LEA's need for the digital device against the user's right to privacy, dignity, and autonomy.

Puttaswamy I provides a triple test of legality, necessity, and proportionality for evaluating privacy claims. Legality requires that the measure be prescribed by law, necessity asks whether it is the least restrictive means available to the state, and proportionality checks whether the objective pursued by the measure is proportional to the degree of infringement of the right. The relevance standard, as mentioned before, is inadequate because it does not provide enough safeguards against abuse. The police can issue summons on the slightest suspicion and thereby gain access to a digital device, after which they can conduct a roving enquiry of the device to find evidence of other offences unrelated to the original cause of suspicion.

Unilateral police summons for digital devices cannot pass the triple test, as they are grossly disproportionate and lack any safeguard against police abuse. The current system has no mechanism for overseeing LEAs; as long as LEAs themselves believe they require a device, they can acquire it. In Riley, SCOTUS has already held that the police generally must obtain a warrant before searching the data on a phone seized from an arrested person. India ought also to require a prior judicial warrant for LEAs to procure devices. A re-imagined criminal process would have to abide by the triple test, in particular proportionality, whereby the benefit claimed by the state must not be disproportionate to the impact on the fundamental right to privacy; further, a framework must be put in place to provide safeguards against abuse.

Compelling the production of passwords of devices

In police investigations, gaining possession of a physical device is merely the first step in acquiring its data, as LEAs still require the passcode to unlock the device. When LEAs compel the production of passcodes to access potentially incriminating data, obvious questions arise regarding the right against self-incrimination; in the context of digital devices, however, privacy issues arise as well.

In Kathi Kalu Oghad, the Supreme Court held that compelling an accused person to provide fingerprints for comparison with fingerprints discovered by the LEA during its investigation does not violate the accused's right against self-incrimination. It has been argued that the ratio of the judgement prohibits compelling the disclosure of passwords and biometrics to unlock devices, because Kathi Kalu Oghad dealt only with producing fingerprints for comparison with pre-existing evidence, as opposed to using a fingerprint to unlock new evidence. However, the judgement deals only with self-incrimination and does not address privacy.