Blog

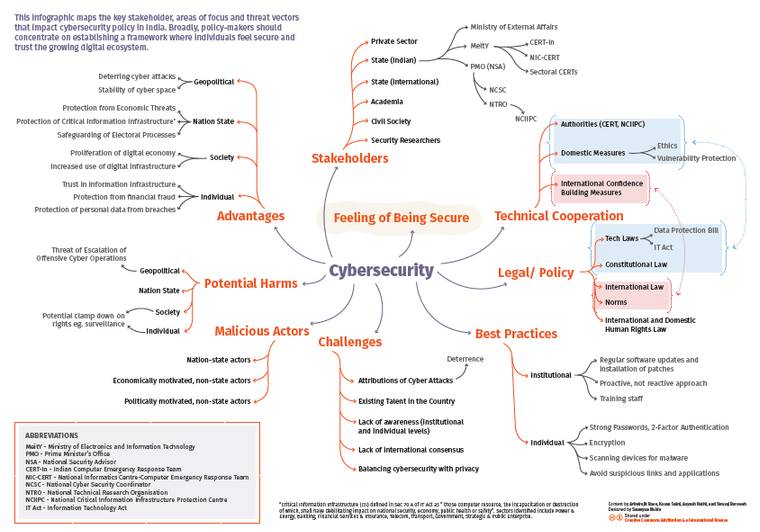

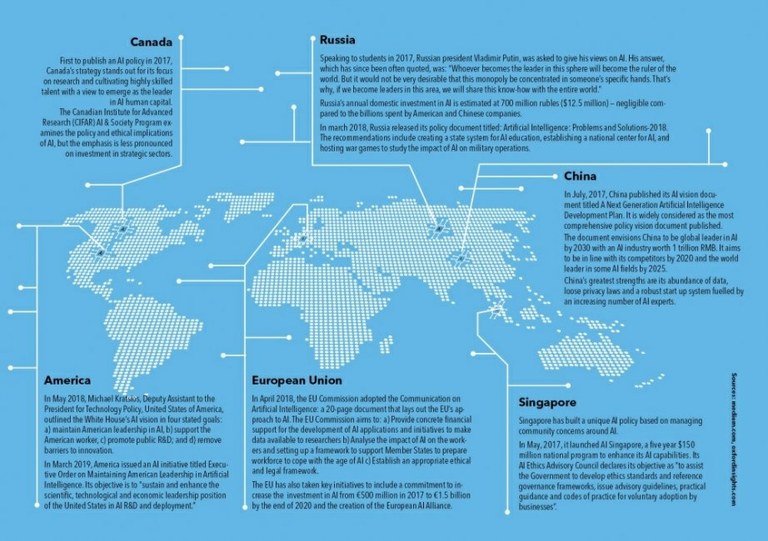

Mapping cybersecurity in India: An infographic

Infographic designed by Saumyaa Naidu

Private-public partnership for cyber security

For security: the private sector has a long history of fostering global pacts (Image: iStockphoto/Getty Images)

This article by Arindrajit Basu was published in The Hindu BusinessLine on December 24, 2018.

On November 11, 2018, as 70 world leaders gathered in Paris to commemorate the countless lives lost in World War I, French President Emmanuel Macron inaugurated the Paris Peace Forum with a fiery speech denouncing nationalism and urging global leaders to pursue peace and stability through multilateral initiatives.

In many ways, it echoed US President Woodrow Wilson’s monumental address to a joint session of the US Congress a century ago, in which he outlined 14 points on the principles for peace after World War I. As history unkindly reminds us through the catastrophic realities of World War II, Wilson’s principles went on to be sacrificed at the altar of national self-interest and inadequate multilateral enforcement.

President Macron’s first initiative for global peace, the Paris Call for Trust and Security in Cyberspace, was unveiled on November 12 at the Internet Governance Forum, hosted by UNESCO and also taking place in Paris. The Call was endorsed by over 50 states; 200 private sector entities, including Indian business associations such as FICCI and the Mobile Association of India; and over 100 civil society and academic organisations from across the globe. The text essentially comprises a set of high-level principles that seek to prevent the weaponisation of cyberspace and to promote existing institutional mechanisms to “limit hacking and destabilising activities” in cyberspace.

Need for private participation

Given the increasing exploitation of the internet by state and non-state actors alike for offensive gains, and the prevailing roadblocks in the multilateral process of formulating cyber norms, Macron’s efforts are perhaps of Wilsonian proportions.

A key difference, however, was that Macron’s efforts were devised hand-in-glove with Microsoft — one of the most powerful and influential private sector actors of our time. Microsoft’s involvement is unsurprising given that private entities have become a critical component of the global cybersecurity landscape and governments need to start thinking about how to optimise their participation in this process.

Indeed, one of the defining features of cyberspace is its incompatibility with the state-centric ‘command and control’ formulae that govern other global security regimes, such as nuclear non-proliferation. The decentralised nature of cyberspace means that private sector actors play a vital role in implementing the rules designed to secure it.

Simultaneously, private actors such as Microsoft have recognised the utility of clearly defined ‘rules of the road’ that provide certainty and stability in cyberspace and preserve its trustworthiness among global customers.

Normative deadlock

There have been multiple gambits to develop universal norms of responsible state behaviour to foster cyber stability. The United Nations Group of Governmental Experts (UN-GGE) has been constituted five times so far and will meet again in January 2019.

While the third and fourth GGEs, in 2013 and 2015 respectively, made some progress towards agreeing on baseline principles, the fifth GGE broke down due to opposition from states including Russia, China and Cuba to the application of specific principles of international law to cyberspace.

This was an extension of a long-running, ‘Cold War’-like divide among states at the United Nations. The US and its NATO allies favour voluntary, non-binding norms for cybersecurity grounded in the application of international law in its entirety, while Russia, China and their allies in the Shanghai Co-operation Organisation (SCO) reject the premise that international law applies in its entirety and call for the negotiation of an independent treaty for cyberspace that lays down binding obligations on states.

Critical role

The private sector has begun to play a critical role in breaking this deadlock. Recent history testifies to the catalytic role that non-state actors have played in cementing global co-operative regimes.

For example, DuPont, the world’s leading chlorofluorocarbon (CFC) producer, played a leading role in the 1970s and 1980s in the development of the Montreal Protocol on Substances that Deplete the Ozone Layer, and gained positive recognition for its efforts.

Another example is the International Committee of the Red Cross (ICRC), a non-governmental organisation that played a crucial role in the development of the Geneva Conventions and their Additional Protocols, which regulate conduct in warfare, by preparing initial drafts of the treaties and circulating them to key government players.

Similarly, in cyberspace, Microsoft’s Digital Geneva Convention, which set out rules to protect civilian use of the internet, was put forward by its Chief Legal Officer, Brad Smith, two months before the fifth GGE met in 2017.

Despite the breakdown at the UN-GGE, Microsoft pushed on with the Cybersecurity Tech Accord, a public commitment made by (as of this writing) 69 companies “agreeing to defend all customers everywhere from malicious attacks by cyber-criminal enterprises and nation-states.”

Much like the ICRC, Microsoft led commendable diplomatic efforts around the Paris Call, reaching out to states, civil society actors and corporations for their endorsement.

Looking forward

Private sector-led normative efforts towards securing cyberspace will achieve little unless three key recommendations are acted upon. First, organisations must implement best practices, including robust cyber defence mechanisms, the detection and mitigation of vulnerabilities, and breach notifications to both consumers and the government.

Second, mechanisms must be developed that enable direct co-operation between governments and private actors at the domestic level. In India, a Joint Working Group between the Data Security Council of India (DSCI) and the National Security Council Secretariat (NSCS) was set up in 2012 to explore a Private Public Partnership on cybersecurity in India; it has great potential but is yet to report any tangible outcomes.

Third and finally, private actors must recognise that their efforts need to bring a plurality of states to the negotiating table. The absence of the US, China and Russia from the Paris Call is eerily reminiscent of the lack of US participation in Woodrow Wilson’s League of Nations, which was one of the reasons for its ultimate failure.

Microsoft needs to keep on calling with Paris but Beijing, Washington and Alibaba need to pick up.

Is the new ‘interception’ order old wine in a new bottle?

An opinion piece co-authored by Elonnai Hickok, Vipul Kharbanda, Shweta Mohandas and Pranav M. Bidare was published in Newslaundry.com on December 27, 2018.

On December 20, 2018, through an order issued by the Ministry of Home Affairs (MHA), 10 security agencies—including the Intelligence Bureau, the Central Bureau of Investigation, the Enforcement Directorate and the National Investigation Agency—were listed as the intelligence agencies in India with the power to intercept, monitor and decrypt "any information" generated, transmitted, received, or stored in any computer under Rule 4 of the Information Technology (Procedure and Safeguards for Interception, Monitoring and Decryption of Information) Rules, 2009, framed under section 69(1) of the IT Act.

On December 21, the Press Information Bureau published a press release clarifying the previous day’s order. It said the notification merely reaffirmed the existing powers delegated to the 10 agencies and that no new powers were conferred on them. The release also stated that there are “adequate safeguards” in the IT Act and the Telegraph Act to regulate these agencies’ powers.

Presumably, these safeguards refer to the Review Committee constituted to review interception orders, and to the prior approval required from the Competent Authority: the secretary in the Ministry of Home Affairs in the case of the Central government, and the secretary in charge of the Home Department in the case of a State government.

As noted in the press release, the government has always had the power to authorise intelligence agencies to submit requests to carry out the interception, decryption, and monitoring of communications, under Rule 4 of the Information Technology (Procedure and Safeguards for Interception, Monitoring and Decryption of Information) Rules, 2009, framed under section 69(1) of the IT Act.

When considering the implications of this notification, it is important to look at it in the larger framework of India’s surveillance regime, which is made up of a set of provisions found across multiple laws and operating licenses with differing standards and surveillance capabilities.

- Section 5(2) of the Indian Telegraph Act, 1885 allows the government (or an empowered authority) to intercept or detain transmitted information on the grounds of a public emergency, or in the interest of public safety if satisfied that it is necessary or expedient so to do in the interests of the sovereignty and integrity of India, the security of the State, friendly relations with foreign states or public order or for preventing incitement to the commission of an offence. This is supplemented by Rule 419A of the Indian Telegraph Rules, 1951, which gives further directions for the interception of these messages.

- Condition 42 of the Unified Licence for Access Services mandates that every telecom service provider must facilitate the application of the Indian Telegraph Act. Condition 42.2 specifically mandates that licence holders must comply with Section 5 of the same Act.

- Section 69(1) of the Information Technology Act and associated Rules allows for the interception, monitoring, and decryption of information stored or transmitted through any computer resource if it is found to be necessary or expedient to do so in the interest of the sovereignty or integrity of India, defence of India, security of the State, friendly relations with foreign States or public order, or for preventing incitement to the commission of any cognizable offence relating to the above, or for the investigation of any offence.

- Section 69B of the Information Technology Act and associated Rules empowers the Centre to authorise any agency of the government to monitor and collect traffic data “to enhance cyber security, and for identification, analysis, and prevention of intrusion, or spread of computer contaminant in the country”.

- Section 92 of the Code of Criminal Procedure (CrPC) allows a Magistrate or Court to order access to call detail records.

Notably, a key difference between the IT Act and the Telegraph Act in the context of interception is that the Telegraph Act permits interception for preventing incitement to the commission of an offence only on the occurrence of a public emergency or in the interest of public safety, while the IT Act permits interception, monitoring and decryption for preventing incitement to the commission of any cognizable offence relating to the listed grounds, or for the investigation of any offence. Technically, this difference in surveillance capabilities and grounds for interception could mean that different agencies are authorised to exercise the respective surveillance powers under each statute. Although the Telegraph Act and the associated Rule 419A do not contain an equivalent to Rule 4, nine Central Government agencies and one State Government agency have previously been authorised under the Act. The Central Government agencies authorised under the Telegraph Act are the same as the ones named in the December 20 notification, with the following differences:

- Under the Telegraph Act, the Research and Analysis Wing (RAW) has the authority to intercept. However, the 2018 notification more specifically empowers the Cabinet Secretariat (RAW) to issue requests for interception under the IT Act.

- Under the Telegraph Act, the Director General of Police of the concerned state (or the Commissioner of Police, Delhi, for the Delhi Metro City Service Area) has the authority to intercept. However, the 2018 notification specifically authorises the Commissioner of Police, New Delhi, to issue requests for interception.

That said, the IT (Procedure and Safeguard for Monitoring and Collecting Traffic Data or Information) Rules, 2009, framed under Section 69B of the IT Act, contain a provision similar to Rule 4 of the IT (Procedure and Safeguards for Interception, Monitoring and Decryption of Information) Rules, 2009, allowing the government to authorise agencies to monitor and collect traffic data. In 2016, the Central Government authorised the Indian Computer Emergency Response Team to monitor and collect traffic data or information generated, transmitted, received or stored in any computer resource, in exercise of the power conferred on it by Section 69B(1) of the IT Act. However, the 2016 notification does not cite the Rule 4-equivalent provision of the traffic data Rules, so it is unclear whether a similar notification has been issued under that provision.

While it is accurate that the order does not confer new powers, longstanding concerns with India’s surveillance regime remain. These include whether Sections 69(1) and 69B and their associated Rules are constitutionally valid; the lack of transparency by the government and the prohibition on transparency by service providers; heavy-handed penalties on service providers for non-compliance; and the lack of legal backing and oversight mechanisms for intelligence agencies. Some of these could be addressed if the draft Personal Data Protection Bill, 2018 is enacted and the Puttaswamy judgment is fully implemented.

Conclusion

The MHA’s order and the press release that followed have publicised, and provided needed clarity on, which intelligence agencies in India are vested with powers under Section 69(1) of the IT Act. This was previously unclear and could have posed a challenge to ensuring oversight and accountability for actions taken by intelligence agencies issuing requests under Section 69(1).

The publication of the list has since raised questions and sparked debate about key issues concerning privacy, surveillance and state overreach. On December 24, advocate ML Sharma challenged the order as illegal, unconstitutional and contrary to public interest. Sharma also contended that the order must be tested against the right to privacy established by the Supreme Court in Puttaswamy, which laid out the test of legality, necessity and proportionality. Under this test, any law that encroaches upon the privacy of the individual must be justified in the context of the right to life under Article 21.

But other questions remain. India has multiple laws enabling its surveillance regime, and though this notification clarifies which intelligence agencies can intercept under the IT Act, it remains unclear which agencies can monitor and collect traffic data under the 69B Rules. It is also unclear what this order means for past interceptions carried out under Section 69(1) and its associated Rules, whether by agencies on this list or by agencies outside it. Will these past interceptions possess the same evidentiary value as interceptions made by the agencies authorised in the order?

Economics of Cybersecurity: Literature Review Compendium

Authored by Natallia Khaniejo and edited by Amber Sinha

Politics and economics are increasingly being amalgamated with cybernetic frameworks, and critical infrastructure has consequently become intrinsically dependent on Information and Communication Technologies (ICTs). The rapid evolution of technological platforms has been accompanied by a concomitant rise in their vulnerabilities. Recurrent issues include network externalities, misaligned incentives and information asymmetries. Malicious actors exploit these vulnerabilities to breach secure systems, access and sell data, and destabilise cyber and network infrastructures. Given the relative nascence of the field, establishing regulatory policies without limiting innovation is a further challenge. The lack of a shared understanding of the definition and scope of cybersecurity is also a barrier to implementing clear guidelines. Furthermore, there is a constant tussle between what is convenient and what is ‘sanitary’ in terms of best practices for cyber infrastructures, with recommendations often neglected in favour of efficiency. To demystify the security space and identify methods of effective policy implementation, it is essential to take stock of current initiatives for the development and implementation of cybersecurity best practices, and to examine their adequacy in a rapidly evolving technological environment. This literature review documents the various approaches adopted by different stakeholders towards incentivising cybersecurity, and the economic challenges of implementing them.


Registering for Aadhaar in 2019

The article was published in Business Standard on January 2, 2019.

Last November, a global committee of lawmakers from nine countries (the UK, Canada, Ireland, Brazil, Argentina, Singapore, Belgium, France and Latvia) summoned Mark Zuckerberg to what they called an “international grand committee” in London. Mr. Zuckerberg was too spooked to show up, but Ashkan Soltani, former CTO of the FTC, was among those who testified against Facebook. He said that “in the US, a lot of the reticence to pass strong policy has been about killing the golden goose”, referring to the innovative technology sector. Mr. Soltani went on to argue that “smart legislation will incentivise innovation”. Governments can do this either unintentionally or intentionally. For example, a poorly thought-through blocking of pornography can unintentionally spur innovative censorship circumvention technologies. On other occasions, innovation is incentivised intentionally. I hope to use my inaugural column in these pages to provide an Indian example of such intentional regulatory innovation.

Eight years ago, almost to the day, my colleague Elonnai Hickok wrote an open letter to the Parliamentary Finance Committee on what was then called the UID, or Unique Identity. She compared Aadhaar to the digital identity project started by the National Democratic Alliance (NDA) government in 2001. Like the Vajpayee administration, which was working in response to the Kargil War, she advocated a decentralised authentication architecture using smart cards based on public key cryptography. Last year, even before the five-judge Constitution Bench struck down Section 57 of the Aadhaar Act, the UIDAI preemptively responded to this regulatory development by launching offline Aadhaar cards. This was to be expected, since across the A.P. Shah Committee report, the Puttaswamy judgment, and the B.N. Srikrishna Committee consultation paper, report and bill, “privacy by design” was emerging as a key Indian regulatory principle in the domain of data protection.

First, the offline Aadhaar mechanism eliminates the need for biometrics during authentication. I have previously provided 11 reasons why biometrics is inappropriate technology for e-governance applications by democratic governments, so this comes as a massive relief for both human rights activists and security researchers. Second, it decentralises authentication, meaning that there is no longer a central database that holds a 360-degree view of all incidents of identification and authentication. Third, it dramatically reduces the attack surface for Aadhaar numbers, since only the last four digits remain unmasked on the card. Each data controller using Aadhaar will have to generate its own series of unique identifiers to distinguish between residents. If those databases leak or are breached, it won’t tarnish the credibility of Aadhaar or the UIDAI to the same degree. Fourth, it increases the probability of attribution in case of a data breach: if the breached or leaked data contains identifiers issued by a particular data controller, it becomes easier to hold that controller accountable and liable for the associated harms. Fifth, unlike the previous iteration of the Aadhaar “card”, whose QR code was easy to forge and alter, this mechanism provides for integrity and tamper detection, because the demographic information contained within the QR code is digitally signed by the UIDAI. Finally, it retains the earlier benefit of being very cheap to issue, unlike smart cards.
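To make the third and fourth points concrete, here is a minimal Python sketch, assuming a hypothetical scheme in which each data controller issues its own random identifiers carrying a controller prefix, and sees the Aadhaar number only in masked form. The names `mask_number`, `ControllerIdentifiers` and `issuing_controller`, the "XXXX XXXX" display format and the prefix convention are all illustrative assumptions, not a UIDAI specification.

```python
import secrets


def mask_number(number: str) -> str:
    """Return a 12-digit identity number with all but the last four digits masked."""
    digits = number.replace(" ", "")
    if len(digits) != 12 or not digits.isdigit():
        raise ValueError("expected a 12-digit number")
    return "XXXX XXXX " + digits[-4:]


class ControllerIdentifiers:
    """Hypothetical identifier scheme run by one data controller (not a UIDAI spec)."""

    def __init__(self, controller_code: str) -> None:
        if not controller_code or "-" in controller_code:
            raise ValueError("controller code must be non-empty and contain no '-'")
        self.controller_code = controller_code
        self._residents: dict[str, str] = {}  # identifier -> masked number

    def enrol(self, masked_number: str) -> str:
        """Issue a fresh identifier: random, so it reveals nothing about the
        resident, but prefixed so it can be traced back to this controller."""
        while True:
            identifier = f"{self.controller_code}-{secrets.token_hex(8)}"
            if identifier not in self._residents:
                break
        self._residents[identifier] = masked_number
        return identifier


def issuing_controller(identifier: str) -> str:
    """Attribute a leaked identifier to the controller whose prefix it carries."""
    return identifier.split("-", 1)[0]


bank = ControllerIdentifiers("BANKA")
customer_id = bank.enrol(mask_number("1234 5678 9012"))
print(mask_number("1234 5678 9012"))   # XXXX XXXX 9012
print(issuing_controller(customer_id))  # BANKA
```

Because the identifier is random rather than derived from the Aadhaar number, a leaked controller database exposes no Aadhaar numbers, while the prefix still points to the controller responsible.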

Thanks to the UIDAI, the private sector is also being forced to implement privacy by design. Previously, since everyone was responsible for protecting Aadhaar numbers, nobody was. Data controllers would gladly share the Aadhaar number with their contractors, that is, data processors, since nobody could be held responsible. Now, since their own unique identifiers could be used to trace liability back to them, data controllers will start using tokenisation when they outsource any work that involves processing of the collected data. Skin in the game immediately breeds more responsible behaviour in the ecosystem.
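Tokenisation of this kind can be sketched as follows, assuming a hypothetical token vault that only the data controller holds: before records go to a processor, the controller swaps each identifier for a random token, and only the controller can map tokens back. The class and function names, the `tok_` prefix and the record fields are illustrative assumptions rather than any prescribed standard.

```python
import secrets


class TokenVault:
    """Hypothetical vault kept by the data controller; processors never see it."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenise(self, value: str) -> str:
        """Return the token for value, issuing a new random one on first use."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_urlsafe(12)
        while token in self._token_to_value:  # guard against (unlikely) collisions
            token = "tok_" + secrets.token_urlsafe(12)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenise(self, token: str) -> str:
        """Map a token back to the original value (controller-side only)."""
        return self._token_to_value[token]


def prepare_for_processor(records, vault, field="customer_id"):
    """Copy records, replacing the identifying field with its token."""
    return [{**record, field: vault.tokenise(record[field])} for record in records]


vault = TokenVault()
records = [{"customer_id": "BANKA-3f9c2e1a7b6d4c08", "loan_amount": 50000}]
outsourced = prepare_for_processor(records, vault)
print(outsourced[0]["customer_id"].startswith("tok_"))  # True
```

The processor can still link records belonging to the same resident (the same value always maps to the same token), but a breach on the processor’s side reveals no identifiers that are useful outside the controller’s vault.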

The fintech sector has rightly been complaining about regulatory and technological uncertainty arising from last year’s developments. This should be addressed by developing open standards and free software to allow for rapid yet secure implementation of these changes. The QR code standard itself should be an open standard developed by the UIDAI using some of the best practices common to international standard-setting organisations like the World Wide Web Consortium, the Internet Engineering Task Force and the Institute of Electrical and Electronics Engineers. While the UIDAI might still choose to take the final decision on various technological choices, it should allow stakeholders to contribute through comments, mailing lists, wikis and face-to-face meetings. Once a standard has been approved, the UIDAI must develop a reference implementation under a liberal licence, like the BSD licence, which allows both free software and proprietary derivative works: for example, software that can read the QR code as well as send and receive the OTP to authenticate the resident. This would ensure that smaller fintech companies with limited resources can develop secure systems.

Since Justice Dhananjaya Y. Chandrachud’s excellent dissent had no other takers on the bench, holdouts like me must finally register for an Aadhaar number since we cannot delay filing taxes any further. While I would still have preferred a physical digital artefact like a smart card (built on an open standard), I must say it is a lot less scary registering for Aadhaar in 2019 than it was in 2010, given how the authentication modalities have since evolved.

Response to TRAI Consultation Paper on Regulatory Framework for Over-The-Top (OTT) Communication Services


This submission presents a response by Gurshabad Grover, Nikhil Srinath and Aayush Rathi (with inputs from Anubha Sinha and Sai Shakti) to the Telecom Regulatory Authority of India’s (TRAI) “Consultation Paper on Regulatory Framework for Over-The-Top (OTT) Communication Services” (hereinafter “TRAI Consultation Paper”), released for comments on November 12, 2018. CIS appreciates TRAI’s continual efforts to hold consultations on the regulatory framework that should apply to OTT services and Telecom Service Providers (TSPs). CIS is grateful for the opportunity to put forth its views and comments.

Addendum: Please note that this document differs in certain sections from the submission emailed to TRAI: this document was updated on January 9, 2019 with design and editorial changes to enhance readability. The responses to Q5 and Q9 have been updated. This updated document was also sent to TRAI.

How to make EVMs hack-proof, and elections more trustworthy

The article was published in Times of India on December 9, 2018.

Electronic voting machines (EVMs) have been used in general elections in India since 1999, having first been introduced in 1982 for a by-election in Kerala. The EVMs we use are indigenous, designed jointly by two public-sector organisations: the Electronics Corporation of India Ltd. and Bharat Electronics Ltd. In 1999, the Karnataka High Court upheld their use, as did the Madras High Court in 2001.

Since then a number of other challenges have been levelled at EVMs, but the only one that was successful was the petition filed by Subramanian Swamy before the Supreme Court in 2013. But before we get to Swamy's case and its importance, we should understand what EVMs are and how they are used.

The EVMs used in India are standardised and extremely simple machines. From a security standpoint, this makes them far better than the myriad different, and in some cases notoriously insecure, machines used in elections in the USA. Are they 'hack-proof' and 'infallible', as the Election Commission of India (ECI) has claimed? Not at all.

Similarly simple voting machines in the Netherlands and Germany were found to have vulnerabilities, leading both those countries to go back to paper ballots.

Because the ECI doesn't provide security researchers free and unfettered access to the EVMs, there had been no independent scrutiny until 2010. That year, an anonymous source provided a Hyderabad-based technologist with an original EVM. That technologist, Hari Prasad, and his team worked with some of the world's foremost voting security experts from the Netherlands and the US, demonstrated several live hacks of the EVM itself and several theoretical hacks of the election process, and recommended going back to paper ballots. Further, EVMs have often malfunctioned, as news reports tell us. Instead of working on fixing these flaws, the ECI arrested Prasad (for being in possession of a stolen EVM) and denied University of Michigan Professor J. Alex Halderman entry into India when he flew to Delhi to publicly discuss their research. Even in 2017, when the ECI challenged political parties to 'hack' EVMs, it did not provide unfettered access to the machines.

While paper ballots may work well in countries like Germany, they hadn't in India, where in some parts ballot-stuffing and booth-capturing were rampant. The solution as recognised by international experts, and as the ECI eventually realised, was to have the best of both worlds and to add a printer to the EVMs.

These printers would produce a small slip of paper showing the serial number and name of the candidate and the symbol of the political party, so that a sighted voter could verify that her vote had been cast correctly. The slip would then be deposited in a sealed box, providing a paper trail that could be used to audit the correctness of the EVM. This is called VVPAT: voter-verifiable paper audit trail. Swamy, in his PIL, asked for VVPAT to be introduced. The Supreme Court noted that the ECI had already conducted trials with VVPAT, and made them mandatory.

However, VVPATs are of no use unless they are actually counted to ensure that the EVM tally and the paper tally match. The most advanced and efficient way of doing this, proposed by Lindeman & Stark, is a methodology called risk-limiting audits (RLAs), in which auditors keep examining randomly sampled paper records until either there is strong statistical evidence that the reported outcome is correct, making further auditing pointless, or a full hand count has been done. The ECI could ask the Indian Statistical Institute for recommendations on implementing RLAs. It must also be remembered that current VVPAT technology is inaccessible to persons with visual impairments.
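As an illustration of the stopping rule, the sketch below implements a two-candidate ballot-polling audit in the style of BRAVO, one specific RLA method described by Lindeman, Stark and Yates. It is a minimal sketch under stated assumptions: the function name, the "W"/"L" slip labels and the simplified escalation budget (stop after as many draws as there are slips, then hand count) are illustrative, not ECI procedure. Each sampled VVPAT slip for the reported winner raises the test statistic and each slip for the loser lowers it; once the statistic reaches 1/risk limit, the EVM-reported outcome is confirmed.

```python
import random


def bravo_audit(paper_slips, reported_winner_share, risk_limit=0.05,
                max_draws=None, seed=None):
    """Two-candidate ballot-polling audit in the style of BRAVO.

    paper_slips: the VVPAT slips, each "W" (reported winner), "L" (reported
    loser) or anything else (ignored). reported_winner_share: the winner's
    share of the two-candidate vote according to the EVM tally (> 0.5).
    """
    if not 0.5 < reported_winner_share < 1:
        raise ValueError("reported winner share must be between 0.5 and 1")
    rng = random.Random(seed)
    threshold = 1 / risk_limit
    # Simplification: escalate after a fixed budget of draws.
    budget = len(paper_slips) if max_draws is None else max_draws
    test_statistic = 1.0
    for draws in range(1, budget + 1):
        slip = rng.choice(paper_slips)  # sample with replacement
        if slip == "W":
            test_statistic *= reported_winner_share / 0.5
        elif slip == "L":
            test_statistic *= (1 - reported_winner_share) / 0.5
        if test_statistic >= threshold:
            return "outcome confirmed", draws
    return "escalate to full hand count", budget


slips = ["W"] * 700 + ["L"] * 300
print(bravo_audit(slips, reported_winner_share=0.7, seed=1))
```

When the paper slips agree with the EVM tally, the audit typically stops after a few dozen slips; when they contradict it, the statistic never reaches the threshold and the audit escalates to a full count. That is what keeps the audit efficient without sacrificing its guarantee.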

While in some cases, the ECI has conducted audits of the printed paper slips, in 2017 it officially noted that only the High Court can order an audit and that the ECI doesn't have the power to do so under election law. Rule 93 of the Conduct of Election Rules needs to be amended to make audits mandatory.

The ECI should also create separate security procedures for handling VVPATs and EVMs, since there are now reports of EVMs being replaced 'after' voting has ended. Separate handling of EVMs and VVPATs would ensure that two different safe-houses would need to be broken into to change the results of the vote. Implementing these two changes (amending election law to make risk-limiting audits mandatory, and improving physical security practices) would make Indian elections much more trustworthy than they are now, although far more needs to be done to make them inclusive and accessible to all.

The DNA Bill has a sequence of problems that need to be resolved

The opinion piece was published by Newslaundry on January 14, 2019.

On January 9, Science and Technology Minister Harsh Vardhan introduced the DNA Technology (Use and Application) Regulation Bill, 2018, amidst opposition and questions about the Bill’s potential threat to privacy and the lack of security measures. The Bill aims to provide for the regulation of the use and application of DNA technology for certain criminal and civil purposes, such as identifying offenders, suspects, victims, undertrials, missing persons and unknown deceased persons. The Schedule of the Bill also lists civil matters where DNA profiling can be used. These include parentage disputes, issues relating to immigration and emigration, and establishment of individual identity. The Bill does not cover the commercial or private use of DNA samples, such as private companies providing DNA testing services for conducting genetic tests or for verifying paternity.

The Bill has gone through several iterations and revisions since it was first introduced in 2007. Yet even after repeated expert consultations, the Bill in its current form is far from comprehensive legislation. Experts have voiced concerns that the version of the Bill presented after the Puttaswamy judgment still fails to make provisions that fully uphold the privacy and dignity of the individual. The rush to pass the Bill, including by extending the winter session, before the Personal Data Protection Bill is brought before Parliament is also worrying. The Bill was passed in the Lok Sabha with only one amendment, which changed the year of the Bill from 2018 to 2019.

Need for a better-drafted legislation

Although the Schedule of the Bill includes certain civil matters under its purview, some important provisions are silent on the procedure that is to be followed for these civil matters. For example, the Bill necessitates the consent of the individual for DNA profiling in criminal investigation and for identifying missing persons. However, the Bill is silent on the requirement for consent in all civil matters that have been brought under the scope of the Bill.

The omission of civil matters from the provisions of the Bill that are crucial for privacy is just one of the ways the Bill fails to ensure privacy safeguards. The civil matters listed in the Bill are highly sensitive (such as paternity/maternity, use of assisted reproductive technology and organ transplants) and can have a far-reaching impact on many sections of society. For example, the listed civil matters affect women not just in paternity disputes but in a number of other matters, including those under the Domestic Violence Act and the Prenatal Diagnostic Techniques Act. Other matters, such as pedigree, immigration and emigration, can disproportionately affect vulnerable groups and communities, raising concerns of discrimination and abuse.

Privacy and security concerns

Although the Bill provides for written consent for the collection of bodily substances and intimate bodily substances, it allows non-consensual collection for offences punishable by death or imprisonment for a term exceeding seven years. Another issue with collection by consent is the absence of safeguards to ensure that consent is given freely, especially when the individual is in police custody. This issue was also highlighted by MP NK Premachandran, who urged that the Bill be sent to a Parliamentary Standing Committee.

Beyond collection, the Bill fails to ensure the privacy and security of the samples. One example of this failure is Section 35(b), which allows access to the information contained in the DNA Data Banks for the purpose of training. Using such highly sensitive data, which also carries a risk of contamination, for training poses risks to the privacy of the people whose DNA has been collected, both with and without their consent.

An earlier version of the Bill included a provision for the creation of a population statistics databank. Although this has now been removed, there is no guarantee that it will not be reintroduced through regulations. This is a cause for concern, as the Bill also covers certain civil cases, including those relating to immigration and emigration.

Conclusion

In July 2018, the Justice Srikrishna Committee released the draft Personal Data Protection Bill. The Bill was open for public consultation and is now likely to be introduced in Parliament in June. In defining “sensitive personal data”, the PDP Bill provides an exhaustive list of data that can be considered sensitive, including biometric data, genetic data and health data. Under the PDP Bill, sensitive personal data is subject to stricter conditions for collection and processing, including clear, informed and specific consent. Ideally, the DNA Bill should be passed only after ensuring that it is in line with the PDP Bill.

Once it becomes law, the DNA Bill will allow law enforcement authorities to collect sensitive DNA data and store it in databases for forensic purposes without a number of key safeguards for security and the rights of individuals. In 2016 alone, 29,75,711 crimes under various provisions of the Indian Penal Code were reported. One can only guess at the sheer number of DNA profiles and related information that will be collected from both criminal and specified civil cases. The Bill needs to be revised to remove all ambiguity with respect to civil cases, and to ensure that it is in line with the data protection regime in India. A comprehensive privacy law should be enacted before this Bill is passed.

Studies and cases continue to show that DNA testing can be fallible. The Indian government needs to ensure proper sensitisation and training on the collection, storage and use of DNA profiles, and to ensure that key stakeholders, including law enforcement and the judiciary, recognise that DNA tests are not infallible.

India should reconsider its proposed regulation of online content

The article was published in the Hindustan Times on January 24, 2019. The author would like to thank Akriti Bopanna and Aayush Rathi for their feedback.

Flowing from the Information Technology (IT) Act, India’s current intermediary liability regime roughly adheres to the “safe harbour” principle, i.e. intermediaries (online platforms and service providers) are not liable for the content they host or transmit if they act as mere conduits in the network, do not abet illegal activity, and comply with requests from authorised government bodies and the judiciary. This paradigm allows intermediaries that primarily transmit user-generated content to provide their services without constant fear of liability, and it can be partly credited with the proliferation of online content. Last July, the law and IT minister expressed the intention to change the rules while discussing concerns that online platforms were being used “to spread incorrect facts projected as news and designed to instigate people to commit crime”.

On December 24, the government published the draft intermediary liability rules and invited comments on them. The draft rules significantly expand the “due diligence” that intermediaries must observe to qualify as safe harbours: they require intermediaries to enable “tracing” of the originator of information, to take down content in response to government and court orders within 24 hours, and to respond to information requests and assist investigations within 72 hours. Most problematically, the draft rules go much further than the stated intentions: draft Rule 3(9) requires intermediaries to deploy automated tools for “proactively identifying and removing [...] unlawful information or content”.

The first glaring problem is that “unlawful information or content” is not defined. A conservative reading of the draft rules would presume that the phrase refers to the restrictions on free speech permissible under Article 19(2) of the Constitution, including those relating to national integrity, “defamation” and “incitement to an offence”.

Ambiguity aside, is requiring intermediaries to monitor for “unlawful content” a valid element of “due diligence”? If an intermediary must monitor for all unlawful content to qualify as a safe harbour, is it substantively different from an intermediary that exercises active control over its content and is therefore not a safe harbour? Clearly, the requirement to monitor for all “unlawful content” is so onerous that it runs contrary to the philosophy of safe harbours envisioned by the law.

By mandating automated detection and removal of unlawful content, the proposed rules shift the burden of appraising the legality of content from the state to private entities. The rule may run afoul of the Supreme Court’s reasoning in Shreya Singhal v Union of India, in which the Court read down a similar provision because, among other reasons, it required an intermediary to “apply [...] its own mind to whether information should or should not be blocked”. Since then, “actual knowledge” of illegal content has been held to accrue to the intermediary only when it receives a court or government order.

Given these inconsistencies with legal precedent, the rules may not withstand judicial scrutiny if notified in their current form.

The proposal also betrays a lack of technical consideration, since implementing it is infeasible for certain intermediaries. End-to-end encrypted messaging services cannot “identify” unlawful content because they cannot decrypt it. Internet service providers also qualify as safe harbours: how will they identify unlawful content when it passes through their network encrypted? Presumably, the government does not intend to disallow end-to-end encryption so that intermediaries can monitor content.

Intermediaries that can implement the rules, such as social media platforms, will leave the task to algorithms that perform poorly even at narrow tasks. Just recently, Tumblr flagged its own examples of permitted nudity as pornography, and YouTube slapped a video of randomly generated white noise with five copyright-infringement notices. Identifying more contextual expression, such as defamation or incitement to offences, is a far more complex problem. In the absence of accurate judgement, platforms will be happy to avoid liability by taking content down without verifying whether it violates the law. Rule 3(9) also makes no distinction between large and small intermediaries, and does not require an appeal mechanism for users whose content is taken down. The proposed rules thus set up an incentive structure that is entirely deleterious to the exercise of the right to freedom of expression. Given the wide amplitude and ambiguity of India’s restrictions on free speech, online platforms will end up removing swathes of content to avoid liability if the draft rules are notified.

The use of draconian laws to quell dissent is a recurring theme in the history of the Indian state. The draft rules reflect India’s proclivity for joining the ignominious company of authoritarian nations when it comes to disregarding protections for freedom of expression. To add insult to injury, the draft rules are abstruse, ignore legal precedent, and betray a poor understanding of technology. The government should reconsider the proposed regulation and the stance that inspired it, both of which are unsuited to a democratic republic.

Response to GCSC on Request for Consultation: Norm Package Singapore

The Global Commission on the Stability of Cyberspace (GCSC) is a multi-stakeholder initiative, composed of eminent individuals from across the globe, that seeks to promote awareness and understanding among the various cyberspace communities working on issues related to international cyber security. CIS is honoured to have contributed research to this initiative previously and commends the GCSC for its work so far.

On Thursday, November 8, 2018, the GCSC announced the release of its new Norm Package, featuring six norms that seek to promote the stability of cyberspace. The GCSC hopes that these norms will be adopted by public and private actors to improve the international security architecture of cyberspace.

The norms introduced by the GCSC focus on the following areas:

- Norm to Avoid Tampering

- Norm Against Commandeering of ICT Devices into Botnets

- Norm for States to Create a Vulnerability Equities Process

- Norm to Reduce and Mitigate Significant Vulnerabilities

- Norm on Basic Cyber Hygiene as Foundational Defense

- Norm Against Offensive Cyber Operations by Non-State Actors

The GCSC opened a public comment procedure to solicit comments and obtain additional feedback. CIS responded to the public call, offering comments on all six norms and proposing two further norms. We sincerely hope that the Commission finds the feedback useful in its upcoming deliberations.

A Gendered Future of Work

Abstract

Studies on the future of work have predicted technological disruption across industries, leading to a shift in the nature and organisation of work, as well as the substitution of certain kinds of jobs and the growth of others. This paper seeks to contextualise this disruption for women workers in India. The paper argues that two aspects of the structuring of the labour market will be pertinent in shaping the future of work: the gendered nature of skilling and skill classification, and occupational segregation along the lines of gender and caste. We take the case study of the electronics manufacturing sector to flesh out these arguments further. Finally, we discuss the platform economy, a key area of discussion on the future of work. We characterise it as both generating employment opportunities, particularly for women, owing to the flexible nature of the work, and entrenching traditional inequalities built into non-standard employment.

Introduction

The question of the future of work across the global North, and parts of the global South, has recently been raised with regard to technological disruption resulting from digitisation and, more recently, automation (Leurent et al., 2018). While the former has progressively been replacing routine cognitive tasks, the latter, defined as the deployment of cyber-physical systems, will enable the replacement of manual tasks previously performed using human labour (Leurent et al., 2018). In combination, these are expected to have a twofold effect: on the “structure of employment”, which includes occupational roles and the nature of tasks, and on “forms of work”, including interpersonal relationships and the organisation of work (Piasna and Drahokoupil, 2017). Building on historical evidence, the diffusion of digitising or automating technologies can be expected to take place differently across economic contexts, with different factors driving varied kinds of technological upgradation across the global North and South. Moreover, occupational analysis projects that occupations in the latter are at a significantly higher risk of disruption than those in the former (WTO, 2017).

However, these concerns are somewhat offset by the barriers to technological adoption that exist in lower-income countries, such as lower wages and a relatively higher share of non-routine manual jobs (WTO, 2017). Because the global North has typically adopted automation technologies earlier and more quickly, differences in technology levels across countries have in fact been used to understand global inequality (Foster and Rosenzweig, 2010). Consequently, the labour-cost advantage that economies in the global South enjoy may be eroded, leading to what may be understood as reshoring or backshoring, a reversal of offshoring (ILO, 2017). This may especially be the case in sectors that have failed to capitalise on the labour-cost advantage, either by evolving supplier networks to complement assembly activities (as in manufacturing) (Milington, 2017) or by producing high-value services (as in the services sector).

Extensive work over the past three decades has examined the effects of liberalisation and globalisation on employment for women in the global South. This work has explored both conditional empowerment and exploitation as women are increasingly employed in factories and offices, with patriarchal relations being reproduced and challenged in different ways. However, the effects of reshoring and technological disruption have yet to be explored in any granular detail for this population, which will arguably be among the first to face them. This is because industries that rely on low-cost labour, such as textiles and apparel and electronics manufacturing, are likely to be the first affected by reshoring (Kucera and Tejani, 2014).

Download the full paper here.

CIS Submission to UN High Level Panel on Digital Cooperation

The High-level Panel on Digital Cooperation was convened by the UN Secretary-General to advance proposals to strengthen cooperation in the digital space among governments, the private sector, civil society, international organizations, academia, the technical community and other relevant stakeholders. The Panel issued a call for inputs that posed various questions. CIS responded to the call for inputs.

The response can be accessed here.

Response to the Draft of The Information Technology [Intermediary Guidelines (Amendment) Rules] 2018

This document presents the Centre for Internet & Society (CIS) response to the Ministry of Electronics and Information Technology’s invitation to comment on and suggest changes to the draft of The Information Technology [Intermediary Guidelines (Amendment) Rules] 2018 (hereinafter referred to as the “draft rules”) published on December 24, 2018. CIS is grateful for the opportunity to put forth its views and comments. This response was sent on January 31, 2019.

In this response, we aim to examine whether the draft rules meet tests of constitutionality and whether they are consistent with the parent Act. We also examine potential harms that may arise from the Rules as they are currently framed and make recommendations to the draft rules that we hope will help the Government meet its objectives while remaining situated within the constitutional ambit.

The response can be accessed here.

The Future of Work in the Automotive Sector in India

Introduction

The adoption of information and communication technologies (ICTs) for industrial use is not a new phenomenon. However, the advent of Industry 4.0 has been described as a paradigm shift in production, involving widespread automation and irreversible shifts in the structure of jobs. Industry 4.0 is widely understood as the technical integration of cyber-physical systems into production and logistics, and the use of the Internet of Things (IoT) in processes and systems. This may pose major challenges for industries, workers and policymakers as they grapple with shifts in the structure of employment and the content of jobs, and with significant changes in business models, downstream services and the organisation of work.


Industry 4.0 is characterised by four elements. First, the use of intelligent machines could have a significant impact on production through the introduction of automated processes in ‘smart factories.’ Second, real-time production would optimise capacity utilisation, with shorter lead times and fewer standstills. Third, the self-organisation of machines can lead to the decentralisation of production. Finally, Industry 4.0 is commonly characterised by the individualisation of production in response to customer requests. The advancement of digital technology and the consequent increase in automation have raised concerns about unemployment and changes in the structure of work. Globally, automation in manufacturing and services has been posited as replacing jobs with routine task content, while generating jobs with non-routine cognitive and manual tasks.

Some scholars have argued that unemployment will increase globally as technology eliminates tens of millions of jobs in the manufacturing sector. This could, in turn, lower wages and employment opportunities for low-skilled workers, and increase employers’ investment in capital-intensive technologies.

However, this theory of technology-driven job loss and rising inequality has been contested on numerous occasions, with the assertion that technology will be an enabler, will change task content rather than displace workers, and will also create new jobs. It has further been argued that other factors, such as increasing globalisation, the weakening of trade unions and platforms for collective bargaining, and the disaggregation of supply chains through outsourcing, have led to declining wages, income inequality, inadequate health and safety conditions, and the displacement of workers.

In India, there is little evidence of unemployment caused by the adoption of Industry 4.0 technologies, but there is a strong consensus that technology affects labour by changing the job mix and skill demand. It should be noted that technological adoption under Industry 4.0 in advanced industrial economies has been driven by favourable cost-benefit analyses, made possible by accessible technology and a highly skilled labour force. In the Indian context, however, the absence of these key factors is a serious impediment, which calls the large-scale adoption of cyber-physical systems into question.

The prevalence of low-cost manual labour across a large majority of roles in manufacturing raises concerns about the cost-benefit case for investing capital in expensive automation technology, while also accounting for the resulting displacement of labour. Further, the skill gap across the labour force implies that the adoption of cyber-physical systems would require significant upskilling or reskilling to meet the potential shortage of highly skilled professionals.

This is an in-depth case study on the future of work in the automotive sector in India. We chose to focus on this sector for two reasons: first, the Indian automotive sector is one of the largest contributors to GDP, at 7.2 percent; and second, it is one of the largest employment generators among non-agricultural industries. The first section details the structure of the automotive industry in India, including the range of stakeholders and the national policy framework, through an analysis of academic literature, government reports and legal documents.

The second section explores different aspects of the future of work in the automotive sector through a combination of in-depth semi-structured interviews and enterprise-based surveys in the North Indian belt of Gurgaon-Manesar-Dharuhera-Bawal. Challenges posed by shifts in the industrial relations framework, with increasing casualisation and the emergence of atypical forms of work, will also be explored, with specific reference to crises in collective bargaining and social security. We will then look at the state of female participation in the workforce in the automotive industry. The report concludes with policy recommendations addressing some of the challenges outlined above.

Read the full report here.

CIS Comment on ICANN's Draft FY20 Operating Plan and Budget

The following are our comments on relevant aspects from the different documents:

There are several significant undertakings that have not found adequate support in this budget, chief among them the implementation of the ICANN Workstream 2 (WS2) recommendations on Accountability. The budget treats any expenses arising from WS2 as coming out of its contingency fund, which is a mere 4%. With more than 100 recommendations across 8 subgroups, implementing WS2 would require significant expenditure. Ideally, this should have been provided for in the FY20 budget, considering that the final report was submitted in June 2018 and conversations about its implementation have been ongoing ever since. One wonders whether this is because the second Workstream’s mandate does not include the implementation of its recommendations, making it easier for ICANN to be slow on it.[1] As members of the community deeply interested in better integrating human rights into ICANN’s various processes, we find it concerning to note the glacial pace of approval of these recommendations, especially coupled with the lack of funds allocated to them. Further, only one person is assigned to WS2 implementation, which seems insufficient for the magnitude of work involved.[2]

A topical issue for ICANN currently is its struggle to implement the General Data Protection Regulation (GDPR), yet despite the prominence and extent of the legal burden involved, no resources have been allocated to compliance. Again, it falls under the contingency budget.

The Cross Community Working Group on New gTLD Auction Proceeds is also currently developing recommendations on how to distribute the proceeds. It is unclear where the funding for these recommendations will come from: the Working Group’s own work is funded by the core ICANN budget, yet it is assumed that the recommendations will be funded from the auction proceeds. Almost 7 years after the new gTLD round opened, it is alarming that ICANN has not formulated a plan for the proceeds and, as recently as the last ICANN meeting in October 2018, was still debating the merits of the entity that would resolve this question.

Another important policy development process currently under way is the Working Group that is reviewing the current new gTLD policies in order to improve the process by proposing changes or new policies. The FY20 budget contains resources only to support the Working Group’s activities, and none to implement the changes that will arise from its work.

Lastly, the budgets lack information on how much each individual RIR contributes.

Staff costs

ICANN’s internal personnel costs have been rising for years and are slated to account for more than half its annual budget, an estimated 56% or $76.3 million, in the next financial year. The community has consistently called on ICANN to revise its staff costs, with many questioning whether the growth in staff is justified.[3] There was criticism from all quarters, including the GNSO Council, which stated that it is “not convinced that the proposed budget funds the policy work it needs to do over the coming year”.[4] The excessive use of professional service consultants has also come under fire.

As pointed out on a mailing list, in comments on the FY19 budget every single constituency and stakeholder group remarked that personnel costs placed too high a burden on the budget. One of the suggestions presented by the NCSG was to relocate positions from the LA headquarters to less expensive countries, such as those in Asia. The concern remains relevant given the sharp increase of $200,000 in operational costs in this budget, for which no clear breakdown was given.

The view seems to be that ICANN repeatedly chooses to retain higher salaries while reducing funding for the community. This is even more of an issue since its employee remuneration scheme is opaque. In a DIDP request I filed enquiring about the average salary across designations, gender and regions, and the frequency of bonuses, the response either referred to earlier documents that contain no concrete information or stated that the relevant documents were not in ICANN’s possession.[5]

ICANN Fellowship

The budget for the Fellowship, an important initiative to involve individuals in ICANN who cannot afford the cost of flying to global ICANN meetings, has been reduced. The focus should be not only on arriving at a suitable funding figure but also on ensuring that people who actively contribute, or are likely to, are supported, as opposed to individuals who are already known in this circle.

Again, our attempts to understand the Fellowship selection process were met with resistance from ICANN. A DIDP request we filed on the subject, asking questions such as whether anyone had received the Fellowship more than the maximum of three times and what the selection criteria were, yielded no clarity.[6]

Lobbying and Sponsorship

At ICANN 63 in Barcelona, I enquired about ICANN’s sponsorship strategies, how decisions are made about which events to sponsor in each region, and whether there was a comprehensive list of all sponsorship that ICANN undertakes and receives. I was told such a document would be published soon, but in the 4 months since then, none can be found. It is difficult to comment on the budget for such a team when there is little information on the work it specifically carries out and the impact of its sponsorship activities. When I asked a member of the team, I was told that it depends on the needs of each region and the events that are significant in those regions. However, without public accountability and transparency about these decisions, sponsorship can be seen as a vague budget heading whose funds could be better spent on community initiatives.

Speaking of transparency, it has also been pointed out that the Information Transparency Initiative has $3 million set aside for its activities in this budget. This sounds positive, yet with no deliverables to show for the past 2 years, it is difficult to ascertain the value of the investment in this initiative.

Lobbying activities find no mention in the budget, and neither does the nature of sponsorship received from other entities, such as whether it covers the travel and accommodation of personnel or takes some other institutional form.

[1] https://cis-india.org/internet-governance/blog/icann-work-stream-2-recommendations-on-accountability

[2] https://www.icann.org/en/system/files/files/proposed-opplan-fy20-17dec18-en.pdf

[3] http://domainincite.com/22680-community-calls-on-icann-to-cut-staff-spending

[4] Ibid

[5] https://cis-india.org/internet-governance/blog/didp-request-30-enquiry-about-the-employee-pay-structure-at-icann

[6] https://cis-india.org/internet-governance/blog/didp-31-on-icanns-fellowship-program

Intermediary liability law needs updating

The article was published in Business Standard on February 9, 2019.

Intermediaries get immunity from liability arising from user-generated and third-party content because they have no “actual knowledge” of it until it is brought to their notice through “take down” requests or orders.

Since some of the harm caused is immediate, irreparable and irreversible, notice and takedown is the preferred alternative to approaching the courts in each case. When intermediary liability regimes were first enacted, most intermediaries acted as common carriers, i.e. they did not curate the experience of users in any substantial fashion. While some intermediaries like Wikipedia continue this common carrier tradition, others driven by advertising revenue no longer treat all parties and all pieces of content neutrally. Facebook, Google and Twitter do everything they can to raise advertising revenues. They make you depressed. And if they like you, they get you to go out and vote. There is an urgent need to update intermediary liability law.

In response to being summoned by multiple governments, Facebook has announced the establishment of an independent oversight board: a global free speech court for the world’s biggest online country. The time has come for India to flex its foreign policy muscle. The amendments to our intermediary liability regime can have global repercussions, and shape the structure and functioning of this and other global courts.

With one hand, Facebook offered the oversight board; with the other, it took down APIs that would have enabled the press and civil society to monitor political advertising in real time. How could it do that with no legal consequences? The answer is simple: those APIs were provided on a voluntary basis. There was no law requiring Facebook to provide them.

There are two approaches that could be followed. One, as regulatory theory scholar Amba Kak puts it, is to “disincentivise the black box”. Most transparency reports produced by intermediaries today are voluntary; there is no legal requirement for them. Our new law could require extensive transparency, with appropriate privacy safeguards, towards the government, affected parties and the general public in terms of revenues, content production and consumption, policy development, contracts, service-level agreements, enforcement, adjudication and appeal. User empowerment measures in the user interface and algorithmic explainability could also be required. The key word in this approach is transparency.

The alternative is to incentivise the black box. Here, faith is placed in technological solutions like artificial intelligence. To be fair, technological solutions may be desirable for battling child pornography, where pre-censorship (deletion before content is published) is required. Fingerprinting technology is used to determine whether the content exists in a global database maintained by organisations like the Internet Watch Foundation. A similar technology, YouTube’s Content ID, is used to pre-censor copyright infringement. Unfortunately, this is done by ignoring the flexibilities that exist in Indian copyright law to promote education, protect access to knowledge for persons with disabilities, etc. Even within such narrow applications of technology, there have been false positives. Recently, a video of a blogger testing his microphone was identified as a pre-existing copyrighted work.

The goal of a policy-maker working on this amendment should be to avoid a repeat of the Shreya Singhal judgment, in which sections of the IT Act were read down or struck down. To avoid similar constitutional challenges in the future, the rules should not specify any new categories of illegal content, because that would be outside the scope of the parent clause. The fifth ground in the list, “violates any law for the time being in force”, is sufficient. Additional grounds, such as “harms minors in any way”, are vague and cannot apply to all categories of intermediaries, for example a dating site for sexual minorities. The rights of children need to be protected, but that is best done within the ongoing amendment to the POCSO Act.

As an engineer, I vote to eliminate redundancy. If there are specific offences that cannot fit in other parts of the law, those offences can be added as separate sections in the IT Act. For example, even though voyeurism is criminalised in the IT Act, the non-consensual distribution of intimate content could be criminalised, as has been done in the Philippines.

Provisions that have to do with data retention and government access to that data for the purposes of national security and law enforcement, as well as anonymised datasets for the public interest, should be in the upcoming Data Protection law. The rules for intermediary liability are not the correct place to deal with them, because data retention may also be required of intermediaries that don’t handle any third-party information or user-generated content. Finally, there have to be clear procedures in place for the reinstatement of content that has been taken down.

Disclosure: The Centre for Internet and Society receives grants from Facebook, Google and the Wikimedia Foundation.

Data Infrastructures and Inequities: Why Does Reproductive Health Surveillance in India Need Our Urgent Attention?

The article was first published by EPW Engage, Vol. 54, Issue No. 6, on 9 February 2019.

Framing Reproductive Health as a Surveillance Question

The approach of the postcolonial Indian state to healthcare has been Malthusian, with the prioritisation of family planning and birth control (Hodges 2004). Supported by the notion of socio-economic development arising out of a “modernisation” paradigm, the target-based approach to achieving reduced fertility rates has shaped India’s reproductive and child health (RCH) programme (Simon-Kumar 2006).

This is also the context in which India’s abortion law, the Medical Termination of Pregnancy (MTP) Act, was framed in 1971, placing the decisional privacy of women seeking abortions in the hands of registered medical practitioners. The framing of the MTP Act renders invisible, within the legal framework, females seeking abortions for non-medical reasons. The exclusionary provisions only exacerbated existing gaps in health provisioning, as access to safe and legal abortions had already been curtailed by severe geographic inequalities in funding, infrastructure, and human resources. The state has concomitantly been unable to meet the contraceptive needs of married couples or reduce maternal and infant mortality rates in large parts of the country, mediating access along the lines of class, social status, education, and age (Sanneving et al 2013).

While the official narrative around the RCH programme shifted towards universal access to healthcare in the 1990s, the target-based approach continues to shape the reality on the ground. The provision of reproductive healthcare has been deeply unequal and, in some cases, has taken place in camps rather than in hospitals. These targets have been known to be met through forced, and often unsafe, sterilisation, in the absence of adequate provisions or trained professionals, pre-sterilisation counselling, or alternative forms of contraception (Sama and PLD 2018). Further, patients have regularly been offered cash incentives, foreclosing the notion of free consent, especially given that the target population of these camps has been women from marginalised economic classes in rural India.

Placing surveillance studies within a feminist praxis allows us to frame the reproductive health landscape as more than just an ill-conceived but benign monitoring structure. The critical lens is useful for highlighting that taken-for-granted structures of monitoring are wedded to power differentials: genetic screening in fertility clinics, identification documents such as birth certificates, and full-body screeners are just some of the manifestations of this (Andrejevic 2015). Emerging conversations around feminist surveillance studies highlight that these data systems are neither benign nor free of gendered implications (Andrejevic 2015). In the continual remaking of the social, corporeal body as a data actor in society, such practices render some bodies normative and obscure others, based on categorisations put in place by the surveiller.

In fact, the history of surveillance can be traced back to the colonial state, where it took the form of systematic sexual and gendered violence enacted upon indigenous populations in order to render them compliant (Rifkin 2011; Morgensen 2011). Surveillance, then, manifests as a “scientific” rationalisation of complex social hieroglyphs (such as reproductive health) into formats that enable administrative interventions by the modern state. Lyon (2001) has also emphasised how the body emerged as the site of surveillance so that the disciplining of the “irrational, sensual body”, essential to the functioning of the modern nation-state, could effectively take place.

Questioning the Information and Communications Technology for Development (ICT4D) and Big Data for Development (BD4D) Rhetoric

Information and Communications Technology (ICT) and data-driven approaches to the development of a robust health information system, and by extension, welfare, have been offered as solutions to these inequities and exclusions in access to maternal and reproductive healthcare in the country.

The move towards data-driven development in the country commenced with the introduction of the Health Management Information System in Andhra Pradesh in 2008, and of the Mother and Child Tracking System (MCTS) nationally in 2011. These are reproductive health information systems (HIS) that collect granular data about each pregnancy from the antenatal to the post-natal period, at the level of each sub-centre as well as each primary and community health centre. The introduction of HIS comprised cross-sectoral digitisation measures that were part of the larger national push towards e-governance; along with health, thirty other distinct areas of governance, from land records to banking to employment, were identified for this move towards the digital provisioning of services (MeitY 2015).

HIS have been seen as playing a critical role in the ecosystem of health service provision globally. HIS-based interventions in reproductive health programming have been envisioned as a means of: (i) improving access to services in the context of a healthcare system ridden with inequalities; (ii) improving the quality of services provided; and (iii) producing better quality data to facilitate the objectives of India’s RCH programme, including family planning and population control. Accordingly, starting in 2018, the MCTS is being replaced by the RCH portal in a phased manner. In areas where the ANMOL (ANM Online) application has been introduced, the RCH portal captures data in real time through tablets provided to health workers (MoHFW 2015).

A proposal to mandatorily link Aadhaar with data on pregnancies and abortions through the MCTS/RCH has been made by the union minister for Women and Child Development as a deterrent to gender-biased sex selection (Tembhekar 2016). The proposal stems from the prohibition of gender-biased sex selection under the Pre-Conception and Pre-Natal Diagnostic Techniques (PCPNDT) Act, 1994. The approach taken so far under the PCPNDT Act has been to regulate the use of technologies involved in sex determination. However, the steady decline in the national sex ratio since the passage of the Act is a clear indication that the regulation of such technology has been largely ineffective. A national policy linking Aadhaar with abortions would aim to discourage gender-biased sex selection through state surveillance, in direct violation of a female’s right to decisional privacy with regard to her own body.

Linking Aadhaar would also serve as a mechanism to enable direct benefit transfer (DBT) to the beneficiaries of the national maternal benefits scheme. Linking reproductive health services to the Aadhaar ecosystem has been critiqued because it excludes women with legitimate claims to abortions and other reproductive services and benefits, and because it heightens the risk of data breaches in a cultural fabric that already stigmatises abortions. The bodies on which this stigma is disproportionately placed, such as those of unmarried or disabled females, experience the harms of visibility through centralised surveillance mechanisms more acutely than others, being penalised for their deviance from cultural expectations. This is in accordance with the theory of “data extremes”, wherein marginalised communities are seen as living on the extremes of data capture, leading to a data regime that either refuses to recognise them as legitimate entities or subjects them to overpolicing in order to discipline deviance (Arora 2016). In both developed and developing contexts, the broader purpose of identity management has largely been to demarcate legitimate and illegitimate actors within a population, within the framework of either security or welfare.

Potential Harms of the Data Model of Reproductive Health Provisioning

Informational privacy and decisional privacy are critically shaped by data flows and security within the MCTS/RCH. Apart from role-based authentication, no standards for data sharing and storage, or for the anonymisation and encryption of data, have been implemented (NHSRC and Taurus Glocal 2011). The risks of this architectural design are further amplified in the context of the RCH/ANMOL, where data is captured in real time. In the absence of adequate safeguards against data leaks, real-time data capture risks publicising reproductive health choices in an already stigmatised environment. This opens up avenues for further dilution of autonomy in making future reproductive health choices.

Several core principles of informational privacy, such as limitations regarding data collection and usage, or informed consent, also need to be reworked within this context.[1] For instance, the centrality of the requirement of “free, informed consent” by an individual would need to be replaced by other models, especially in the context of reproductive health of rape survivors who are vulnerable and therefore unable to exercise full agency. The ability to make a free and informed choice, already dismantled in the context of contemporary data regimes, gets further precluded in such contexts. The constraints on privacy in decisions regarding the body are then replicated in the domain of reproductive data collection.

What is uniform across these digitisation initiatives is their treatment of maternal and reproductive health as solely a medical event, framed as a data scarcity problem. In doing so, they tend to reduce the understanding of reproductive health to measurable indicators that ignore the social determinants of health. For instance, several studies conducted in the rural Indian context have shown that the degree of women’s autonomy influences the uptake of pregnancy care, and that this uptake was associated with village-level indicators such as economic development, provisioning of basic infrastructure and social cohesion. These contextual factors get overridden in pervasive surveillance systems that treat reproductive healthcare as comprising only measurable indicators and behaviours, which are taken to depend on the individual behaviour of practitioners and women themselves rather than on structural gaps within the system.

While traditionally associated with state governance, the contemporary surveillance regime is experienced as distinct from its earlier forms due to its reliance on a nexus between surveillance by the state and by private institutions and actors, with both legal frameworks and material apparatuses for data collection and sharing (Shepherd 2017). As with historical forms of surveillance, the harms of contemporary data regimes accrue disproportionately to already marginalised and dissenting communities and individuals. Data-driven surveillance has been critiqued for its excesses in multiple contexts globally, including in the domains of predictive policing, health management, and targeted advertising (Mason 2015). In the attempts to achieve these objectives, surveillance systems have been criticised for their reliance on replicating past patterns, reifying proximity to a hetero-patriarchal norm (Haggerty and Ericson 2000). Under data-driven surveillance systems, this proximity informs the preexisting boxes of identity from which algorithmic representations of the individual are formed. The boxes are defined according to the distinct objectives of the particular surveillance project, collating disparate data flows and recasting the singular offline self into various “data doubles” (Haggerty and Ericson 2000). Refractive rather than reflective, these data doubles have implications for the physical, embodied life of the individual, as an increasing share of service provisioning relies on them (Lyon 2001). Consider, for instance, apps on menstruation, fertility, and health, and wearables such as fitness trackers and pacers, that support corporate agendas around what a woman’s healthy body should look like, be, or behave like (Lupton 2014). Once viewed through the lens of power relations, the fetishised, apolitical notion of the data “revolution” gives way to what we may better understand as “dataveillance”.

Towards a Networked State and a Neo-liberal Citizen