Blog

Privacy Round Table, Delhi

Invite-Delhi.pdf: PDF document, 1157 kB (1185675 bytes)

International Principles on the Application of Human Rights to Communications Surveillance

necessaryandproportionateFINAL.pdf: PDF document, 191 kB (196308 bytes)

More than a Hundred Global Groups Make a Principled Stand against Surveillance

Nothing could demonstrate the urgency of this situation more than the recent revelations confirming the mass surveillance of innocent individuals around the world.

To move toward that goal, today we’re pleased to announce the formal launch of the International Principles on the Application of Human Rights to Communications Surveillance. The principles articulate what international human rights law – which binds every country across the globe – requires of governments in the digital age. They speak to a growing global consensus that modern surveillance has gone too far and needs to be restrained. They also give benchmarks that people around the world can use to evaluate and push for changes in their own legal systems.

The product of over a year of consultation among civil society, privacy and technology experts, including the Centre for Internet and Society, the principles have already been co-signed by over a hundred organisations from around the world. The process was led by Privacy International, Access, and the Electronic Frontier Foundation.

The release of the principles comes on the heels of a landmark report from the United Nations Special Rapporteur on the right to Freedom of Opinion and Expression, which details the widespread use of state surveillance of communications, stating that such surveillance severely undermines citizens’ ability to enjoy a private life, freely express themselves and enjoy their other fundamental human rights. And recently, the UN High Commissioner for Human Rights, Navi Pillay, emphasised the importance of applying human rights standards and democratic safeguards to surveillance and law enforcement activities.

"While concerns about national security and criminal activity may justify the exceptional and narrowly-tailored use of surveillance programmes, surveillance without adequate safeguards to protect the right to privacy actually risk impacting negatively on the enjoyment of human rights and fundamental freedoms," Pillay said.

The principles, summarised below, can be found in full at necessaryandproportionate.org. Over the next year and beyond, groups around the world will be using them to advocate for changes in how present laws are interpreted and how new laws are crafted.

We encourage privacy advocates, rights organisations, scholars from legal and academic communities, and other members of civil society to support the principles by adding their signature.

To sign, please visit https://www.necessaryandproportionate.org/about

Summary of the 13 principles

- Legality: Any limitation on the right to privacy must be prescribed by law.

- Legitimate Aim: Laws should only permit communications surveillance by specified State authorities to achieve a legitimate aim that corresponds to a predominantly important legal interest that is necessary in a democratic society.

- Necessity: Laws permitting communications surveillance by the State must limit surveillance to that which is strictly and demonstrably necessary to achieve a legitimate aim.

- Adequacy: Any instance of communications surveillance authorised by law must be appropriate to fulfill the specific legitimate aim identified.

- Proportionality: Decisions about communications surveillance must be made by weighing the benefit sought to be achieved against the harm that would be caused to users’ rights and to other competing interests.

- Competent judicial authority: Determinations related to communications surveillance must be made by a competent judicial authority that is impartial and independent.

- Due process: States must respect and guarantee individuals' human rights by ensuring that lawful procedures that govern any interference with human rights are properly enumerated in law, consistently practiced, and available to the general public.

- User notification: Individuals should be notified of a decision authorising communications surveillance with enough time and information to enable them to appeal the decision, and should have access to the materials presented in support of the application for authorisation.

- Transparency: States should be transparent about the use and scope of communications surveillance techniques and powers.

- Public oversight: States should establish independent oversight mechanisms to ensure transparency and accountability of communications surveillance.

- Integrity of communications and systems: States should not compel service providers, or hardware or software vendors to build surveillance or monitoring capabilities into their systems, or to collect or retain information.

- Safeguards for international cooperation: Mutual Legal Assistance Treaties (MLATs) entered into by States should ensure that, where the laws of more than one State could apply to communications surveillance, the available standard with the higher level of protection for users should apply.

- Safeguards against illegitimate access: States should enact legislation criminalising illegal communications surveillance by public and private actors.

The Audacious ‘Right to Be Forgotten’

Perhaps less dire: what if someone decides that she no longer wants the history of her internet activity stored in online systems?

So far, there has been confusion over what should be done, and what realistically can be done about this type of permanent presence on a platform as complex and international in scope as the internet. But now, the idea of a right to be forgotten may be able to define the rights and responsibilities in dealing with unwanted data.

The right to be forgotten is an interesting and highly contentious concept currently being debated in the new European Union Data Protection Regulations.[1]

The Data Protection Regulation Bill was proposed in 2012 by EU Commissioner Viviane Reding and stands to replace the EU’s previous Data Protection law, which was enacted in 1995. Referred to as the “right to be forgotten” (RTBF), article 17 of the proposal would essentially allow an EU citizen to require service providers to “take all reasonable steps” to remove his or her personal data from the internet, as long as there is no “legitimate” reason for the provider to retain it.[1] Despite the evident emphasis on personal privacy, the proposal is surrounded by controversy and faces resistance from many parties. There is a range of concerns over the ramifications RTBF could bring.

Not only are major IT companies staunchly opposed to the daunting task of being responsible for the erasure of data floating around the web, but governments like the United States and even Great Britain are objecting to the proposal as well.[2],[3]

From a commercial standpoint, IT companies and US lobbying forces view the concept of RTBF as a burden and a waste of resources for service providers to implement. Largely due to the RTBF clause, the new EU Data Protection proposal as a whole has witnessed intense, “unprecedented” lobbying by the largest US tech companies and US lobby groups[4],[5]. From a different angle, there are those like Great Britain, whose grievances with the RTBF lie in its overzealous aim and insatiable demands.[2] There are doubts as to whether a company will even be able to track down and erase all forms of the data in question. The British Ministry of Justice stated, "The UK does not support the right to be forgotten as proposed by the European commission. The title raises unrealistic and unfair expectations of the proposals."[2] Many experts share these feasibility concerns. The Council of European Professional Informatics Societies (CEPIS) wrote a short report on the ramifications of cloud computing practices in 2011, in which it confirmed, “It is impossible to guarantee complete deletion of all copies of data. Therefore it is difficult to enforce mandatory deletion of data. Mandatory deletion of data should be included into any forthcoming regulation of Cloud Computing services, but still it should not be relied on too much: the age of a ‘Guaranteed complete deletion of data’, if it ever existed has passed."[6]

Feasibility aside, the most compelling issue in the debate over RTBF is the demanding challenge of balancing and prioritizing parallel rights. When it comes to forced data erasure, conflicts between the right to be forgotten and freedom of speech and expression easily arise. Which right takes precedence over the other?

Some RTBF opponents fear that RTBF will hinder freedom of speech. They have a valid point. What is the extent of personal data erasure? Abuse of RTBF could result in some strange, Orwellian cyberspace where the mistakes or blemishes of society are all erased or constantly amended, and only positivity fills the internet. There are reasonable fears that a chilling effect may come into play once providers face the hefty noncompliance fines of the Data Protection law, and begin to automatically opt for customer privacy over considerations for freedom of expression. Moreover, what safeguards may be in place to prevent politicians or other public figures from removing bits of unwanted coverage?

Although these examples are extreme, considerations like these need to be made in the development of this law. With the amount of backlash from various entities, it is clear that a concept like the right to be forgotten could not exist as a simple, generalized law. It needs refinement.

Still, the concept of an RTBF is not without its supporters. Viktor Mayer-Schönberger, professor of Internet Governance at the Oxford Internet Institute, considers RTBF implementation feasible and necessary, saying that even if it is difficult to remove all traces of an item, "it might be in Google's back-up, but if 99% of the population don't have access to it you have effectively been deleted."[7] Additionally, he claims that the undermining of freedom of speech and expression is "a ridiculous misstatement."[7] To him, the right to be forgotten is tied intricately to the important and natural process of forgetting things of the past.

Moreover, the Data Protection Regulation does mention certain exceptions for the RTBF, including protection for "journalistic purposes or the purpose of artistic or literary expression." [1] The problem, however, is the seeming contradiction between the RTBF and its own exceptions. In practice, it will be difficult to reconcile the powers granted by the RTBF with the limitations claimed in other sections of the Data Protection Regulation.

Currently, there are a few clean and straightforward implementations of RTBF. One would be the removal of mined user data which has been accumulated by service providers. Here, invoking the right would be possible once a person has deleted accounts or canceled contracts with a service (thereby fulfilling the notion that the service no longer has a "legitimate" reason to retain the data). Another may be in the case of personal data given by minors who later want their data removed, which is an important example mentioned in Reding’s original proposal.[4] These narrow cases are some of the only instances where RTBF may be used without fear of interference with other social rights. Broader implementations of the RTBF concept, in its current unrefined form, may create too many conflicts with other freedoms, especially freedom of expression.

Overall, the Right to Be Forgotten is a noble concept, born out of concern for the citizen being overpowered by the internet. As an early EU publication states, "The [RTBF] rules are about empowering people, not about erasing past events or restricting the freedom of the press."[8] But at this point, too many clear details seem to be lacking from the draft design of the RTBF. There is concern that without proper deliberation, the concept could lead to unforeseen and undesirable outcomes. Privacy is a fundamental right that deserves to be protected, but policy makers cannot blindly follow the ideals of one right to the point where it interferes with other aspects of society.

Fortunately, recent amendment proposals have attempted some refinement of the bill. Jeffrey Rosen writes in the Stanford Law Review about a certain key concept that could help legitimize the right, namely an amendment proposing that only personally contributed data may be rescinded.[9] This would help avoid interference with others’ rights to expression, and provide limitations on the extent of right to be forgotten claims. As Leslie Harris, president of the Center for Democracy and Technology, wrote in the Huffington Post, amendments are needed which can specifically define personal data in the RTBF sense, thereby distinguishing which types of data may be removed.[10] In the coming months, the European Parliament will be considering such amendments to the proposal. This time will be crucial, as it will determine whether the right to be forgotten develops into a viable option for the EU’s 500 million citizens.

But even after terms are defined and after safeguards are established, this underlying philosophical question remains:

Should a person be able to reclaim the right to privacy after willingly giving it up in the first place?

The RTBF is obviously a contentious topic, one which may need to be gauged individually by nation states; it will soon be revealed whether the EU becomes the first to adopt the right. If RTBF fails to pass in the European Parliament, I would hope that it at least serves to remind people of the permanence of the data which they add to the internet, further incentivizing careful consideration of what one yields to the web. Rights frequently evolve and expand to meet societal or technological advances. If we are to expand the concept of privacy, however, then we must do so with proper consideration, so that privacy may not gain disproportionate power over other rights, or vice versa.

India's National Cyber Security Policy in Review

What the Policy achieves in breadth, however, it often lacks in depth. Vague, cursory language ultimately prevents the Policy from being anything more than an aspirational document. In order to translate the Policy’s goals into an effective strategy, a great deal more specificity and precision will be required.

The Scope of National Cyber Security

Where such precision is most required is in definitions. Having no legal force itself, the Policy arguably does not require the sort of legal precision one would expect of an act of Parliament, for example. Yet the Policy deals in terms plagued with ambiguity, cyber security not the least among them. In forgoing basic definitions, the Policy fails to define its own scope, and as a result it proves remarkably broad and arguably unfocused.

The Policy’s preamble comes close to defining cyber security in paragraph 5 when it refers to "cyber related incident[s] of national significance" involving "extensive damage to the information infrastructure or key assets…[threatening] lives, economy and national security." Here at least is a picture of cyber security on a national scale, a picture which would be quite familiar to Western policymakers: computer security practices "fundamental to both protecting government secrets and enabling national defence, in addition to protecting the critical infrastructures that permeate and drive the 21st century global economy."[*] The paragraph 5 definition of sorts becomes much broader, however, when individuals and businesses are introduced, and threats like identity theft are brought into the mix.

Here the Policy runs afoul of a common pitfall: conflating threats to the state or society writ large (e.g. cyber warfare, cyber espionage, cyber terrorism) with threats to businesses and individuals (e.g. fraud, identity theft). Although both sets of threats may be fairly described as cyber security threats, only the former is worthy of the term national cyber security. The latter would be better characterized as cyber crime. The distinction is an important one, lest cyber crime be “securitized,” or elevated to an issue of national security. National cyber security has already provided the justification for the much decried Central Monitoring System (CMS). Expanding the range of threats subsumed under this rubric may provide a pretext for further surveillance efforts on a national scale.

Apart from mission creep, this vague and overly broad conception of national cyber security risks overwhelming an as yet underdeveloped system with more responsibilities than it may be able to handle. Where cyber crime might be left up to the police, its inclusion alongside true national-level cyber security threats in the Policy suggests it may be handled by the new "nodal agency" mentioned in section IV. Thus clearer definitions would not only provide the Policy with a more focused scope, but they would also make for a more efficient distribution of already scarce resources.

What It Gets Right

Definitions aside, the Policy actually gets a lot of things right — at least as an aspirational document. It certainly covers plenty of ground, mentioning everything from information sharing to procedures for risk assessment / risk management to supply chain security to capacity building. It is a sketch of what could be a very comprehensive national cyber security strategy, but without more specifics, it is unlikely to reach its full potential. Overall, the Policy is much of what one might expect from a first draft, but certain elements stand out as worthy of special consideration.

First and foremost, the Policy should be commended for its commitment to “[safeguarding] privacy of citizen’s data” (sic). Privacy is an integral component of cyber security, and in fact other states’ cyber security strategies have entire segments devoted specifically to privacy. India’s Policy could stand to be more specific as to the scope of these safeguards, however. Does the Policy aim primarily to safeguard data from criminals? Foreign agents? Could it go so far as to protect user data even from its own agents? Indeed this commitment to privacy would appear at odds with the recently unveiled CMS. Rather than merely paying lip service to the concept of online privacy, the government would be well advised to pass legislation protecting citizens’ privacy and to use such legislation as the foundation for a more robust cyber security strategy.

The Policy also does well to advocate “fiscal schemes and incentives to encourage entities to install, strengthen and upgrade information infrastructure with respect to cyber security.” Though some have argued that such regulation would impose inordinate costs on private businesses, anyone with a cursory understanding of computer networks and microeconomics could tell you that “externalities in cybersecurity are so great that even the freest free market would fail”—to quote expert Bruce Schneier. In less academic terms, a network is only as strong as its weakest link. While it is true that many larger enterprises take cyber security quite seriously, small and medium-sized businesses either lack immediate incentives to invest in security (e.g. no shareholders to answer to) or more often lack the basic resources to do so. Some form of government transfer for cyber security related investments could thus go a long way toward shoring up the country’s overall security.

The Policy also “[encourages] wider usage of Public Key Infrastructure (PKI) within Government for trusted communication and transactions.” It is surprising, however, that the Policy does not mandate the usage of PKI. In general, the document provides relatively few details on what specific security practices operators of Critical Information Infrastructure (CII) can or should implement.

Where It Goes Wrong

One troubling aspect of the Policy is its ambiguous language with respect to acquisition policies and supply chain security in general. The Policy, for example, aims to “[mandate] security practices related to the design, acquisition, development, use and operation of information resources” (emphasis added). Indeed, section VI, subsection A, paragraph 8 makes reference to the “procurement of indigenously manufactured ICT products,” presumably to the exclusion of imported goods. Although supply chain security must inevitably factor into overall cyber security concerns, such restrictive acquisition policies could not only deprive critical systems of potentially higher-quality alternatives but—depending on the implementation of these policies—could also sharpen the vulnerabilities of these systems.

Not only do these preferential acquisition policies risk mandating lower quality products, but it is unlikely they will be able to keep up with the rapid pace of innovation in information technology. The United States provides a cautionary tale. The U.S. National Institute of Standards and Technology (NIST), tasked with producing cyber security standards for operators of critical infrastructure, made its first update to a 2005 set of standards earlier this year. Other regulatory agencies, such as the Federal Energy Regulatory Commission (FERC), move at a marginally faster pace yet nevertheless are delayed by bureaucratic processes. FERC has already moved to implement Version 5 of its Critical Infrastructure Protection (CIP) standards, nearly a year before the deadline for Version 4 compliance. The need for new standards thus outpaces the ability of industry to effectively implement them.

Fortunately, U.S. cyber security regulation has so far been technology-neutral. Operators of Critical Information Infrastructure are required only to ensure certain functionalities and not to procure their hardware and software from any particular supplier. This principle ensures competition and thus security, allowing CII operators to take advantage of the most cutting-edge technologies regardless of name, model, etc. Technology neutrality does of course raise risks, such as those emphasized by the Government of India regarding Huawei and ZTE in 2010. Risk assessment must, however, remain focused on the technology in question and avoid politicization. India’s cyber security policy can be technology neutral as long as it follows one additional principle: trust but verify.

Verification may be facilitated by the use of free and open-source software (FOSS). FOSS provides security through transparency as opposed to security through obscurity and thus enables more agile responses to security vulnerabilities. Users can identify and patch bugs themselves, or otherwise take advantage of the broader user community for such fixes. Thus open-source software promotes security in much the same way that competitive markets do: by accepting a wide range of inputs.

Despite the virtues of FOSS, there are plenty of good reasons to run proprietary software, e.g. fitness for purpose, cost, and track record. Proprietary software makes verification somewhat more complicated but not impossible. Source code escrow agreements have recently gained some traction as a verification measure for proprietary software, even with companies like Huawei and ZTE. In 2010, the infamous Chinese telecommunications giants persuaded the Indian government to lift its earlier ban on their products by concluding just such an agreement. Clearly trust but verify is eminently practicable, and thus so is technology neutrality.

What’s Missing

Level of detail aside, what is most conspicuously absent from the new Policy is any framework for institutional cooperation beyond 1) the designation of CERT-In “as a Nodal Agency for coordination of all efforts for cyber security emergency response and crisis management” and 2) the designation of the “National Critical Information Infrastructure Protection Centre (NCIIPC) to function as the nodal agency for critical information infrastructure protection in the country.” The Policy mentions additionally “a National nodal agency to coordinate all matters related to cyber security in the country, with clearly defined roles & responsibilities.” Some clarity with regard to roles and responsibilities would certainly be in order. Even among these three agencies—assuming they are all distinct—it is unclear who is to be responsible for what.

More confusing still is the number of other pre-existing entities with cyber security responsibilities, in particular the National Technical Research Organization (NTRO), which in an earlier draft of the Policy was to have authority over the NCIIPC. The Ministry of Defense likewise has bolstered its cyber security and cyber warfare capabilities in recent years. Is it appropriate for these to play a role in securing civilian CII? Finally, the already infamous Central Monitoring System, justified predominantly on the very basis of cyber security, receives no mention at all. For a government that is only now releasing its first cyber security policy, India has developed a fairly robust set of institutions around this issue. It is disappointing that the Policy does not more fully address questions of roles and responsibilities among government entities.

Not only is there a lack of coordination among government cyber security entities, but there is no mention of how the public and private sectors are to cooperate on cyber security information, other than oblique references to “public-private partnerships.” Certainly there is a need for information sharing, which is currently facilitated in part by the sector-level CERTs. More interesting, however, is the question of liability for high-impact cyber attacks. To whom are private CII operators accountable in the event of disruptive cyber attacks on their systems? This legal ambiguity must necessarily be resolved in conjunction with the “fiscal schemes and incentives” also alluded to in the Policy in order to motivate strong cyber security practices among all CII operators and the public more broadly.

Next Steps

India’s inaugural National Cyber Security Policy is by and large a step in the right direction. It covers many of the most pressing issues in national cyber security and lays out a number of ambitious goals, ranging from capacity building to robust public-private partnerships. To realize these goals, the government will need a much more detailed roadmap.

Firstly, the extent of the government’s proposed privacy safeguards must be clarified and ideally backed by a separate piece of privacy legislation. As Benjamin Franklin once said, “Those who would give up essential Liberty, to purchase a little temporary Safety, deserve neither Liberty nor Safety.” When it comes to cyberspace, the Indian people must demand both liberty and safety.

Secondly, the government should avoid overly preferential acquisition policies and allow risk assessments to be technologically rather than politically driven. Procurement should moreover be technology-neutral. Open source software and source code escrow agreements can facilitate the verification measures that make technology neutrality work.

Finally, to translate this policy into a sound strategy will necessarily require that India’s various means be directed toward specific ends. The Policy hints at organizational mapping with references to CERT-In and the NCIIPC, but the roles and responsibilities of other government agencies as well as the private sector remain underdetermined. Greater clarity on these points would improve inter-agency and public-private cooperation—and thus, one hopes, security—significantly.

[*]. Melissa E. Hathaway and Alexander Klimburg, “Preliminary Considerations: On National Cyber Security,” in National Cyber Security Framework Manual, ed. Alexander Klimburg (Tallinn, Estonia: NATO Cooperative Cyber Defence Centre of Excellence, 2012), 13.

Guidelines for the Protection of National Critical Information Infrastructure: How Much Regulation?

Yet the National Cyber Security Policy, taken together with what little is known of the as-yet restricted guidelines for CII protection, raises troubling questions, particularly regarding the regulation of cyber security practices in the private sector. Whereas the current Policy suggests the imposition of certain preferential acquisition policies, India would be best advised to maintain technology neutrality to ensure maximum security.

According to Section 70(1) of the Information Technology Act, Critical Information Infrastructure (CII) is defined as a “computer resource, the incapacitation or destruction of which, shall have debilitating impact on national security, economy, public health or safety.” In one of the 2008 amendments to the IT Act, the Central Government granted itself the authority to “prescribe the information security practices and procedures for such protected system[s].” These two paragraphs form the legal basis for the regulation of cyber security within the private sector.

Such basis notwithstanding, private cyber security remains almost completely unregulated. According to the Intermediary Guidelines [pdf], intermediaries are required to report cyber security incidents to India’s national-level computer emergency response team (CERT-In). Other than this relatively small stipulation, the only regulation in place for CII exists at the sector level. Last year the Reserve Bank of India mandated that each bank in India appoint a chief information officer (CIO) and a steering committee on information security. The finance sector is also the only sector of the four designated “critical” by the Department of Electronics and Information Technology (DEIT) Cyber Security Strategy to have established a sector-level CERT, which released a set of non-compulsory guidelines [pdf] for information security governance in late 201

The new guidelines for CII protection seek to reorganize the government’s approach to CII. According to a Times of India article on the new guidelines, the NTRO will outline a total of eight sectors (including energy, aviation, telecom and National Stock Exchange) of CII and then “monitor if they are following the guidelines.” Such language, though vague and certainly unsubstantiated, suggests the NTRO may ultimately be responsible for enforcing the “[mandated] security practices related to the design, acquisition, development, use and operation of information resources” described in the Cyber Security Policy. If so, operators of systems deemed critical by the NTRO or by other authorized government agencies may soon be subject to cyber security regulation—with teeth.

To be sure, some degree of cyber security regulation is necessary. After all, large swaths of the country’s CII are operated by private industry, and poor security practices on the part of one operator can easily undermine the security of the rest. To quote security expert Bruce Schneier, “the externalities in cybersecurity are so great that even the freest free market would fail.” In less academic terms, networks are only as secure as their weakest links. While it is true that many larger enterprises take cyber security quite seriously, small and medium-sized businesses either lack immediate incentives to invest in security (e.g. no shareholders to answer to) or more often lack the basic resources to do so. Some form of government transfer for cyber security related investments could thus go a long way toward shoring up the country’s overall security.

Yet regulation may well extend beyond the simple “fiscal schemes and incentives” outlined in section IV of the Policy and “provide for procurement of indigenously manufactured ICT products that have security implications.” Such, at least, was the aim of the Preferential Market Access (PMA) Policy recently put on hold by the Prime Minister’s Office (PMO). Under pressure from international industry groups, the government has promised to review the PMA Policy, with the PMO indicating it may strike out clauses “regarding preference to domestic manufacturer[s] on security related products that are to be used by private sector.” If the government’s aim is indeed to ensure maximum security (rather than to grow an infant industry), it would be well advised to extend this approach to the Cyber Security Policy and the new guidelines for CII protection.

Although there is a national security argument to be made in favor of such policies—namely that imported ICT products may contain “backdoors” or other nefarious flaws—there are equally valid arguments to be made against preferential acquisition policies, at least for the private sector. First and foremost, it is unlikely that India’s nascent cyber security institutions will be able to regulate procurement in such a rapidly evolving market. Indeed, U.S. authorities have been at pains to set cyber security standards, especially in the past several years. Secondly, by mandating the procurement of indigenously manufactured products, the government may force private industry to forgo higher quality products. Absent access to source code or the ability to effectively reverse engineer imported products, buyers should make decisions based on the products’ performance records, not geo-economic considerations like country of origin. Finally, limiting procurement to a specific subset of ICT products likewise restricts the set of security vulnerabilities available to hackers. Rather than improve security, however, a smaller, more distinct set of vulnerabilities may simply make networks easier targets for the sorts of “debilitating” attacks the Policy aims to avert.

As India broaches the difficult task of regulating cyber security in the private sector, it must emphasize flexibility above all. On one hand, the government should avoid preferential acquisition policies which risk a) overwhelming limited regulatory resources, b) saddling CII operators with subpar products, and/or c) differentiating the country’s attack surface. On the other hand, the government should encourage certain performance standards through precisely the sort of “fiscal schemes and incentives” alluded to in the Cyber Security Policy. Regulation should focus on what technology does and does not do, not who made it or what rival government might have had their hands in its design. Ultimately, India should adopt a policy of technology neutrality, backed by the simple principle of trust but verify. Only then can it be truly secure.

CIS Cybersecurity Series (Part 9) - Saikat Datta

"Anonymous speech, in countries which have extremely severe systems of governments, which do not have freedom, etcetera, is welcome. But in a democracy like India, I do not see the need for anonymous speech because it is anyways guaranteed by the Constitution of India. So, no, I do not see the need for anonymity in an open and democratic state like India and I would be seriously worried if such a requirement comes up. Shouldn't I strive to be ideal? The ideal suggests that the constitution has guaranteed freedom of speech. Anonymity, for a time being may be acceptable to some people but I would like a situation where a person, without having to seek anonymity, can speak about anything and not be prosecuted by the state, or persecuted by society. And that is the ideal situation that I would like to strive for." - Saikat Datta, Resident Editor, DNA, Delhi.

Centre for Internet and Society presents its ninth installment of the CIS Cybersecurity Series.

The CIS Cybersecurity Series seeks to address hotly debated aspects of cybersecurity and hopes to encourage wider public discourse around the topic.

Saikat Datta is a journalist who began his career in December 1996 and has worked with several publications like The Indian Express, the Outlook magazine and the DNA newspaper. He is currently the Resident Editor of DNA, Delhi. Saikat has authored a book on India's Special Forces and presented papers at seminars organized by the Centre for Land Warfare Studies, the Centre for Air Power Studies and the National Security Guards. He has also been awarded the International Press Institute Award for investigative journalism, the National RTI award in the journalism category and the Jagan Phadnis Memorial Award for investigative journalism.

'Ethical Hacker' Saket Modi Calls for Stronger Cyber Security Discussions

This research was undertaken as part of the 'SAFEGUARDS' project that CIS is undertaking with Privacy International and IDRC.

At the Confederation of Indian Industry (CII) conference on July 13, titled “ACT – Achieving Cyber-Security Together,” Saket Modi, the youngest speaker on the agenda, delivered an impromptu talk which lambasted the weaknesses of modern cyber security discussions, enlightened the audience on modern capabilities and challenges of leading cyber security groups, and ultimately received a standing ovation from the crowd. As a later speaker commented, Modi’s controversial opinions and practitioner insight had "set the auditorium ablaze for the remainder of the evening". Since then the Centre for Internet and Society (CIS) has had the pleasure of interviewing Saket Modi over Skype.

It is quite easy to find accounts of Saket Modi's introduction to hacking just by typing his name into a search engine. Faced with the pressure of failing, a teenage Saket discovered how to hack into his high school Chemistry teacher’s test and answer database. After successfully obtaining the answers, and revealing his wrongdoing to his teacher, the young man grew intrigued by the possibilities of hacking. "I thought, if I could do this in a couple hours, four hours, then what might I be able to do in four days, four weeks, four months?"

Nowadays, Modi describes himself and his Lucideus team as "ethical hackers", a term recently espoused by hacker groups in the public eye. As opposed to "hacktivists", who utilize hacking methods (including attacks) to achieve or bring awareness to political issues, ethical hackers claim to exclusively use their computer skills to support defenses. At first, incorporation of ethics into a for-profit organization’s game plan may seem confusing, as it leaves room for key questions, like how does one determine which clients constitute ethical business? When asked, however, Modi clarifies by explaining how the ethics are not manifest in the entities Lucideus supports, but instead inherent in the choice of building defensive networks as opposed to using their skills for attack or debilitation. Nevertheless, considerations remain as to whether supporting the cyber security of some entities can lead to the insecurity of others, for example, strengthening the agencies which work in covert cyber espionage. On this point, Modi seems more ambivalent, saying "it depends on a case by case basis". But he still believes cyber security is a right that should be enjoyed by all, "entitled to [you] the moment you set foot on the internet".

As an experienced professional in the field who often gives input on major cyber policy decisions, Modi emphasizes the necessity of youth engagement in cyber security practice and policy. He calls his age bracket the “web generation,” those who have “grown with technology.” According to Modi, no one over 50 or 60 years of age can properly meet the current challenges of the cyber security realm. It is "a sad thing" that those older leaders carry the most power in policy making, and that they often struggle both to understand and to accept modern technological capabilities. For the public, businesses, and also government, there are misconceptions about the importance of cyber security and the extent of modern cyber threats, threats which Modi and his company claim to combat regularly. "About 90 per cent of the crimes that take place in cyber space are because of lack of knowledge, rather than the expertise of the hacker,” he explains. Modi mentions a few basic misconceptions, as simple as, "if I have an anti-virus, my system is secured" or "if you have HTTPS certificate and SSL connection, your system is secured". “These are like wearing an elbow guard while playing cricket,” Modi says. “If the ball comes at the elbow then you are protected, but what about the rest of the body?”

This highlights another problem evident in India’s current cyber security scene, the problem of lacking “quality institutes to produce good cyber security experts.” For example, Modi takes offence at there not being “a single institute which is providing cyber security at the undergraduate level [in India].” He alludes to the recently unveiled National Cyber Security Policy, specifically the call for five lakh cyber security experts in upcoming years. He calls this “a big figure,” but agrees that there needs to be a lot more awareness throughout the nation. “You really have to change a lot of things,” he says, “in order to get the right things in the right place here in India.”

When considering citizen privacy in relation to cyber security, and the relationship between the two (be it direct or inverse), Saket Modi says the important factor is the governing body, because the issue ultimately resolves to trust. Citizens must trust the “right people with the right qualifications” to store and protect their sensitive data, and to respect privacy. Modi is no novice to the importance of personal data protection, and his company works with a plethora of extremely sensitive information relating to both their clients’ data and their clients’ clients’ data, so it operates with due care lest it create a “wikileaks part two.”

On internationalization and cyber security, he views the connection between the two as natural, intrinsic. “Cyberspace has added a new dimension to humanity,” says Modi, and explains how former constructs of physical constraints and linear bounds no longer apply. International cooperation is especially pertinent, according to Modi, because the greatest challenge for catching today’s criminal hackers is their international anonymity, “the ability to jump from one country to the other in a matter of milliseconds.”

With the extent of the challenges facing cyber defense specialists, and with the somewhat disorderly current state of Indian cyber security, it is curious to see that Saket Modi has devoted himself to the "ethical" side of hacking. Why haven't he and the rest of the Lucideus team resorted to offensive hacking, given Modi's claim that the majority of the world's cyber attacks are committed by people who, like them, fall between the ages of 15 and 24? Apparently, the answer is simple. “We believe in the need for ethical hacking,” he says. “We believe in the purpose of making the internet safer.”

Ethical Issues in Open Data

The main panelists, Carly Nyst and Sam Smith from Privacy International, as well as Steve Song from the International Development Research Centre, were joined by roughly a dozen other privacy and development researchers from around the globe in the hour long session.

The primary issue of the meeting was the concern over modern capabilities of cross-analytics for de-anonymizing data sets and revealing personally identifiable information (PII) in open data. Open data can constitute publicly available information such as budgets, infrastructures, and population statistics, as long as the data meets the three open data characteristics: accessibility, machine readability, and availability for re-use. “Historically,” said Tim Davies, “public registers have been protected through obscurity.” However, both the capabilities of data analysts and the definition of personal data have continued to expand in recent years. This concern thus presents a conflict between researchers who advocate governments releasing open data reports, and researchers who emphasize privacy in the developing world.

Steve Song, advisor to IDRC Information & Networks program, spoke of the potential collateral damage that comes with publishing more and more types of information. Song addressed the imperative of the meeting in saying, “privacy needs to be a core part of open data conversation.” In his presentation, he gave a particularly interesting example of the tensions between public and private information implications. Following the infamous 2012 school shooting in Newtown, Connecticut, the information on Newtown’s gun permit owning citizens (made publicly available through America’s Freedom of Information Act) was aggregated into an interactive map which revealed the citizens’ addresses. This obviously became problematic for the Newtown community, as the map not only singled out homes which exercised their right to bear arms but also indirectly revealed which homes were without firearm protection and thereby more vulnerable to theft and crime. The Newtown example clearly demonstrates the relationship (and conflict) between open data and privacy; it resolves to the conflict between the right to information and the right to privacy.

An apparent issue surrounding open data is its perceived binary nature. Many advocates view data as either open or not; any intermediate boundaries are seen only as governments limiting data accessibility. Against this backdrop, meeting attendee Raed Sharif raised a counter-argument from the open data side. Sharif noted how, inversely, privacy conceptions may form a threat to open data. He mentioned how governments could take advantage of privacy arguments to justify their refusal to publish open reports.

However, Carly Nyst summarized the privacy concern and argument in her remarks near the end of the meeting. Namely, she reasoned that the open data mission is viable, if only limited to generic data, i.e., data about infrastructure, or other information that is in no way personal. Doing so will avoid obstructions of individual privacy. Until more advanced anonymization techniques can be achieved, which can overcome modern re-identification methods, publicly publishing PII may prove too risky. It was generally agreed upon during the meeting that open data is not inherently bad, and in fact its analysis and availability can be beneficial, but the threat of its misuse makes it dangerous. For the future of open data, researchers and advocates should perhaps consider more nuanced approaches to the concept in order to respect considerations for other ethical issues, such as privacy.

FinFisher in India and the Myth of Harmless Metadata

In light of PRISM, the Central Monitoring System (CMS) and other such surveillance projects in India and around the world, the question of whether the collection of metadata is “harmless” has arisen.[1] In order to examine this question, FinFisher[2] — surveillance spyware — has been chosen as a case study to briefly examine to what extent the collection and surveillance of metadata can potentially violate the right to privacy and other human rights. FinFisher has been selected as a case study not only because its servers have been recently found in India[3] but also because its “remote monitoring solutions” appear to be very pervasive even on the mere grounds of metadata.

FinFisher in India

FinFisher is spyware which has the ability to take control of target computers and capture even encrypted data and communications. The software is designed to evade detection by anti-virus software and has versions which work on mobile phones of all major brands.[4] In many cases, the surveillance suite is installed after the target accepts installation of a fake update to commonly used software.[5] Citizen Lab researchers have found three samples of FinSpy that masquerade as Firefox.[6]

FinFisher is a line of remote intrusion and surveillance software developed by Munich-based Gamma International. FinFisher products are sold exclusively to law enforcement and intelligence agencies by the UK-based Gamma Group.[7] A few months ago, it was reported that command and control servers for FinSpy backdoors, part of Gamma International's FinFisher “remote monitoring solutions”, were found in a total of 25 countries, including India.[8]

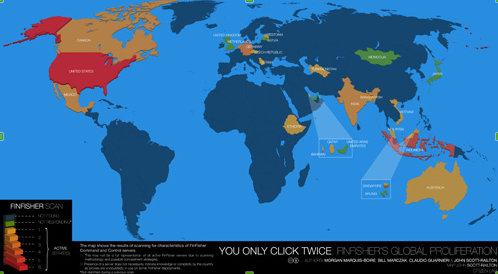

The following map, published by the Citizen Lab, shows the 25 countries in which FinFisher servers have been found.[9]

The above map shows the results of scanning for characteristics of FinFisher command and control servers.

FinFisher spyware was not found in the countries coloured blue, while the colour green is used for countries not responding. The countries using FinFisher range from shades of orange to shades of red, with the lightest shade of orange ranging to the darkest shade of red on a scale of 1-6, and with 1 representing the least active servers and 6 representing the most active servers in regards to the use of FinFisher. On a scale of 1-6, India is marked a 3 in terms of actively using FinFisher.[10]

Research published by the Citizen Lab reveals that FinSpy servers were recently found in India, which indicates that Indian law enforcement agencies may have bought this spyware from Gamma Group and might be using it to target individuals in India.[11] According to the Citizen Lab, FinSpy servers in India have been detected through the HostGator operator and the first digits of the IP address are: 119.18.xxx.xxx. Releasing complete IP addresses in the past has not proven useful, as the servers are quickly shut down and relocated, which is why only the first two octets of the IP address are revealed.[12]
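The partial disclosure described above, in which only the first two octets of an IPv4 address are published, can be sketched in a few lines of Python. This is only an illustration: the function name `mask_ip` is hypothetical, and the sample address comes from a range reserved for documentation, not from any FinSpy server.

```python
def mask_ip(address: str, keep_octets: int = 2) -> str:
    """Keep the first `keep_octets` octets of an IPv4 address, mask the rest."""
    octets = address.split(".")
    # Validate a plain dotted-quad address such as "192.0.2.10".
    if len(octets) != 4 or not all(o.isdigit() and int(o) <= 255 for o in octets):
        raise ValueError(f"not a dotted-quad IPv4 address: {address!r}")
    if not 0 <= keep_octets <= 4:
        raise ValueError("keep_octets must be between 0 and 4")
    # Replace every octet after the kept prefix with a placeholder.
    return ".".join(octets[:keep_octets] + ["xxx"] * (4 - keep_octets))


# Illustrative address from the reserved documentation range 192.0.2.0/24.
print(mask_ip("192.0.2.10"))  # 192.0.xxx.xxx
```

Publishing only the prefix still points to the hosting network while withholding the exact host, which fits the stated reason for not releasing complete addresses.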

The Citizen Lab's research reveals that FinFisher “remote monitoring solutions” were found in India, which, according to Gamma Group's brochures, include the following:

- FinSpy: hardware or software which monitors targets that regularly change location, use encrypted and anonymous communications channels and reside in foreign countries. FinSpy can remotely monitor computers and encrypted communications, regardless of where in the world the target is based. FinSpy is capable of bypassing 40 regularly tested antivirus systems, of monitoring the calls, chats, file transfers, videos and contact lists on Skype, of conducting live surveillance through a webcam and microphone, of silently extracting files from a hard disk, and of conducting live remote forensics on target systems. FinSpy is hidden from the public through anonymous proxies.[13]

- FinSpy Mobile: hardware or software which remotely monitors mobile phones. FinSpy Mobile enables the interception of mobile communications in areas without a network, and offers access to encrypted communications, as well as to data stored on the devices that is not transmitted. Some key features of FinSpy Mobile include the recording of common communications like voice calls, SMS/MMS and emails, the live surveillance through silent calls, the download of files, the country tracing of targets and the full recording of all BlackBerry Messenger communications. FinSpy Mobile is hidden from the public through anonymous proxies.[14]

- FinFly USB: hardware which is inserted into a computer and which can automatically install the configured software with little or no user-interaction and does not require IT-trained agents when being used in operations. The FinFly USB can be used against multiple systems before being returned to the headquarters and its functionality can be concealed by placing regular files like music, video and office documents on the device. As the hardware is a common, non-suspicious USB device, it can also be used to infect a target system even if it is switched off.[15]

- FinFly LAN: software which can deploy a remote monitoring solution on a target system in a local area network (LAN). Some of the major challenges law enforcement faces are mobile targets, as well as targets who do not open any infected files that have been sent via email to their accounts. FinFly LAN is not only able to deploy a remote monitoring solution on a target's system in local area networks, but it is also able to infect files that are downloaded by the target, to send fake software updates for popular software, and to infect the target by injecting the payload into visited websites. Some key features of the FinFly LAN include: discovering all computer systems connected to LANs, working in both wired and wireless networks, and remotely installing monitoring solutions through websites visited by the target. FinFly LAN has been used in public hotspots, such as coffee shops, and in the hotels of targets.[16]

- FinFly Web: software which can deploy remote monitoring solutions on a target system through websites. FinFly Web is designed to provide remote and covert infection of a target system by using a wide range of web-based attacks. FinFly Web provides a point-and-click interface, enabling the agent to easily create a custom infection code according to selected modules. It provides fully-customizable web modules, it can be covertly installed into every website and it can install the remote monitoring system even if only the email address is known.[17]

- FinFly ISP: hardware or software which deploys a remote monitoring solution on a target system through an ISP network. FinFly ISP can be installed inside the Internet Service Provider Network, it can handle all common protocols and it can select targets based on their IP address or Radius Logon Name. Furthermore, it can hide remote monitoring solutions in downloads by targets, it can inject remote monitoring solutions as software updates and it can remotely install monitoring solutions through websites visited by the target.[18]

Although FinFisher is supposed to be used for “lawful interception”, it has gained notoriety for targeting human rights activists.[19] According to Morgan Marquis-Boire, a security researcher and technical advisor at the Munk School and a security engineer at Google, FinSpy has been used in Ethiopia to target an opposition group called Ginbot.[20] Researchers have argued that FinFisher has been sold to Bahrain's government to target activists, and such allegations were based on an examination of malicious software which was emailed to Bahraini activists.[21] Privacy International has argued that FinFisher has been deployed in Turkmenistan, possibly to target activists and political dissidents.[22]

Many questions revolving around the use of FinFisher and its “remote monitoring solutions” remain unanswered, as there is currently inadequate proof of whether this spyware is being used to target individuals by law enforcement agencies in the countries where command and control servers have been found, such as India.[23] However, FinFisher's brochures which were circulated in the ISS world trade shows and leaked by WikiLeaks do reveal some confirmed facts: Gamma International claims that its FinFisher products are capable of taking control of target computers, of capturing encrypted data and of evading mainstream anti-virus software.[24] Such products are exhibited in the world's largest surveillance trade show and probably sold to law enforcement agencies around the world.[25] This alone unveils a concerning fact: spyware which is so sophisticated that it even evades encryption and anti-virus software is currently in the market and law enforcement agencies can potentially use it to target activists and anyone who does not comply with social conventions.[26] A few months ago, two Indian women were arrested after having questioned the shutdown of Mumbai for Shiv Sena patriarch Bal Thackeray's funeral.[27] Thus, it remains unclear what type of behaviour is targeted by law enforcement agencies and whether spyware, such as FinFisher, would be used in India to track individuals without a legally specified purpose.

Furthermore, India lacks privacy legislation which could safeguard individuals from potential abuse, while sections 66A and 69 of the Information Technology (Amendment) Act, 2008, empower Indian authorities with extensive surveillance capabilities.[28] While it remains unclear if Indian law enforcement agencies are using FinFisher spy products to unlawfully target individuals, it is a fact that FinFisher command and control servers have been found in India and that, if used, they could potentially have severe consequences on individuals' right to privacy and other human rights.[29]

The Myth of Harmless Metadata

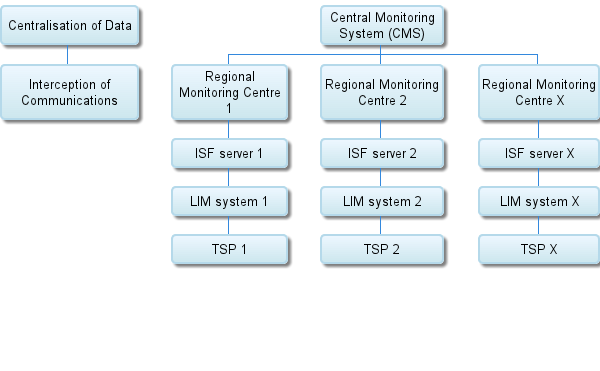

Over the last months, it has been reported that the Central Monitoring System (CMS) is being implemented in India, through which all telecommunications and Internet communications in the country are being centrally intercepted by Indian authorities. This mass surveillance of communications in India is enabled by the omission of privacy legislation and Indian authorities are currently capturing the metadata of communications.[30]

Last month, Edward Snowden leaked confidential U.S. documents on PRISM, the top-secret National Security Agency (NSA) surveillance programme that collects metadata through telecommunications and Internet communications. It has been reported that through PRISM, the NSA has tapped into the servers of nine leading Internet companies: Microsoft, Google, Yahoo, Skype, Facebook, YouTube, PalTalk, AOL and Apple.[31] While the extent to which the NSA is actually tapping into these servers remains unclear, it is certain that the NSA has collected metadata on a global level.[32] Yet, the question of whether the collection of metadata is “harmful” remains ambiguous.

According to the National Information Standards Organization (NISO), the term “metadata” is defined as “structured information that describes, explains, locates or otherwise makes it easier to retrieve, use or manage an information resource”. NISO claims that metadata is “data about data” or “information about information”.[33] Furthermore, metadata is considered valuable due to its following functions:

- Resource discovery

- Organizing electronic resources

- Interoperability

- Digital Identification

- Archiving and preservation

Metadata can be used to find resources by relevant criteria, to identify resources, to bring similar resources together, to distinguish dissimilar resources and to give location information. Electronic resources can be organized through the use of various software tools which can automatically extract and reformat information for Web applications. Interoperability is promoted through metadata, as describing a resource with metadata allows it to be understood by both humans and machines, which means that data can automatically be processed more effectively. Digital identification is enabled through metadata, as most metadata schemes include standard numbers for unique identification. Moreover, metadata enables the archival and preservation of large volumes of digital data.[34]

Surveillance projects, such as PRISM and India's CMS, collect large volumes of metadata, which include the numbers of both parties on a call, location data, call duration, unique identifiers, the International Mobile Subscriber Identity (IMSI) number, email addresses, IP addresses and browsed webpages.[35] However, the fact that such surveillance projects may not have access to content data might potentially create a false sense of security.[36] When Microsoft released its report on data requests by law enforcement agencies around the world in March 2013, it revealed that most of the disclosed data was metadata, while relatively very little content data was allegedly disclosed.[37]

Similarly, Google's transparency report reveals that the company disclosed large volumes of metadata to law enforcement agencies, while restricting its disclosure of content data.[38]

Such reports may potentially provide a sense of security to the public, as they reassure that the content of personal emails, for example, has not been shared with the government, but merely email addresses – which might be publicly available online anyway. However, is content data actually more “harmful” than metadata? Is metadata “harmless”? How much data does metadata actually reveal?

The Guardian recently published an article which includes an example of how individuals can be tracked through their metadata. In particular, the example explains how an individual is tracked – despite using an anonymous email account – by logging in from various hotels' public Wi-Fi and by leaving trails of metadata that include times and locations. This example illustrates how an individual can be tracked through metadata alone, even when anonymous accounts are being used.[39]
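The kind of tracking described above can be sketched as a set intersection: each login reveals only a time and a place, but whoever was present at every one of those times and places becomes a very short list. The sketch below is an illustration under stated assumptions, not the method from the Guardian article. All names, dates and presence records are invented, and it assumes the investigator has some record of who was at each location on each day.

```python
from datetime import date

# Hypothetical login metadata for one "anonymous" account: (day, location).
logins = [
    (date(2013, 1, 5), "Hotel A"),
    (date(2013, 2, 11), "Hotel B"),
    (date(2013, 3, 2), "Hotel C"),
]

# Hypothetical records of who was present at each location on each day.
presence = {
    ("Hotel A", date(2013, 1, 5)): {"alice", "bob", "carol"},
    ("Hotel B", date(2013, 2, 11)): {"alice", "dave"},
    ("Hotel C", date(2013, 3, 2)): {"alice", "bob", "erin"},
}


def candidates(logins, presence):
    """Return the people present at every (day, location) the account logged in from."""
    remaining = None
    for day, place in logins:
        present = presence.get((place, day), set())
        # The first login seeds the candidate set; later logins narrow it.
        remaining = set(present) if remaining is None else remaining & present
    return remaining or set()


print(candidates(logins, presence))  # {'alice'}
```

No message content is used at any point; three time-and-place records are enough to single out one person in this toy data.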

Wired published an article which states that metadata can potentially be more harmful than content data because “unlike our words, metadata doesn't lie”. In particular, content data shows what an individual says – which may be true or false – whereas metadata includes what an individual does. While the validity of the content within an email may potentially be debatable, it is undeniable that an individual logged into specific websites – if that is what that individual's IP address shows. Metadata, such as the browsing habits of an individual, may potentially provide a more thorough and accurate profile of an individual than that individual's email content, which is why metadata can potentially be more harmful than content data.[40]

Furthermore, voice content is hard to process and written content in an email or chat communication may not always be valid. Metadata, on the other hand, provides concrete patterns of an individual's behaviour, interests and interactions. For example, metadata can potentially map out an individual's political affiliation, interests, economic background, institution, location, habits and the people that individual interacts with. Such data can potentially be more valuable than content data, because while the validity of email content is debatable, metadata usually provides undeniable facts. Not only is metadata more accurate than content data, but it is also ideally suited to automated analysis by a computer. As most metadata includes numeric figures, it can easily be analysed by data mining software, whereas content data is more complicated.[41]
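How little effort such automated analysis takes can be shown with a minimal sketch. It assumes call records reduced to caller, callee and duration, with no content at all; the records, the labels and the function name `contact_profile` are invented for illustration.

```python
from collections import Counter

# Hypothetical call metadata: (caller, callee, duration in seconds). No content.
calls = [
    ("A", "clinic", 120),
    ("A", "party-office", 300),
    ("A", "clinic", 60),
    ("B", "A", 30),
]


def contact_profile(records, subject):
    """Count calls and total talk time between `subject` and each contact."""
    counts = Counter()
    seconds = Counter()
    for caller, callee, duration in records:
        if subject == caller:
            other = callee
        elif subject == callee:
            other = caller
        else:
            continue  # record does not involve the subject
        counts[other] += 1
        seconds[other] += duration
    return counts, seconds


counts, seconds = contact_profile(calls, "A")
print(counts.most_common(1))  # [('clinic', 2)]
```

Even this toy profile shows who the subject contacts most often and for how long, which is the sort of pattern the paragraph above describes.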

FinFisher products, such as FinFly LAN, FinFly Web and FinFly ISP, illustrate how the collection of metadata can potentially be “harmful”. In particular, FinFly LAN can be deployed in a target system in a local area network (LAN) by infecting files that are downloaded by the target, by sending fake software updates for popular software or by injecting the payload into visited websites. The fact that FinFly LAN can remotely install monitoring solutions through websites visited by the target indicates that metadata alone can be used to acquire other sensitive data.[42]

FinFly Web can deploy remote monitoring solutions on a target system through websites. Additionally, FinFly Web can be covertly installed into every website and it can install the remote monitoring system even if only the email address is known.[43] FinFly ISP can select targets based on their IP address or Radius Logon Name. Furthermore, FinFly ISP can remotely install monitoring solutions through websites visited by the target, as well as inject remote monitoring solutions as software updates.[44] In other words, FinFisher products, such as FinFly LAN, FinFly Web and FinFly ISP, can target individuals, take control of their computers and their data, and capture even encrypted data and communications with the help of metadata alone.

The example of FinFisher products illustrates that metadata can potentially be as “harmful” as content data, if acquired unlawfully and without individual consent.[45] Thus, surveillance schemes, such as PRISM and India's CMS, which capture metadata without individuals' consent can potentially pose a major threat to the right to privacy and other human rights.[46] Privacy can be defined as the claim of individuals, groups or institutions to determine when, how and to what extent information about them is communicated to others.[47] Furthermore, privacy is at the core of human rights because it protects individuals from abuse by those in power.[48] The unlawful collection of metadata exposes individuals to the potential violation of their human rights, as it is not transparent who has access to their data, whether it is being shared with third parties or for how long it is being retained.

It is not clear whether Indian law enforcement agencies are actually using FinFisher products, but the Citizen Lab did find FinFisher command and control servers in the country, which indicates that there is a high probability that such spyware is being used.[49] This probability is highly concerning not only because the specific spy products have such advanced capabilities that they are even capable of capturing encrypted data, but also because India currently lacks privacy legislation which could safeguard individuals.

Thus, it is recommended that Indian law enforcement agencies be transparent and accountable if they are using spyware which can potentially breach their citizens' human rights and that privacy legislation be enacted into law. Lastly, it is recommended that all surveillance technologies be strictly regulated with regard to the protection of human rights and that Indian authorities adopt the principles on communication surveillance formulated by the Electronic Frontier Foundation and Privacy International.[50] The above could provide a decisive first step in ensuring that India is the democracy it claims to be.

[1]. Robert Anderson (2013), “Wondering What Harmless 'Metadata' Can Actually Reveal? Using Own Data, German Politician Shows Us”, The CSIA Foundation, http://bit.ly/1cIhu7G

[2]. Gamma Group, FinFisher IT Intrusion, http://bit.ly/fnkGF3

[3]. Morgan Marquis-Boire, Bill Marczak, Claudio Guarnieri & John Scott-Railton, “You Only Click Twice: FinFisher's Global Proliferation”, The Citizen Lab, 13 March 2013, http://bit.ly/YmeB7I

[4]. Michael Lewis, “FinFisher Surveillance Spyware Spreads to Smartphones”, The Star: Business, 30 August 2012, http://bit.ly/14sF2IQ

[5]. Marcel Rosenbach, “Troublesome Trojans: Firm Sought to Install Spyware Via Faked iTunes Updates”, Der Spiegel, 22 November 2011, http://bit.ly/14sETVV

[6]. Intercept Review, Mozilla to Gamma: stop disguising your FinSpy as Firefox, 02 May 2013, http://bit.ly/131aakT

[7]. Intercept Review, LI Companies Review (3) – Gamma, 05 April 2012, http://bit.ly/Hof9CL

[8]. Morgan Marquis-Boire, Bill Marczak, Claudio Guarnieri & John Scott-Railton, For Their Eyes Only: The Commercialization of Digital Spying, Citizen Lab and Canada Centre for Global Security Studies, Munk School of Global Affairs, University of Toronto, 01 May 2013, http://bit.ly/ZVVnrb

[9]. Morgan Marquis-Boire, Bill Marczak, Claudio Guarnieri & John Scott-Railton, “You Only Click Twice: FinFisher's Global Proliferation”, The Citizen Lab, 13 March 2013, http://bit.ly/YmeB7I

[10]. Ibid.

[11]. Morgan Marquis-Boire, Bill Marczak, Claudio Guarnieri & John Scott-Railton, For Their Eyes Only: The Commercialization of Digital Spying, Citizen Lab and Canada Centre for Global Security Studies, Munk School of Global Affairs, University of Toronto, 01 May 2013, http://bit.ly/ZVVnrb

[12]. Morgan Marquis-Boire, Bill Marczak, Claudio Guarnieri & John Scott-Railton, “You Only Click Twice: FinFisher's Global Proliferation”, The Citizen Lab, 13 March 2013, http://bit.ly/YmeB7I

[13]. Gamma Group, FinFisher IT Intrusion, FinSpy: Remote Monitoring & Infection Solutions, WikiLeaks: The Spy Files, http://bit.ly/zaknq5

[14]. Gamma Group, FinFisher IT Intrusion, FinSpy Mobile: Remote Monitoring & Infection Solutions, WikiLeaks: The Spy Files, http://bit.ly/19pPObx

[15]. Gamma Group, FinFisher IT Intrusion, FinFly USB: Remote Monitoring & Infection Solutions, WikiLeaks: The Spy Files, http://bit.ly/1cJSu4h

[16]. Gamma Group, FinFisher IT Intrusion, FinFly LAN: Remote Monitoring & Infection Solutions, WikiLeaks: The Spy Files, http://bit.ly/14J70Hi

[17]. Gamma Group, FinFisher IT Intrusion, FinFly Web: Remote Monitoring & Intrusion Solutions, WikiLeaks: The Spy Files, http://bit.ly/19fn9m0

[18]. Gamma Group, FinFisher IT Intrusion, FinFly ISP: Remote Monitoring & Intrusion Solutions, WikiLeaks: The Spy Files, http://bit.ly/13gMblF

[19]. Gerry Smith, “FinSpy Software Used To Surveil Activists Around The World, Reports Says”, The Huffington Post, 13 March 2013, http://huff.to/YmmhXI

[20]. Jeremy Kirk, “FinFisher Spyware seen Targeting Victims in Vietnam, Ethiopia”, Computerworld: IDG News, 14 March 2013, http://bit.ly/14J8BwW

[21]. Reporters without Borders: For Freedom of Information (2012), The Enemies of the Internet: Special Edition: Surveillance, http://bit.ly/10FoTnq

[22]. Privacy International, FinFisher Report, http://bit.ly/QlxYL0

[23]. Morgan Marquis-Boire, Bill Marczak, Claudio Guarnieri & John Scott-Railton, “You Only Click Twice: FinFisher's Global Proliferation”, The Citizen Lab, 13 March 2013, http://bit.ly/YmeB7I

[24]. Gamma Group, FinFisher IT Intrusion, FinSpy: Remote Monitoring & Infection Solutions, WikiLeaks: The Spy Files, http://bit.ly/zaknq5

[25]. Adi Robertson, “Paranoia Thrives at the ISS World Cybersurveillance Trade Show”, The Verge, 28 December 2011, http://bit.ly/tZvFhw

[26]. Gerry Smith, “FinSpy Software Used To Surveil Activists Around The World, Reports Says”, The Huffington Post, 13 March 2013, http://huff.to/YmmhXI

[27]. BBC News, “India arrests over Facebook post criticising Mumbai shutdown”, 19 November 2012, http://bbc.in/WoSXkA

[28]. Indian Ministry of Law, Justice and Company Affairs, The Information Technology (Amendment) Act, 2008, http://bit.ly/19pOO7t

[29]. Morgan Marquis-Boire, Bill Marczak, Claudio Guarnieri & John Scott-Railton, For Their Eyes Only: The Commercialization of Digital Spying, Citizen Lab and Canada Centre for Global Security Studies, Munk School of Global Affairs, University of Toronto, 01 May 2013, http://bit.ly/ZVVnrb

[30]. Phil Muncaster, “India introduces Central Monitoring System”, The Register, 08 May 2013, http://bit.ly/ZOvxpP

[31]. Glenn Greenwald & Ewen MacAskill, “NSA PRISM program taps in to user data of Apple, Google and others”, The Guardian, 07 June 2013, http://bit.ly/1baaUGj

[32]. BBC News, “Google, Facebook and Microsoft seek data request transparency”, 12 June 2013, http://bbc.in/14UZCCm

[33]. National Information Standards Organization (2004), Understanding Metadata, NISO Press, http://bit.ly/LCSbZ

[34]. Ibid.

[35]. The Hindu, “In the dark about 'India's PRISM'”, 16 June 2013, http://bit.ly/1bJCXg3 ; Glenn Greenwald, “NSA collecting phone records of millions of Verizon customers daily”, The Guardian, 06 June 2013, http://bit.ly/16L89yo

[36]. Robert Anderson, “Wondering What Harmless 'Metadata' Can Actually Reveal? Using Own Data, German Politician Shows Us”, The CSIA Foundation, 01 July 2013, http://bit.ly/1cIhu7G

[37]. Microsoft: Corporate Citizenship, 2012 Law Enforcement Requests Report, http://bit.ly/Xs2y6D

[38]. Google, Transparency Report, http://bit.ly/14J7hKp

[39]. Guardian US Interactive Team, A Guardian Guide to your Metadata, The Guardian, 12 June 2013, http://bit.ly/ZJLkpy

[40]. Matt Blaze, “Phew, NSA is Just Collecting Metadata. (You Should Still Worry)”, Wired, 19 June 2013, http://bit.ly/1bVyTJF

[41]. Ibid.

[42]. Gamma Group, FinFisher IT Intrusion, FinFly LAN: Remote Monitoring & Infection Solutions, WikiLeaks: The Spy Files, http://bit.ly/14J70Hi

[43]. Gamma Group, FinFisher IT Intrusion, FinFly Web: Remote Monitoring & Intrusion Solutions, WikiLeaks: The Spy Files, http://bit.ly/19fn9m0

[44]. Gamma Group, FinFisher IT Intrusion, FinFly ISP: Remote Monitoring & Intrusion Solutions, WikiLeaks: The Spy Files, http://bit.ly/13gMblF

[45]. Robert Anderson, “Wondering What Harmless 'Metadata' Can Actually Reveal? Using Own Data, German Politician Shows Us”, The CSIA Foundation, 01 July 2013, http://bit.ly/1cIhu7G

[46]. Shalini Singh, “India's surveillance project may be as lethal as PRISM”, The Hindu, 21 June 2013, http://bit.ly/15oa05N

[47]. Cyberspace Law and Policy Centre, Privacy, http://bit.ly/14J5u7W

[48]. Bruce Schneier, “Privacy and Power”, Schneier on Security, 11 March 2008, http://bit.ly/i2I6Ez

[49]. Morgan Marquis-Boire, Bill Marczak, Claudio Guarnieri & John Scott-Railton, For Their Eyes Only: The Commercialization of Digital Spying, Citizen Lab and Canada Centre for Global Security Studies, Munk School of Global Affairs, University of Toronto, 01 May 2013, http://bit.ly/ZVVnrb

[50]. Elonnai Hickok, “Draft International Principles on Communications Surveillance and Human Rights”, The Centre for Internet and Society, 16 January 2013, http://bit.ly/XCsk9b

Freedom from Monitoring: India Inc Should Push For Privacy Laws

This article by Sunil Abraham was published in Forbes India Magazine on August 21, 2013.

I think I understand why the average Indian IT entrepreneur or enterprise does not have a position on blanket surveillance. This is because the average Indian IT enterprise’s business model depends on labour arbitrage, not intellectual property. And therefore they have no worries about proprietary code or unfiled patent applications being stolen by competitors via rogue government officials within projects such as NATGRID, UID and, now, the CMS.

A sub-section of industry, especially the technology industry, will always root for blanket surveillance measures. The surveillance industry has many different players, ranging from those selling biometric and CCTV hardware to those providing solutions for big data analytics and legal interception systems. There are also more controversial players who provide spyware, especially those in the market for zero-day exploits. The cheerleaders for the surveillance industry are techno-determinists who believe you can solve any problem by throwing enough of the latest and most expensive technology at it.

What is surprising, though, is that other indigenous or foreign enterprises that depend on secrecy and confidentiality—in sectors such as banking, finance, health, law, ecommerce, media, consulting and communications—also don’t seem to have a public position on the growing surveillance ambitions of ‘democracies’ such as India and the United States of America. (Perhaps the only exceptions are a few multinational internet and software companies that have made some show of resistance and disagreement with the blanket surveillance paradigm.)

Is it because these businesses are patriotic? Do they believe that secrecy, confidentiality and, most importantly, privacy, must be sacrificed for national security? If that were true then it would not be a particularly wise thing to do, as privacy is the precondition for security. Ann Cavoukian, privacy commissioner of Ontario, calls it a false dichotomy. Bruce Schneier, security technologist and writer, calls it a false zero sum game; he goes on to say, “There is no security without privacy. And liberty requires both security and privacy.”

The reason why the secret recipe of Coca-Cola is still secret after over 120 years is the same as the reason why a captured soldier cannot spill the beans on the overall war strategy. Corporations, like militaries, have layers and layers of privacy and secrecy. The ‘need to know’ principle resists all centralising tendencies, such as blanket surveillance. It’s important to note that targeted surveillance to identify a traitor or spy within the military, or someone engaged in espionage within a corporation, is pretty much essential. However, any more surveillance than absolutely necessary actually undermines the security objective. To summarise, privacy is a pre-condition to the security of the individual, the enterprise, the military and the nation state.

Most people complaining online about projects like the Central Monitoring System seem to think that India has no privacy laws. This is completely untrue: We have around 50 different laws, rules and regulations that aim to uphold privacy and confidentiality in various domains. Unfortunately, most of those policies are very dated and do not sufficiently take into account the challenges of contemporary information societies. These policy documents need to be updated and harmonised through the enactment of a new horizontal privacy law. A small minority will say that Section 43(A) of the Information Technology Act is India's privacy law. That is not completely untrue, but it is a gross exaggeration. Section 43(A) is really only a data security provision and, at that, it does not even comprehensively address data protection, which is only a sub-set of the overall privacy regulation required in a nation.

What would an ideal privacy law for India look like? For one, it would protect the rights of all persons, regardless of whether they are citizens or residents. Two, it would define privacy principles. Three, it would establish the office of an independent and autonomous privacy commissioner, who would be sufficiently empowered to investigate and take action against both government and private entities. Four, it would define civil and criminal offences, remedies and penalties. And five, it would have an overriding effect on previous legislation that does not comply with all the privacy principles.