Blog

GSMA Partners Meeting

GSMA_Meeting_9.04.2014.pdf

—

PDF document,

105 kB (107973 bytes)

Identity and Privacy

Identity and Privacy.zip

—

ZIP archive,

3969 kB (4064327 bytes)

National Security and Privacy

National Security and Privacy.zip

—

ZIP archive,

1701 kB (1742258 bytes)

Transparency and Privacy

Transperancy and Privacy.zip

—

ZIP archive,

1225 kB (1255316 bytes)

Networks: What You Don’t See is What You (for)Get

The blog entry was originally published in DML Central on April 17, 2014 and mirrored in Hybrid Publishing Lab on May 13, 2014.

Are we an ensemble of actors? A cluster of friends? A conference of scholars? A committee of decision makers? An array of perspectives? A group of associates? A play-list of voices? I do not pose these questions rhetorically, though I do enjoy rhetoric. I want to look at this inability to name collectives and the confusions and ambiguity it produces as central to our conversations around digital thinking. In particular, I want to look at the notion of the network. Because, I am sure, that if we were to go for the most neutralised digital term to characterise this collection that we all weave in and out of, it would have to be the network. We are a network.[1]

But, what does it mean to say that we are a network? The network is a very strange thing. Especially within the realms of the Internet, which, in itself, purports to be a giant network, the network is self-explanatory, self-referential and completely denuded of meaning. A network is benign, and like the digital, that foregrounds the network aesthetic, the network is inscrutable. You cannot really touch a network or name it. You cannot shape it or define it. You can produce momentary snapshots of it, but you can never contain it or limit it. The network cannot be held or materially felt.

And yet, the network touches us. We live within networked societies. We engage in networking – network as a verb. We are a network – network as a noun. We belong to networks – network as a collective. In all these poetic mechanisms of network, there is perhaps the core of what we want to talk about today – the tension between the local and the global and the way in which we will understand the Internet and then the frameworks of governance and policy that surround it.

Let me begin with a genuine question. What predates the network? Because the network is a very new word. The first etymological trace of the network as a verb is in 1887, when it was used within broadcast and communications models to talk about an outreach, as in ‘to cover with a network.’ The idea of a network as a noun is older: in the 1550s, ‘net-like arrangements of threads, wires, etc.’ were first identified as a network. In the second half of the industrial 19th century, the term network was used for understanding an extended, complex, interlocking system. The idea of network as a set of connected people emerged in the latter half of the 20th century. I am pointing at these references to remind us that the ubiquitous presence of the network, as a practice, as a collective, and as a metaphor that seeks to explain the rest of the world around us, is a relatively new phenomenon. And we need to be aware of the fact that the network, especially as it is understood in computing and digital technologies, is a particular model through which objects, individuals and the transactions between them are imagined.

For anybody who looks at the network itself – especially the digital network that we have accepted as the basis on which everything from social relationships on Facebook to global financial arcs are defined – we know that the network is in a state of crisis.

Networks of crises: The Bangalore North East Exodus

Let me illustrate the multiple ways in which the relationship between networks and crisis has been imagined through a particular story. In August 2012, I woke up one morning to realise that I was living in a city of crisis. Bangalore, which is one of my homes, where the largest preoccupations to date have been about bad roads, stray dogs, and occasionally, the lack of a nightlife, was suddenly a space that people wanted to flee and occupy simultaneously.

Through the technology mediated gossip mill that produced rumours faster than the speed of a digital click, imagination of terror, danger, and material harm found currency. The city suddenly witnessed thousands of people running away from it, heading back to their imagined homelands. It was called the North East exodus, where, following an ethnic-religious clash between two traditionally hostile communities in Assam, there were rumours that the large North East Indian community in Bangalore was going to be attacked by certain Muslim factions at the end of Ramadan.

The media spectacle of the exodus around questions of religion, ethnicity, regionalism and belonging only emphasised the fact that there is a new way of connectedness that we live in: the network society that can no longer be controlled, contained or corrected by official authorities and their voices. Despite a barrage of messages from law enforcement and security authorities, on email, on large screens on the roads, and on our cell phones, there was a growing anxiety and a spiralling information explosion that was producing an imaginary situation of precariousness and bodily harm. For me, this event was one of the first to signal how to imagine the network society in a crisis, especially when it came to Bangalore, which is supposed to represent the Silicon dreams of an India that is shining brightly. While there is much to be unpacked about the political motivations and the ecologies of fear that our migrant lives in global cities are enshrined in, I want to specifically focus on what the emergence of this network society means.

There is an imagination, especially in cities like Bangalore, of digital technologies as necessarily plugging in larger networks of global information consumption. The idea that technology plugs us into the transnational circuits is so huge that it only tunes us toward an idea of connectedness that is always outward looking, expanding the scope of nation, community and body.

However, the ways in which information was circulating during this phenomenon reminds us that digital networks are also embedded in local practices of living and survival. Most of the time, these networks are so natural and such an integral part of our crucial mechanics of urban life that they appear as habits, without any presence or visibility. In times of crises – perceived or otherwise – these networks make themselves visible, to show that they are also inward looking. But in this production of hyper-visible spectacles, the network works incessantly to make itself invisible.

Which is why, in the case of the North East exodus, the steps leading to the resolution of the crisis, constructed and fuelled by networks, are interesting. As government and civil society efforts to control the rumours and panic reached an all-time high and people continued to flee the city, the government eventually went in to regulate the technology itself. There were expert panel discussions about whether digital technologies were to be blamed for this rumour mill. There was a ban on mass-messaging and a cap on the number of messages that each mobile phone subscriber could send in a day. The Information and Broadcast Ministry, along with the Information Technologies cell, started monitoring and punishing people for false and inflammatory information.

Network as Crisis: The unexpected visibility of a network

What, then, was the nature of the crisis in this situation? It is a question worth exploring. We would imagine that this crisis was a crisis about the nationwide building of mega-cities filled with immigrant bodies that are not allowed their differences because they all have to be cosmopolitan and mobile bodies. The crisis could have been read as one of neo-liberal flatness in imagining the nation and its fragments, that hides the inherent and historical sites of conflict under the seductive rhetoric of economic development. And yet, when we look at the operationalization of the resolutions, it looked as if the crisis was the appearance and the visibility of the hitherto hidden local networks of information and communication.

In her analysis of networks, Brown University’s Wendy Chun posits that this is why networks are an opaque metaphor. If the function of metaphor is to explain, through familiarity, objects which are new to us, the network as an explanatory paradigm presents a new conundrum. While the network presumes an exteriority that it seeks to present, while the network allows for a subjective interiority of the actor and its decisions, while the network grants visibility and form to the everyday logic of organisation, what the network actually seeks to explain is itself. Or, in less evocative terms, the network is not only the framework through which we analyse, but it is also the object of analysis. Once the network has been deployed as a paradigm through which to understand a crisis, once the network has made itself visible, all our efforts are directed at explaining and strengthening it and, almost like digital mothers, comforting the network back into its peaceful existence as infrastructure. We develop better tools to regulate the network. We define new parameters to mine the data more effectively. We develop policies to govern and govern through the network with greater transparency and ease.

Thus, in the case of the North East exodus, instead of addressing the larger issues of conservative parochialism, an increasing backlash by right-wing governments and a growing hostility that emerges from these cities that nobody possesses and nobody belongs to, the efforts were directed at blaming technology as the site where the problem is located and the network as the object that needs to be controlled. What emerged was a series of corrective mechanisms and a set of redundant regulations that capped the number of text messages that people were able to send per day or policed the Internet for the spreading of rumours. The entire focus was on information management, as if the mass exodus of people from the NE Indian states and the sense of fragility that the city had been immersed in were all due to the pervasive and ubiquitous information gadgets and their ability to proliferate in p2p (peer-to-peer) environments outside of the government’s control. This lack of exteriority to the network is something that very few critical voices have pointed out.

Duncan Watts, the father of network computing, working through the logic of nodes, traffic and edges, has suggested there is a great problem in the ways in which we understand the process of network making. I am paraphrasing his complex mathematical text that explains the production of physical networks – what he calls the small worlds – and pointing out his strong critique about how the social scientists engage with networks. In the social sciences’ imagination of networks, there is a messy exteriority – fuzzy, complex and often not reducible to patterns or basic principles. The network is a distilling of the messy exteriority, a representation of the complex interplay between different objects and actors, and a visual mapping of things as they are. Which is to say, we imagine there is a material reality and the network is a tool by which this reality, or at least parts of this reality, are mapped and represented to us in patterns which can help us understand the true nature of this reality.

Drawing from practices of network modelling and building, Watts proved that we have the equation wrong. The network is not a representation of reality but the ontology of reality. The network is not about trying to make sense of an exteriority. Instead, the network is an abstract and ideological map that constructs the reality in a particular way. In other words, the network precedes the real, and because of its ability to produce objective, empiricist and reductive principles (constantly filtering out that which is not important to the logic or the logistics of the network design), it then gives us a reality that is produced through the network principles. To make it clear, the network representation is not the derivative of the real but the blueprint of the real. And the real as we access it, through these networked tools, is not the raw and messy real but one that is constructed and shaped by the network in those ways. The network, then, needs to be understood, examined and critiqued, not as something that represents the natural, but as something that shapes our understanding of the natural itself.

In the case of the Bangalore North East Exodus, the network and its visibility created a problem for us – and the problem was, that the network, which is supposed to be infrastructure, and hence, by nature invisible, had suddenly become visible. We needed to make sure that it was shamed, blamed, named and tamed so that we can go back to our everyday practices of regulation, governance and policy.

The Intersectional Network

What I want to emphasise, then, is that this binary of local versus the global, or local working in tandem with global, or the quaintly hybridised glocal are not very generative in thinking of policy and politics around the Internet. What we need is to recognise what gets hidden in this debate. What becomes visible when it is not supposed to? What remains invisible beyond all our efforts? And how do we develop a framework that actually moves beyond these binary modes of thinking, where the resolution is either to collapse them or to pretend that they do not exist in the first place? Working with frameworks like the network makes us aware of the ways in which these ideas of the global and the local are constructed and continue to remain the focus of our conversations, making invisible the real questions at hand.

Hence, we need to think of networks, not as spaces of intersection, but as in need of intersections. The networks, because of their predatory, expanding nature, and the constant interaction with the edges, often appear as dynamic and inclusive. We need to now think of the networks as in need of intersections, or of intersectional networks. Developing intersections of temporality, of geography and of contexts is great. But we need to move one step beyond, and look at the couplings of aspiration, inspiration, autonomy, control, desire, belonging and precariousness that often mark the new digital subjects. And our policies, politics and regulations will have to be tailored to not only stop the person abandoning her life and running to a place of safety, not only stop the rumours within the information and communication networks, not only create stop-gap measures of curbing the flows of gossip, but to actually account for the human conditions of life and living.

[1]. This post has grown from conversations across three different locations. The first draft of this talk was presented at the Habits of Living Conference, organised by the Centre for Internet & Society and Brown University, in Bangalore. A version of this talk found great inputs from the University of California Humanities Research Institute in Irvine, where I found great ways of sharpening the focus. The responses at the Milton Wolf Seminar at the America Austria Foundation, Austria, to this story, helped in making it more concrete to the challenges that the “network” throws to our digital modes of thinking. I am very glad to be able to put the talk into writing this time, and look forward to more responses.

Filtering content on the internet

The op-ed was published in the Hindu on May 2, 2014.

On May 5, the Supreme Court will hear Kamlesh Vaswani’s infamous anti-pornography petition again. The petition makes some rather outrageous claims. Watching pornography ‘puts the country’s security in danger’ and it is ‘worse than Hitler, worse than AIDS, cancer or any other epidemic,’ it says. This petition has been pending before the Court since February 2013, and seeks a new law that will ensure that pornography is exhaustively curbed.

Disintegrating into binaries

The petition assumes that pornography causes violence against women and children. The trouble with such a claim is that the debate disintegrates into binaries; the two positions being that pornography causes violence or that it does not. The fact remains that the causal link between violence against women and pornography is yet to be proven convincingly and remains the subject of much debate. Additionally, since the term pornography refers to a whole range of explicit content, including homosexual adult pornography, it cannot be argued that all pornography objectifies women or glamorises violent treatment of them.

Allowing even for the petitioner’s legitimate concern about violence against women, it is interesting to note that of all the remedies available, he seeks the one which is authoritarian but may not have any impact at all. Mr. Vaswani could have, instead, encouraged the state to do more toward its international obligations under the Convention on the Elimination of All Forms of Discrimination against Women (CEDAW). CEDAW’s General Recommendation No. 19 addresses violence against women and recommends steps to reduce it. These include encouraging research on the extent, causes and effects of violence, and adopting preventive measures, such as public information and education programmes, to change attitudes concerning the roles and status of men and women.

Child pornography

Although different countries disagree about the necessity of banning adult pornography, there is general international consensus about the need to remove child pornography from the Internet. Children may be harmed in the making of pornography, and would at the very minimum have their privacy violated to an unacceptable degree. Being minors, they are not in a position to consent to the act. Each act of circulation and viewing adds to the harmful nature of child pornography. Therefore, an argument can certainly be made for the comprehensive removal of this kind of content.

Indian policy makers have been alive to this issue. The Information Technology Act (IT Act) contains a separate provision for material depicting children explicitly or obscenely, stating that those who circulate such content will be penalised. The IT Act also criminalises watching child pornography (whereas watching regular pornography is not a crime in India).

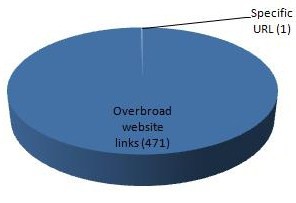

Intermediaries are obligated to take down child pornography once they have been made aware that they are hosting it. Organisations or individuals can proactively identify and report child pornography online. Other countries have tried, with reasonable success, systems using hotlines, verification of reports and co-operation of internet service providers to take down child pornography. However, these systems have also sometimes resulted in the removal of other legitimate content.

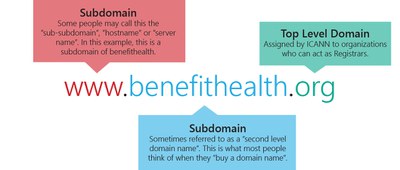

Filtering speech on the Internet

Child pornography can be blocked or removed using the IT Act, which permits the government to send lists of URLs of illegal content to internet service providers, requiring them to remove this content. Even private parties can send notices to online intermediaries informing them of illegal content and thereby making them legally accountable for such content if they do not remove it. However, none of this will be able to ensure the disappearance of child pornography from the Internet in India.

Technological solutions like filtering software that screens or blocks access to online content, whether at the state, service provider or user level, can at best make child pornography inaccessible to most people. People who are more skilled than amateurs will be able to circumvent technological barriers since these are barriers only until better technology enables circumvention.

Additionally, attempts at technological filtering usually also affect speech that is not targeted by the filtering mechanism. Therefore, any system for filtering or blocking content from the Internet needs to build in safeguards to ensure that processes designed to remove child pornography do not end up being used to remove political speech or other speech that is constitutionally protected.

In the Vaswani case, the government has correctly explained to the Supreme Court that any greater attempt to monitor pornography is not technologically feasible. It has pointed out that human monitoring of content will delay transmission of data substantially, will slow down the Internet, and will also be ineffective, since the illegal content can easily be moved to other servers in other countries.

Making intermediaries liable for the content they host will undo the safe harbour protection granted to them by the IT Act. Without it, intermediaries like Facebook will actually have to monitor all the content they host, and the resources required for such monitoring will reduce the content that makes its way online. This would seriously impact the extensiveness and diversity of content available on the Internet in India. Additionally, when demands are made for the removal of legitimate content, profit-making internet companies will be disinclined to risk litigation much in the same way as Penguin was reluctant to defend Wendy Doniger’s book.

If the Supreme Court makes the mistake of creating a positive obligation to monitor Internet content for intermediaries, it will effectively kill the Internet in India.

(Chinmayi Arun is research director, Centre for Communication Governance, National Law University, Delhi, and fellow, Centre for Internet and Society, Bangalore)

Round-table on User Safety Internet

Agenda_roundtable discussion-Bengaluru (1).pdf

—

PDF document,

258 kB (264900 bytes)

European Court of Justice rules Internet Search Engine Operator responsible for Processing Personal Data Published by Third Parties

The ruling is expected to have considerable impact on reputation and privacy related takedown requests, since under the decision data subjects may approach the operator directly to seek removal of links to web pages containing personal data. Currently, users must prove whether data needs to be kept online; the new rules reverse the burden of proof, placing the obligation for content regulation on companies rather than users.

A win for privacy?

The ECJ ruling addresses Mario Costeja González's complaint, filed in 2010 against Google Spain and Google Inc., requesting that personal data relating to him appearing in search results be protected and that data which was no longer relevant be removed. Referring to Directive 95/46/EC of the European Parliament, the court said that Google and other search engine operators should be considered 'controllers' of personal data. Following the decision, Google will be required to consider takedown requests of personal data, regardless of the fact that processing of such data is carried out without distinction in respect of information other than the personal data.

The decision, which cannot be appealed, raises important questions about how this ruling will be applied in practice and about its impact on the information available online in countries outside the European Union. The decree forces search engine operators such as Google, Yahoo and Microsoft's Bing to make judgement calls on the fairness of the information published through their services, which reach over 500 million people across the twenty-eight-nation bloc of the EU.

The ECJ rules that search engines, 'as a general rule,' should place the right to privacy above the public's right to information. Under the verdict, links to irrelevant and out-of-date data need to be erased upon request, placing search engines in the role of controllers of information, beyond the role of being an arbitrator that linked to data that already existed in the public domain. The verdict is directed at highlighting the power of search engines to retrieve controversial information while limiting their capacity to do so in the future.

The ruling calls for maintaining a balance between the legitimate interest of internet users in accessing personal information and the data subject’s fundamental rights, but does not directly address either issue. The court also recognised that the data subject's rights override the interest of internet users, with exceptions pertaining to the nature of the information, its sensitivity for the data subject's private life and the role of the data subject in public life. Acknowledging that data belongs to the individual and is not the right of the company, European Commissioner Viviane Reding hailed the verdict as "a clear victory for the protection of personal data of Europeans".

The Court stated that if data is deemed irrelevant at the time of the case, even if it has been lawfully processed initially, it must be removed, and that the data subject has the right to approach the operator directly for the removal of such content. The liability issue is further complicated by the fact that search engines such as Google do not publish the content; rather, they point to information that already exists in the public domain. This raises questions about the degree of their liability for third party content displayed on their services.

The ECJ ruling is based on the case originally filed against Google Spain, and it is important to note that González argued that a search for his name returned links to two pages originally published in 1998 on the website of the Spanish newspaper La Vanguardia. The Spanish Data Protection Agency did not require La Vanguardia to take down the pages; however, it did order Google to remove links to them. Google appealed this decision, following which the National High Court of Spain sought advice from the European court. The definition of Google as the controller of information raises important questions related to the distinction between the liability of publishers and the liability of processors of information such as search engines.

The 'right to be forgotten'

The decision also brings to the fore the ongoing debate and fragmented opinions within the EU on the right of the individual to be forgotten. The 'right to be forgotten' has evolved from the European Commission's wide-ranging plans for an overhaul of the commission's 1995 Data Protection Directive. The plans for the law included allowing people to request removal of personal data with an obligation of compliance for service providers, unless there were 'legitimate' reasons to do otherwise. Technology firms, rallying around issues of freedom of expression and censorship, have expressed concerns about the reach of the bill. Privacy-rights activists and European officials have upheld the notion of the right to be forgotten, highlighting the right of the individual to protect their honour and reputation.

These issues have been controversial amidst EU member states, with the UK's Ministry of Justice claiming the law 'raises unrealistic and unfair expectations' and seeking to opt out of the privacy laws. The opinion of the Advocate General of the European Court, Niilo Jääskinen, that the individual's right to seek removal of content should not be upheld if the information was published legally, contradicts the ECJ's verdict. The European Court of Justice's move is surprising for many and, as Richard Cumbley, information-management and data protection partner at the law firm Linklaters, puts it, “Given that the E.U. has spent two years debating this right as part of the reform of E.U. privacy legislation, it is ironic that the E.C.J. has found it already exists in such a striking manner.”

The economic implications of enforcing a liability regime where search engine operators censor legal content in their results aside, the decision might also have a chilling effect on freedom of expression and access to information. Google called the decision “a disappointing ruling for search engines and online publishers in general,” and said that the company would take time to analyze the implications. While the implications of the decision are yet to be determined, it is important to bear in mind that while decisions like these are public, the refinements that Google and other search engines will have to make to their technology and the judgement calls on the fairness of the information available online are not public.

The ECJ press release is available here and the actual judgement is available here.

Net Neutrality, Free Speech and the Indian Constitution – III: Conceptions of Free Speech and Democracy

In the modern State, effective exercise of free speech rights is increasingly dependent upon an infrastructure that includes newspapers, television and the internet. Access to a significant part of this infrastructure is determined by money. Consequently, if what we value about free speech is the ability to communicate one’s message to a non-trivial audience, financial resources influence both who can speak and, consequently, what is spoken. The nature of the public discourse – what information and what ideas circulate in the public sphere – is contingent upon a distribution of resources that is arguably unjust and certainly unequal.

There are two opposing theories about how we should understand the right to free speech in this context. Call the first one of these the libertarian conception of free speech. The libertarian conception takes as given the existing distribution of income and resources, and consequently, the unequal speaking power that that engenders. It prohibits any intervention designed to remedy the situation. The most famous summary of this vision was provided by the American Supreme Court, when it first struck down campaign finance regulations, in Buckley v. Valeo: “the concept that government may restrict the speech of some [in] order to enhance the relative voice of others is wholly foreign to the First Amendment.” This theory is part of the broader libertarian worldview, which would restrict government’s role in a polity to enforcing property and criminal law, and views any government-imposed restriction on what people can do within the existing structure of these laws as presumptively wrong.

We can tentatively label the second theory as the social-democratic theory of free speech. This theory focuses not so much on the individual speaker’s right not to be restricted in using their resources to speak as much as they want, but upon the collective interest in maintaining a public discourse that is open, inclusive and home to a multiplicity of diverse and antagonistic ideas and viewpoints. Often, in order to achieve this goal, governments regulate access to the infrastructure of speech so as to ensure that participation is not entirely skewed by inequality in resources. When this is done, it is often justified in the name of democracy: a functioning democracy, it is argued, requires a thriving public sphere that is not closed off to some or most persons.

Surprisingly, one of the most powerful judicial statements for this vision also comes from the United States. In Red Lion v. FCC, while upholding the “fairness doctrine”, which required broadcasting stations to cover “both sides” of a political issue, and provide a right of reply in case of personal attacks, the Supreme Court noted:

“[Free speech requires] preserv[ing] an uninhibited marketplace of ideas in which truth will ultimately prevail, rather than to countenance monopolization of that market, whether it be by the Government itself or a private licensee… it is the right of the public to receive suitable access to social, political, esthetic, moral, and other ideas and experiences which is crucial here.”

What of India? In the early days of the Supreme Court, it adopted something akin to the libertarian theory of free speech. In Sakal Papers v. Union of India, for example, it struck down certain newspaper regulations that the government was defending on grounds of opening up the market and allowing smaller players to compete, holding that Article 19(1)(a) – in language similar to what Buckley v. Valeo would hold, more than fifteen years later – did not permit the government to infringe the free speech rights of some in order to allow others to speak. The Court continued with this approach in its next major newspaper regulation case, Bennett Coleman v. Union of India, but this time, it had to contend with a strong dissent from Justice Mathew. After noting that “it is no use having a right to express your idea, unless you have got a medium for expressing it”, Justice Mathew went on to hold:

“What is, therefore, required is an interpretation of Article 19(1)(a) which focuses on the idea that restraining the hand of the government is quite useless in assuring free speech, if a restraint on access is effectively secured by private groups. A Constitutional prohibition against governmental restriction on the expression is effective only if the Constitution ensures an adequate opportunity for discussion… Any scheme of distribution of newsprint which would make the freedom of speech a reality by making it possible the dissemination of ideas as news with as many different facets and colours as possible would not violate the fundamental right of the freedom of speech of the petitioners. In other words, a scheme for distribution of a commodity like newsprint which will subserve the purpose of free flow of ideas to the market from as many different sources as possible would be a step to advance and enrich that freedom. If the scheme of distribution is calculated to prevent even an oligopoly ruling the market and thus check the tendency to monopoly in the market, that will not be open to any objection on the ground that the scheme involves a regulation of the press which would amount to an abridgment of the freedom of speech.”

In Justice Mathew’s view, therefore, freedom of speech is not only the speaker’s right (the libertarian view), but a complex balancing act that also weighs the listeners’ right to be exposed to a wide range of material and the collective, societal right to have an open and inclusive public discourse, which can only be achieved by preventing the monopolization of the instruments, infrastructure and access-points of speech.

Over the years, the Court has moved away from the majority opinions in Sakal Papers and Bennett Coleman, and steadily come around to Justice Mathew’s view. This is particularly evident from two cases in the 1990s: in Union of India v. The Motion Picture Association, the Court upheld various provisions of the Cinematograph Act that imposed certain forms of compelled speech on moviemakers while exhibiting their movies, on the ground that “to earmark a small portion of time of this entertainment medium for the purpose of showing scientific, educational or documentary films, or for showing news films has to be looked at in this context of promoting dissemination of ideas, information and knowledge to the masses so that there may be an informed debate and decision making on public issues. Clearly, the impugned provisions are designed to further free speech and expression and not to curtail it.”

LIC v. Manubhai D. Shah is even more on point. In that case, the Court upheld a right of reply in an in-house magazine, “because fairness demanded that both view points were placed before the readers, however limited be their number, to enable them to draw their own conclusions and unreasonable because there was no logic or proper justification for refusing publication… the respondent’s fundamental right of speech and expression clearly entitled him to insist that his views on the subject should reach those who read the magazine so that they have a complete picture before them and not a one sided or distorted one…” This goes even further than Justice Mathew’s dissent in Bennett Coleman, and the opinion of the Court in Motion Picture Association, in holding that not merely is it permitted to structure the public sphere in an equal and inclusive manner, but that it is a requirement of Article 19(1)(a).

We can now bring the threads of the separate arguments in the three posts together. In the first post, we found that public law and constitutional obligations can be imposed upon private parties when they discharge public functions. In the second post, it was argued that the internet has replaced the park, the street and the public square as the quintessential forum for the circulation of speech. ISPs, in their role as gatekeepers, now play the role that government once did in controlling and keeping open these avenues of expression. Consequently, they can be subjected to public law free speech obligations. And lastly, we discussed how the constitutional conception of free speech in India, that the Court has gradually evolved over many years, is a social-democratic one, that requires the keeping open of a free and inclusive public sphere. And if there is one thing that fast-lanes over the internet threaten, it is certainly a free and inclusive (digital) public sphere. A combination of these arguments provides us with an arguable case for imposing obligations of net neutrality upon ISPs, even in the absence of statutory or regulatory obligations, grounded within the constitutional guarantee of the freedom of speech and expression.

For the previous post, please see: http://cis-india.org/internet-governance/blog/-neutrality-free-speech-and-the-indian-constitution-part-2.

_____________________________________________________________________________________________________

Gautam Bhatia — @gautambhatia88 on Twitter — is a graduate of the National Law School of India University (2011), and presently an LLM student at the Yale Law School. He blogs about the Indian Constitution at http://indconlawphil.wordpress.com. Here at CIS, he will be blogging on issues of online freedom of speech and expression.

Global Governance Reform Initiative

Conference Program_GGRI_ FINAL.pdf

—

PDF document,

984 kB (1007636 bytes)

Net Freedom Campaign Loses its Way

The article was published in the Hindu Businessline on May 10, 2014.

One word to describe NetMundial: Disappointing! Why? Because despite the promise, human rights on the Internet are still insufficiently protected. Snowden’s revelations starting last June threw the global Internet governance processes into crisis.

Things came to a head in October, when Brazil’s President Dilma Rousseff, horrified to learn that she was under NSA surveillance for economic reasons, called for the organisation of a global conference called NetMundial to accelerate Internet governance reform.

The NetMundial was held in São Paulo on April 23-24 this year. The result was a statement described as “the non-binding outcome of a bottom-up, open, and participatory process involving … governments, private sector, civil society, technical community, and academia from around the world.” In other words — it is international soft law with no enforcement mechanisms.

The statement emerges from “broad consensus”, meaning governments such as India, Cuba and Russia and civil society representatives expressed deep dissatisfaction at the closing plenary. Unlike an international binding law, only time will tell whether each member of the different stakeholder groups will regulate itself.

Again, not easy, because the outcome document does not specifically prescribe what each stakeholder can or cannot do — it only says what internet governance (IG) should or should not be. And finally, there’s no global consensus yet on the scope of IG. The substantive consensus was disappointing in four important ways:

Mass surveillance: Civil society was hoping that the statement would make mass surveillance illegal. After all, global violation of the right to privacy by the US was the raison d'être of the conference.

Instead, the statement legitimised “mass surveillance, interception and collection” as long as it was done in compliance with international human rights law. This was clearly the most disastrous outcome.

Access to knowledge: The conference was not supposed to expand intellectual property rights (IPR) or enforcement of these rights. After all, a multilateral forum, WIPO, was meant to address these concerns. But in the days before the conference the rights-holders lobby went into overdrive and civil society was caught unprepared.

The end result: “freedom of information and access to information” (the right to information, in India) was qualified “with rights of authors and creators”. Right to information laws across the world, including in India, contain almost a dozen exemptions, including IPR. The only thing to be grateful for is that this limitation did not find its way into the language for freedom of expression.

Intermediary liability: The language that limits liability for intermediaries basically provides for a private censorship regime without judicial oversight, and without explicit language protecting the rights to freedom of expression and privacy. Even though the private sector chants Hillary Clinton's Internet freedom mantra, they only care for their own bottom lines.

Net neutrality: Even though there was little global consensus, some optimistic sections of civil society were hoping that domestic best practice on network neutrality in Brazil’s Internet Bill of Rights (also known as Marco Civil, which was signed into law during the inaugural ceremony of NetMundial) would make it to the statement. Unfortunately, this did not happen.

For almost a decade since the debate between the multi-stakeholder and multilateral model started, the multi-stakeholder model had produced absolutely nothing outside ICANN (Internet Corporation for Assigned Names and Numbers, a non-profit body), its technical fraternity and the standard-setting bodies.

The multi-stakeholder model is governance with the participation (and consent — depending on who you ask) of those stakeholders who are governed. In contrast, in the multilateral system, participation is limited to nation-states.

Civil society divisions

The inability of multi-stakeholderism to deliver also resulted in the fragmentation of global civil society regulars at Internet Governance Forums.

But in the run-up to NetMundial more divisions began to appear. If we ignore nuances, we could divide them into three groups. One, the ‘outsiders’, who are best exemplified by Jérémie Zimmermann of La Quadrature du Net. Jérémie ran an online campaign, organised a protest during the conference and did everything he could to prevent NetMundial from being sanctified by civil society consensus.

Two, the ‘process geeks’: for these individuals and organisations, process was more important than principles. Most of them were as deeply invested in the multi-stakeholder model as ICANN and the US government, and some have been riding the ICANN gravy train for years.

Even worse, some were suspected of being astroturfers bootstrapped by the private sector and the technical community. None of them were willing to rock the boat. For the ‘process geeks’, seeing politicians and bureaucrats queue up like civil society to speak at the mike was the crowning achievement.

Three, the ‘principles geeks’ perhaps best exemplified by the Just Net Coalition who privileged principles over process. Divisions were also beginning to sharpen within the private sector. For example, Neville Roy Singham, CEO of Thoughtworks, agreed more with civil society than he did with other members of the private sector in his interventions.

In short, the ‘outsiders’ couldn't care less about the outcome and will do everything to discredit it, the ‘process geeks’ stood in ovation when the outcome document was read at the closing plenary and the ‘principles geeks’ returned devastated.

For the multi-stakeholder model to survive it must advance democratic values, not undermine them.

This will only happen if there is greater transparency and accountability. Individuals, organisations and consortia that participate in Internet governance processes need to disclose lists of donors including those that sponsor travel to these meetings.

Civil Society - Privacy Bill

privacy bill related story.pdf

—

PDF document,

999 kB (1023754 bytes)

FOEX Live: May 26-27, 2014

Media reports across India are focusing on the new government and its Cabinet portfolios. In the midst of the celebration of and grief over the regime change, we found many reports indicating that civil society is wary of the new government’s stance towards Internet freedoms.

Andhra Pradesh:

Andhra MLA and All India Majlis-e-Ittihad ul-Muslimin member Akbaruddin Owaisi has been summoned to appear before a Kurla magistrate’s court on grounds of alleged hate speech and intention to harm the harmony between Hinduism and Islam. Complainant Gulam Hussain Khan saw an online video of a December 2012 speech by Owaisi and filed a private complaint with the court. “I am prima facie satisfied that it disclosed an offence punishable under Section(s) 153A and 295A of the Indian Penal Code,” the Metropolitan Magistrate said.

Goa:

A Goa Sessions Judge has dismissed shipbuilding diploma engineer Devu Chodankar’s application for anticipatory bail. On the basis of an April 26 complaint by CII state president Atul Pai Kane, Goa cybercrime cell registered a case against Chodankar for allegedly posting matter on a Facebook group with the intention of promoting enmity between religious groups in view of the 2014 general elections. The Judge noted, inter alia, that Sections 153A and 295A of the Indian Penal Code were attracted, and that it is necessary to find out whether, on the Internet, “there is any other material which could be considered as offensive or could create hatred among different classes of citizens of India”.

Karnataka:

Syed Waqas, an MBA student from Bhatkal pursuing an internship in Bangalore, was picked up for questioning along with four of his friends after Belgaum social activist Jayant Tinaikar filed a complaint. The cause of the complaint was an MMS, allegedly derogatory to Prime Minister Narendra Modi. After interrogation, the Khanapur (Belgaum) police let Waqas off on the ground that Waqas was not the originator of the MMS, and that Mr. Tinaikar had provided an incorrect mobile phone number.

In another part of the country, Digvijaya Singh is vocal about the Indian police’s zealous policing of anti-Modi comments, while they were all but invisible when former Prime Minister Dr. Manmohan Singh was the target of abusive remarks.

Kerala:

The Anti-Piracy Cell of Kerala Police plans to target those uploading pornographic content to the Internet and selling it through memory cards. A circular to this effect has been issued to all police stations in the state, and civil society cooperation is requested.

In other news, Ernakulam MLA Hibi Eden inaugurated “Hibi on Call”, a public outreach programme that allows constituents to reach the MLA directly. A call on 1860 425 1199 registers complaints.

Maharashtra:

Mumbai police are investigating pizza delivery by an unmanned drone, which they consider a security threat.

Tamil Nadu:

Small and home-run businesses in Chennai are flourishing with the help of WhatsApp and Facebook: Mohammed Gani helps his customers match bangles with WhatsApp images, Ayeesha Riaz and Bhargavii Mani send cakes and portraits to Facebook-initiated customers. Even doctors spread information and awareness using Facebook. In Madurai, you can buy groceries online, too.

Opinion:

Chethan Kumar fears that Indian cyberspace is strangling freedom of expression through the continued use of the ‘infamous’ Section 66A of the Information Technology Act, 2000 (as amended in 2008). Sunil Garodia expresses similar concerns, noting a number of arrests made under Section 66A.

However, Ankan Bose has a different take; he believes there is a thin but clear line between freedom of expression and a ‘freedom to threaten’, and believes Devu Chodankar and Syed Waqar may have crossed that line. For more on Section 66A, please redirect here.

While Nikhil Pahwa is cautious of the new government’s stance towards Internet freedoms, given the (as yet) mixed signals of its ministers, Shaili Chopra ruminates on the new government’s potential dive into a “digital mutiny and communications revolution” and wonders about Modi’s social media management strategy. For Kashmir Times reader Hardev Singh, even Kejriwal’s arrest for allegedly defaming Nitin Gadkari will lead to a chilling effect on freedom of expression.

Elsewhere, the Hindustan Times is intent on letting Prime Minister Narendra Modi know that his citizens demand their freedom of speech and expression. Civil society and media all over India express their concerns for their freedom of expression in light of the new government.

Legislating for Privacy - Part II

The article was published in the Hoot on May 20, 2014.

In October 2010, the Department of Personnel and Training ("DOPT") of the Ministry of Personnel, Public Grievances and Pensions released an ‘Approach Paper’ towards drafting a privacy law for India. The Approach Paper claims to be prepared by a leading Indian corporate law firm that, to the best of my knowledge, has almost no experience of criminal procedure or constitutional law. The Approach Paper resulted in the drafting of a Right to Privacy Bill, 2011 ("DOPT Bill") which, although it has suffered several leaks, has neither been published for public feedback nor sent to the Cabinet for political clearance prior to introduction in Parliament.

Approach Paper and DOPT Bill

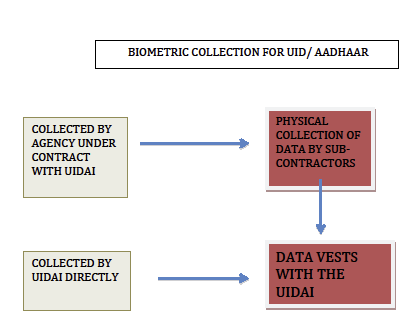

The first article in this two-part series broadly examined the many legal facets of privacy. Notions of privacy have long informed law in common law countries and have been statutorily codified to protect bodily privacy, territorial or spatial privacy, locational privacy, and so on. These fields continue to evolve and advance; for instance, the legal imperative to protect intimate body privacy from violation has now expanded to include biometric information, and the protection given to the content of personal communications that developed over the course of the twentieth century is now expanding to encompass metadata and other ‘information about information’.

The Approach Paper suffers from several serious flaws, the largest of which is its conflation of commercial data protection and privacy. It ignores the diversity of privacy law and jurisprudence in the common law, instead concerning itself wholly with commercial data protection. This creates a false equivalency, albeit one that can be rectified by renaming the endeavour to describe commercial data protection only.

However, there are other errors. The paper claims that no right of action exists for privacy breaches between citizens inter se. This is false: the civil wrongs of nuisance, interference with enjoyment, invasion of privacy, and other similar torts and actionable claims operate to redress privacy violations. In fact, in the case of Ratan Tata v. Union of India that is currently being heard by the Supreme Court of India, at least two parties are arguing that privacy is already adequately protected by civil law. Further, the criminal offences of nuisance and defamation, amongst others, and the recently introduced crimes of stalking and voyeurism, all create rights of action for privacy violations. These measures are incomplete (this is not contested; the premise of these articles is the need for better privacy protection law), but denying their existence is not useful.

The shortcomings of the Approach Paper are reflected in the draft legislation it resulted in. A major concern with the DOPT Bill is its amateur treatment of surveillance and interception of communications. This is inevitable for the Approach Paper does not consider this area at all although there is sustained and critical global and national attention to the issues that attend surveillance and communications privacy. For an effort to propose privacy law, this lapse is quite astonishing. The Approach Paper does not even examine if Parliament is competent to regulate surveillance, although the DOPT Bill wades into this contested turf.

Constitutionality of Interceptions

In a federal country, laws are weighed by the competence of their legislatures and struck down for overstepping their bounds. In India, the powers to legislate arise from entries that are contained in three lists in Schedule VII of the Constitution. The power to legislate in respect of intercepting communications traditionally emanates from Entry 31 of the Union List, which vests the Union – that is, Parliament and the Central Government – with the power to regulate “Posts and telegraphs; telephones, wireless, broadcasting and other like forms of communication” to the exclusion of the States. Hence, the Indian Telegraph Act, 1885, and the Indian Post Office Act, 1898, both Union laws, contain interception provisions. However, after this scheme had held the field for more than a century, the Supreme Court overturned it in Bharat Shah’s case in 2008.

The case challenged the telephone interception provisions of the Maharashtra Control of Organised Crime Act, 1999 ("MCOCA"), a State law that appeared to transgress into legislative territory reserved for the Union. The Supreme Court held that Maharashtra’s interception provisions were valid and arose from powers granted to the States – that is, State Assemblies and State Governments – by Entries 1 and 2 of the State List, which deal with “public order” and “police” respectively. This cleared the way for several States to frame their own communications interception regimes in addition to Parliament’s existing laws. The question of what happens when the two regimes clash has not been answered yet. India’s federal scheme anticipates competing inconsistencies between Union and State laws, but only when these laws derive from the Concurrent List which shares legislative power. In such an event, the ‘doctrine of repugnancy’ privileges the Union law and strikes down the State law to the extent of the inconsistency.

In competitions between Union and State laws that do not arise from the Concurrent List but instead from the mutually exclusive Union and State Lists, the ‘doctrine of pith and substance’ tests the core substance of the law and traces it to one of the two Lists. Hence, in a conflict, a Union law the substance of which was traceable to an entry in the State List would be struck down, and vice versa.

However, the doctrine permits incidental interferences that are not substantive. For example, as in a landmark 1946 case, a State law validly regulating moneylenders may incidentally deal with promissory notes, a Union field, since the interference is not substantive. Since surveillance is a police activity, and since “police” is a State subject, care must be taken by a Union surveillance law to remain within the pale of constitutionality by only incidentally affecting police procedure. Conversely, State surveillance laws were required to stay clear of the Union’s exclusive interception power until Bharat Shah’s case dissolved this distinction without answering the many questions it threw up.

Since the creation of the Republic, India’s federal scheme was premised on the notion that the Union and State Lists were exclusive of each other. Conceptually, the Union and the States could not have competing laws on the same subject. But Bharat Shah did just that; it located the interception power in both the Lists and did not enunciate a new doctrine to resolve their (inevitable) future conflict. This both disturbs Indian constitutional law and goes to the heart of surveillance and privacy law.

Three Principles of Interception

Apart from the important questions regarding legislative competence and constitutionality, the DOPT Bill proposed weak, ill-informed, and poorly drafted provisions to regulate surveillance and interceptions. It serves no purpose to further scrutinise the 2011 DOPT Bill. Instead, at this point, it may be constructive to set out the broad contours of a good interceptions regulation regime. Some clarity on the concepts: intercepting communications means capturing the content and metadata of oral and written communications, including letters, couriers, telephone calls, facsimiles, SMSs, internet telephony, wireless broadcasts, emails, and so on. It does not include activities such as the visual capturing of images, location tracking or physical surveillance; these are separate aspects of surveillance, of which interception of communications is a part.

Firstly, all interceptions of communications must be properly sanctioned. In India, under Rule 419A of the Indian Telegraph Rules, 1951, interceptions are authorised by the Home Secretary, an unelected career bureaucrat, or by a junior officer deputised by the Home Secretary, who has even less accountability. In certain circumstances, even senior police officers can authorise interceptions. Copies of the interception orders are supposed to be sent to a Review Committee, consisting of three more unelected bureaucrats, for bi-monthly review. No public information exists, despite exhaustive searching, regarding the authorisers and numbers of interception orders and the appropriateness of the interceptions.

The Indian system derives from outdated United Kingdom law that also enables executive authorities to order interceptions. But, the UK has constantly revisited and revised its interception regime; its present avatar is governed by the Regulation of Investigatory Powers Act, 2000 ("RIPA") which creates a significant oversight mechanism headed by an independent commissioner, who monitors interceptions and whose reports are tabled in Parliament, and quasi-judicially scrutinised by a tribunal comprised of judges and senior independent lawyers, which hears public complaints, cancels interceptions, and awards monetary compensation. Put together, even though the current UK interceptions system is executively sanctioned, it is balanced by independent and transparent quasi-judicial authorities.

In the United States, all interceptions are judicially sanctioned because American constitutional philosophy – the separation of powers doctrine – requires state action to be checked and balanced. Hence, ordinary interceptions of criminals’ communications as also extraordinary interceptions of perceived national security threats are authorised only by judges, who are ex hypothesi independent, although, as the PRISM affair teaches us, independence can be subverted. In comparison, India’s interception regime is incompatible with its democracy and must be overhauled to establish independent and transparent authorities to properly sanction interceptions.

Secondly, no interceptions should be sanctioned but upon ‘probable cause’. Simply described, probable cause is the standard that convinces a reasonable person of the existence of criminality necessary to warrant interception. Probable cause is an American doctrine that flows from the US Constitution’s Fourth Amendment that protects the rights of people to be secure in places in which they have a reasonable expectation of privacy. There is no equivalent standard in UK law, except perhaps the common law test of reasonability that attaches to all government action that abridges individual freedoms. If a coherent ‘reasonable suspicion’ test could be coalesced from the common law, I think it would fall short of the strictness that the probable cause doctrine imposes on the executive. Therefore, the probable cause requirement is stronger than the ordinary constraint of reasonability but weaker than the standard of reasonable doubt beyond which courts may convict. In this spectrum of acceptable standards, India’s current law in section 5(2) of the Indian Telegraph Act, 1885 is the weakest for it permits interceptions merely “on the occurrence of any public emergency or in the interest of public safety”, which determination is left to the “satisfaction” of a bureaucrat. And, under Rule 419A(2) of the Telegraph Rules, the only imposition on the bureaucrat when exercising this satisfaction is that the order “contain reasons” for the interception.

Thirdly, all interceptions should be warranted. This point refers not to the necessity or otherwise of the interception, but to the framework within which it should be conducted. Warrants should clearly specify the name and clear identity of the person whose communications are sought to be intercepted. The target person’s identity should be linked to the specific means of communication upon which the suspected criminal conversations take place. Therefore, if the warrant lists one person’s name but another person’s telephone number – which, because of the general ineptness of many police forces, is not uncommon – the warrant should be rejected and the interception cancelled. And, by extension, the specific telephone number, or email account, should be specified. A warrant against a person called Rahul Kumar, for instance, cannot be executed against all Rahul Kumars in the vicinity, nor also against all the telephones that the one specific Rahul Kumar uses, but only against the one specific telephone number that is used by the one specific Rahul Kumar. Warrants should also specify the duration of the interception, the officer responsible for its conduct and thereby liable for its abuse, and other safeguards. Some of these concerns were addressed in 2007 when the Telegraph Rules were amended, but not all.

A law that fails to substantially meet the standards of these principles is liable, perhaps in the not too distant future, to be read down or struck down by India’s higher judiciary. But, besides the threat of judicial review, a democratic polity must protect the freedoms and diversity of its citizens by holding itself to the highest standards of the rule of law, where the law is just.

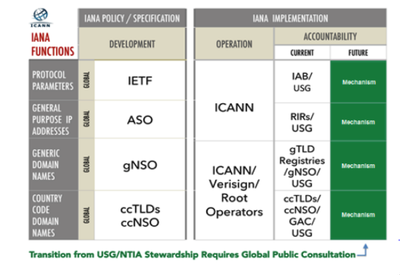

Accountability of ICANN

S. Kumar, Accountability of ICANN.pdf

—

PDF document,

135 kB (138513 bytes)

FOEX Live: May 28-29, 2014

Media focus on the new government and its ministries and portfolios has been extensive, and to my knowledge, few newspapers or online sources have reported violations of freedom of speech. However, on his first day in office, the new I&B Minister, Prakash Javadekar, acknowledged the importance of press freedom, avowing that it was the “essence of democracy”. He has assured that the new government will not interfere with press freedom.

Assam:

A FICCI discussion in Guwahati, attended among others by Microsoft and Pricewaterhouse Coopers, focused on the role of information technology in governance.

Goa:

Following the furore over allegedly inflammatory, ‘hate-mongering’ Facebook posts by shipping engineer Devu Chodankar, a group of Goan netizens formed a ‘watchdog forum’ to police “inappropriate and communally inflammatory content” on social media. Diana Pinto feels, however, that some ‘compassion and humanism’ ought to have prompted only a stern warning in Devu Chodankar’s case, and not an FIR.

Karnataka:

Syed Waqar was released by Belgaum police after questioning revealed he was a recipient of the anti-Modi MMS. The police are still tracing the original sender.

Madhya Pradesh:

The cases of Shaheen Dhada and Rinu Srinivasan, and recently of Syed Waqar and Devu Chodankar have left Indore netizens overly cautious about “posting anything recklessly on social media”. Some feel it is a blow to democracy.

Maharashtra:

In Navi Mumbai, the Karjat police seized several computers, hard disks and blank CDs from the premises of the Chandraprabha Charitable Trust in connection with an investigation into sexual abuse of children at the Trust’s school-shelter. The police seek to verify whether the accused recorded any obscene videos of child sexual abuse.

In Mumbai, even as filmmakers, filmgoers, artistes and LGBT people celebrated the Kashish Mumbai International Queer Film Festival, all remained apprehensive of the new government’s social conservatism, and were aware that the films portrayed acts now illegal in India.

Manipur:

At the inauguration of the 42nd All Manipur Shumang Leela Festival, V.K. Duggal, State Governor and Chairman of the Manipur State Kala Akademi, warned that the art form was under threat in the digital age, as Manipuri films are replacing it in popularity.

Rajasthan:

Following the lead of the Lok Sabha, the Rajasthan state assembly has adopted a digital conference and voting system to make the proceedings in the House more efficient and transparent.

Seemandhra:

Seemandhra Chief Minister designate N. Chandrababu Naidu promised a repeat of his hi-tech city miracle ‘Cyberabad’ in Seemandhra.

West Bengal:

The West Bengal government has hired the PSU Urban Mass Transit Company Limited to study, install and operationalize an Intelligent Transport System for public transport in Kolkata. GPS will give passengers real-time information on bus routes and availability. While private telecom operators have offered free services to the transport department, there are no reports of an end-date or estimated expenditure for the project.

News and Opinion:

Over a week ago, Avantika Banerjee wrote a speculative post on the new government’s stance towards Internet policy. At Fair Observer, Gurpreet Mahajan laments that community politics in India has made a lark of banning books.

India’s Computer Emergency Response Team (CERT-In) has detected high-level virus activity in Microsoft’s Internet Explorer 8, and recommends upgrading to Internet Explorer 11.

Of the projected 400 million users that Twitter will have by 2018, India and Indonesia are expected to outdo the United Kingdom in user base. India saw nearly 60% growth in user base this year, and Twitter played a major role in Elections 2014. India will have over 18.1 million users by 2018.

Elsewhere in the world:

Placing a bet on the ‘Internet of Everything’, Cisco CEO John Chambers predicted a “brutal consolidation” of the IT industry in the next five years. A new MarketsandMarkets report suggests that the value of the ‘Internet of Things’ may reach US $1423.09 billion by 2020 at an estimated CAGR of 4.08% from 2014 to 2020.

China’s Xinhua News Agency announced its month-long campaign to fight “infiltration from hostile forces at home and abroad” through instant messaging. Message providers WeChat, Momo, Mi Talk and Yixin have expressed their willingness to cooperate in targeting those engaging in fraud, or in spreading ‘rumours’, violence, terrorism or pornography. In March this year, WeChat deleted at least 40 accounts with political, economic and legal content.

Thailand’s military junta interrupted a national television broadcast to deny any role in an alleged block of Facebook. The site went down briefly and caused alarm among netizens.

Snowden continues to insist that he is not a Russian spy, and has no relationship with the Russian government.

Search and Seizure and the Right to Privacy in the Digital Age: A Comparison of US and India

For example, the ubiquitous smartphone is more than a communication device: it can maintain a complete record of the user’s communications data, photos, videos and documents, along with a multitude of other deeply personal information, such as application data that includes location tracking, or financial data. As computers and phones increasingly allow us to keep massive amounts of personal information accessible at the touch of a button or screen (a standard smartphone can hold anything between 500 MB and 64 GB of data), the increasing reliance on computers as information-silos also exponentially increases the harms associated with the loss of control over such devices and the information they contain. This vulnerability is especially visceral against the backdrop of law enforcement and the use of coercive state power to maintain security, juxtaposed with the individual’s right to secure their privacy.

American Law - The Fourth Amendment Protection against Unreasonable Search and Seizure

The right to conduct a search and seizure of persons or places is an essential part of investigation and the criminal justice system. The societal interest in maintaining security is an overwhelming consideration which gives the state a restricted mandate to do all things necessary to keep law and order, which includes acquiring all possible information for the investigation of criminal activities. The restriction on this mandate is based on recognizing the perils of state-endorsed coercion and its implications for individual liberty. Digitally stored information, which is increasingly becoming a major site of investigative information, is thus essential in modern day investigation techniques. Further, specific crimes which have emerged out of the changing scenario, namely, crimes related to the internet, require investigation almost exclusively at the level of digital evidence. The role of courts and policy makers, then, is to balance the state’s mandate to procure information with the citizens’ right to protect it.

The scope of this mandate is what is currently being considered before the Supreme Court of the United States, which began hearing arguments in the cases Riley v. California,[1] and United States v. Wurie,[2] on the 29th of April, 2014. At issue is the question of whether the police should be allowed to search the cell phones of individuals upon arrest, without obtaining a specific warrant for such a search. The cases concern instances where the accused was arrested on account of a minor infraction and a warrantless search was conducted, which included the search of cell phones in their possession. The information revealed in the phones ultimately led to evidence of further crimes and the conviction of the accused for graver crimes. The appeal is for a suppression of the evidence so obtained, on grounds that the search violates the Fourth Amendment of the American Constitution. Although there has been a plethora of conflicting decisions by various lower courts (including the judgements in Wurie and Riley),[3] the Federal Supreme Court will for the first time decide whether cell phone searches should require a higher burden under the Fourth Amendment.

At the core of the issue are considerations of individual privacy and the right to limit the state’s interference in private matters. The Fourth Amendment to the Constitution of the United States expressly grants protection against unreasonable searches and seizures;[4] however, without a clear definition of what is unreasonable, it has been left to the courts to interpret, in every case, the situations in which the right to non-interference would trump the interest in obtaining information, leading to vast and varied jurisprudence on the issue. The jurisprudence stems from the wide Fourth Amendment protection against unreasonable government interference, where the rule is generally that any warrantless search is unreasonable, unless covered by certain exceptions. The standard for protection under the Fourth Amendment is a subjective standard, determined by the state of mind of the individual rather than by objective qualifiers such as physical location. It extends to all situations where individuals have a reasonable expectation of privacy, i.e., situations where individuals can legitimately expect privacy, and is not purely dependent upon the physical space being searched.[5]

Therefore, the requirement of reasonableness is generally only fulfilled when a search is conducted subsequent to obtaining a warrant from a neutral magistrate, by demonstrating probable cause to believe that evidence of unlawful activity would be found upon such a search. A warrant is, therefore, an important limitation on the search powers of the police. Further, the protection excludes roving or general searches and requires particularity of the items to be searched. The restriction derives its power from the exclusionary rule, which bars evidence obtained through an unreasonable search or seizure, whether obtained directly or through additional warrants based upon such evidence, from being used in subsequent prosecutions. However, several exceptions to the general rule have evolved, including cases where the search takes place upon the lawful arrest of an accused, a practice which is justified by the possibility of hidden weapons upon the accused or of destruction of important evidence.[6]

The appeal, if successful, would provide an exception to the rule that any search upon lawful arrest is always reasonable, by creating a caveat for the search of computer devices like smartphones. If the court does so, it would be an important recognition of the fact that evolving technologies have extended the concept of privacy beyond physical space, and that legal rules and standards that applied to privacy even twenty years ago are now anachronistic in an age where individuals can record their entire lives on an iPhone. Searching a person nowadays would lead not only to the recovery of calling cards or cigarettes, but also of phones and computers which can be the digital record of a person’s life, something which could not have been contemplated when the laws were drafted. Cell phone and computer searches are the equivalent of searches of thousands of documents, photos and personal records, and the expectation of privacy in such cases is much higher than in regular searches. Courts have already recognized that cell phones and laptop computers are objects in which the user may have a reasonable expectation of privacy, by treating them as analogous to a “closed container” which the police cannot search and which hence comes under the protection of the Fourth Amendment.[7]

On the other hand, cell phones and computers also hold data which could be instrumental in investigating criminal activity, and with technologies like remote wipes of computer data available, such data is always at risk of destruction if the investigation is delayed. Going by the oral arguments now being heard, the Court seems to be carving out a specific principle applicable to new technologies. The Court is likely to introduce subtleties specific to the technology involved: for example, it may seek to develop different principles for smartphones (at issue in Riley) and the more basic kind of cell phones (at issue in Wurie), or it may recognize that only certain kinds of information may be accessed,[8] or may even evolve a rule that would allow seizure, but not a search, of the cell phone before a search warrant can be obtained.[9] Recognizing that transformational technology needs to be reflected in technology-specific legal principles is an important step in keeping law and technology synchronised, and the additional recognition of a higher threshold for digital evidence and privacy would go a long way in securing digital privacy in the future.

Search and Seizure in India

Indian jurisprudence on privacy is a wide departure from that in the USA. Though it is difficult to strictly compartmentalize the many facets of the right to privacy, there is no express or implicit mention of such a right in the Indian Constitution. Although courts have also recognized the importance of procedural safeguards in protecting against unreasonable governmental interference, the recognition of the intrinsic right to privacy as non-interference, which may be different from the instrumental rights that criminal procedure seeks to protect (such as misuse of police power), is sorely lacking. The general law providing for the state’s power of search and seizure of evidence is found in the Code of Criminal Procedure, 1973.